Opportunities of various motion planning approaches.

1. Introduction

Dynamic agents encompass a wide range of entities, including mobile robots, autonomous vehicles, drones, unmanned aerial vehicles (UAVs), unmanned ground vehicles (UGVs), unmanned underwater vehicles (UUVs), and other objects in motion. An integral aspect of developing these agents, especially autonomous ones, is devising a course of action that lets them plan their own motion in varied situations. Motion planning is the computational process of navigating such entities from one place to another while avoiding collisions, optimizing for assessment criteria, and adapting to varying conditions. It generates a feasible and safe path to follow and enables efficient interaction between dynamic agents and their environment [1, 2]. This introductory chapter conceptualizes motion planning and offers insight into the various motion planning approaches for dynamic agents, along with their applications, significance, existing challenges that require further consideration, and future potential. It emphasizes the integral role of motion planning in shaping the future of robotics and autonomous systems.

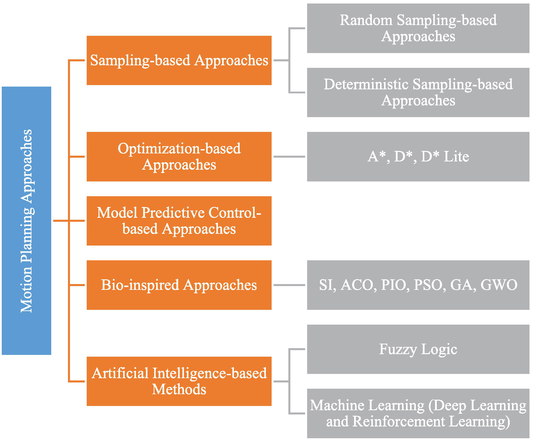

Motion planning comprises two components: path planning and trajectory planning. A path is a sequence of configuration vectors that capture independent attributes such as position, orientation, linear or angular velocity, steering angle, and acceleration. A trajectory is a sequence of spatio-temporal states in free space that is feasible for the agent's dynamics. The trajectory planner first generates a set of feasible, collision-free trajectories and then selects an optimal one by minimizing a cost function. Together, these components ensure safe and efficient motion, especially in complex, unknown, and ever-changing environments, which makes motion planning a crucial aspect of robotics and automation [3]. The choice of approach depends on the application and its requirements. Widely employed approaches include sampling-based, optimization-based, model predictive control (MPC), bio-inspired, and artificial intelligence (AI)-based methods, as illustrated in Figure 1. These methods offer opportunities but also face some restrictions [4].
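The generate-then-select step of a trajectory planner can be sketched as follows. This is a minimal illustration under stated assumptions, not a method taken from the cited works: the straight-line candidate set, the single circular obstacle, and the cost weights are all hypothetical, and the agent's dynamics are ignored for brevity.

```python
import math

def make_candidates(goal_x, offsets, steps=20):
    """Build straight-line candidates from (0, 0) to (goal_x, offset).

    Each trajectory is a list of spatio-temporal states (t, x, y).
    """
    candidates = []
    for off in offsets:
        traj = [(k / steps, goal_x * k / steps, off * k / steps)
                for k in range(steps + 1)]
        candidates.append(traj)
    return candidates

def is_collision_free(traj, obstacle, radius):
    """Check every state against one circular obstacle (assumed environment)."""
    ox, oy = obstacle
    return all(math.hypot(x - ox, y - oy) > radius for _, x, y in traj)

def cost(traj, w_length=1.0, w_offset=0.5):
    """Weighted sum of path length and final lateral offset (assumed weights)."""
    length = sum(math.hypot(x2 - x1, y2 - y1)
                 for (_, x1, y1), (_, x2, y2) in zip(traj, traj[1:]))
    return w_length * length + w_offset * abs(traj[-1][2])

def select_trajectory(candidates, obstacle, radius):
    """Keep the collision-free candidates and return the cheapest, or None."""
    feasible = [t for t in candidates if is_collision_free(t, obstacle, radius)]
    return min(feasible, key=cost) if feasible else None

candidates = make_candidates(10.0, offsets=[-2.0, -1.0, 0.0, 1.0, 2.0])
best = select_trajectory(candidates, obstacle=(5.0, 0.0), radius=0.4)
```

Here the zero-offset candidate passes through the obstacle and is discarded, so the planner falls back to the cheapest of the remaining candidates. A practical planner would generate candidates that respect the agent's dynamics and use a richer cost function.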

Figure 1.

Classification of motion planning approaches.

The first category is sampling-based approaches, which are computationally efficient and effective in high-dimensional spaces. These methods are further categorized as random and de-randomized (deterministic) sampling-based approaches [5]. Algorithms that randomly sample the configuration space to generate feasible paths are referred to as random sampling-based methods, and they have proven successful for robotic motion planning problems. They conduct a probabilistic search that explores the configuration space with independent and identically distributed random samples. Each sample is assessed by a collision detection module, which is treated as a "black box" in motion planning. Probabilistic roadmaps (PRMs), rapidly exploring random trees (RRTs), their asymptotically optimal variant RRT*, the fast marching tree algorithm (FMT*), and the closed-loop rapidly exploring random tree (CL-RRT) are examples of random sampling-based approaches [6]. Deterministic sampling-based algorithms, on the other hand, include the physics-based motion planner (PMP), which remarkably simplifies the certification process, enables offline computation, and in certain cases drastically simplifies various operations [7].

Next are optimization-based approaches, which determine the optimal path by minimizing a cost function. They are significant for applications where path quality is integral [8]. Optimization-based strategies include the A* search algorithm, dynamic A* (D*), and D* Lite. The A* algorithm, a heuristic-guided best-first search, is fast when given a good heuristic and returns an optimal path when that heuristic is admissible. The D* algorithm maintains a partial, optimal cost map and works in unbounded environments. It is efficient in both memory and computation. The D* Lite algorithm combines heuristic and incremental search to handle multiple navigation tasks in dynamic environments. It is easy to understand and analyze [9].
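To make the role of the heuristic in A* concrete, the following is a minimal sketch on a small occupancy grid. The grid, the 4-connected unit-cost moves, and the Manhattan-distance heuristic are assumptions chosen for illustration, and the function name `astar` is hypothetical rather than taken from any cited implementation.

```python
import heapq

def astar(grid, start, goal):
    """Return a shortest 4-connected path on a grid of 0 (free) / 1 (blocked), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable

grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
```

In this example the only gap in the wall is at column 2, so the path must detour through it. D* and D* Lite extend this kind of search by repairing the solution incrementally when the map changes instead of replanning from scratch.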

Model predictive control approaches are widely used as optimal control methods for high-level trajectory planning, resulting in safe and fast motion planning for robots. MPC is well suited to systems with dynamic and stochastic constraints. It requires a system model, which it uses to optimize control inputs and feasible trajectories recursively over a finite time horizon, and it takes updates of the environmental state into account during planning. Wei and Yang survey and highlight the perspectives of using MPC for motion planning and control of autonomous marine vehicles (AMVs) [10]. Their survey shows that MPC is highly successful in enhancing the guidance and control capabilities of AMVs through effective and practical planning. In multi-robot systems, existing motion planning algorithms often suffer from poor coordination and low real-time performance. In such cases, model predictive contouring control (MPCC) allows tracking accuracy and productivity to be separated so that productivity can be adjusted [11]. MPCC lets each robot exchange predicted paths with the other robots while collision-free motions are generated in parallel.
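The receding-horizon idea behind MPC can be illustrated with a deliberately small sketch. Everything here is an assumption made for illustration: a one-dimensional double-integrator model, three admissible accelerations, a hard speed limit, a quadratic cost, and brute-force enumeration in place of the numerical solvers used in practice.

```python
from itertools import product

DT = 0.5                      # time step (assumed)
HORIZON = 6                   # number of prediction steps (assumed)
CONTROLS = (-1.0, 0.0, 1.0)   # admissible accelerations (assumed)
V_MAX = 3.0                   # speed limit treated as a hard constraint (assumed)

def step(p, v, a):
    """Double-integrator model: acceleration updates velocity, velocity updates position."""
    v_next = v + a * DT
    return p + v_next * DT, v_next

def rollout_cost(p, v, controls, target):
    """Cost of a control sequence over the horizon, or None if it breaks the speed limit."""
    total = 0.0
    for a in controls:
        p, v = step(p, v, a)
        if abs(v) > V_MAX:
            return None
        total += (p - target) ** 2 + 0.1 * v ** 2 + 0.01 * a ** 2
    return total

def plan_first_control(p, v, target):
    """Optimize over the finite horizon by enumeration and return only the first control.

    The all-zero sequence keeps the speed unchanged, so a feasible sequence
    always exists as long as the current speed respects the limit.
    """
    best_cost, best_seq = float("inf"), None
    for seq in product(CONTROLS, repeat=HORIZON):
        c = rollout_cost(p, v, seq, target)
        if c is not None and c < best_cost:
            best_cost, best_seq = c, seq
    return best_seq[0]

def run_mpc(p, v, target, n_steps):
    """Closed loop: re-plan from the latest state at every step, apply one control."""
    trace = [(p, v)]
    for _ in range(n_steps):
        a = plan_first_control(p, v, target)
        p, v = step(p, v, a)
        trace.append((p, v))
    return trace

trace = run_mpc(0.0, 0.0, target=5.0, n_steps=30)
```

Only the first control of each optimized sequence is applied before the problem is solved again from the newly measured state, which is how the planner absorbs updates to the environment. The constraint check inside `rollout_cost` is where obstacle or actuator limits would enter in a fuller formulation.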

Bio-inspired approaches draw inspiration from mechanisms observed in natural systems to give dynamic agents the ability to manage the complexities of dynamic environments [12]. Motion planning based on these approaches offers a pathway to more robust, adaptive, and efficient navigation strategies. Swarm intelligence (SI), particle swarm optimization (PSO), ant colony optimization (ACO), pigeon-inspired optimization (PIO), genetic algorithm (GA), and gray wolf optimizer (GWO) are some bio-inspired approaches. SI leverages the collective behavior of groups of simple agents that mimic the coordination of animal swarms. All the robots in the swarm follow local rules, which leads to intelligent, emergent group behavior [13].

AI-based approaches learn motion policies from data and are gaining traction. These techniques compute quickly and are widely employed for online planning. AI treats motion planning as a sequential decision-making problem and tackles it with fuzzy logic and machine learning (ML) algorithms, including reinforcement learning (RL) and deep learning (DL) [14]. For instance, ML simulates learning behavior and then determines path-planning solutions for fast, robust agent-based systems. A deep Q-network (DQN) algorithm has been implemented that converges faster and learns path-planning solutions for multi-robot systems more rapidly [15]. Table 1 summarizes the advantages of implementing the above-mentioned motion planning approaches.
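As one concrete instance of a bio-inspired planner, the sketch below uses a basic PSO to place a single waypoint between a start and a goal around a circular obstacle. The scenario, the penalty-based cost, and the parameter values (inertia `w`, acceleration coefficients `c1` and `c2`) are illustrative assumptions, not settings reported in the cited works.

```python
import math
import random

START, GOAL = (0.0, 0.0), (10.0, 0.0)   # assumed scenario
OBSTACLE, RADIUS = (5.0, 0.0), 1.5      # one circular obstacle (assumed)

def path_cost(waypoint):
    """Length of start -> waypoint -> goal plus a penalty for samples inside the obstacle."""
    pts = [START, tuple(waypoint), GOAL]
    length = sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))
    penalty = 0.0
    for a, b in zip(pts, pts[1:]):
        for k in range(11):
            s = k / 10
            q = (a[0] + s * (b[0] - a[0]), a[1] + s * (b[1] - a[1]))
            if math.dist(q, OBSTACLE) < RADIUS:
                penalty += 100.0
    return length + penalty

def pso(cost, dim, bounds, n_particles=20, n_iters=50, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO; returns the best position, its cost, and the cost history."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    history = [gbest_cost]
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
        history.append(gbest_cost)
    return gbest, gbest_cost, history

best_wp, best_cost, history = pso(path_cost, dim=2, bounds=(-5.0, 15.0))
```

Each particle follows only two local rules, attraction to its own best position and to the swarm's best, yet the group as a whole converges on a good waypoint. The same loop carries over to longer paths by increasing `dim` to cover several waypoints.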

| References | Type of dynamic robot | Applied approach | Environment | Opportunities |
|---|---|---|---|---|
| [9] | Autonomous vehicles | Nonlinear model predictive control (NMPC)-based motion planning | Experiment | Shortens the prediction horizon. Ensures safety. Improves lap time |
| [15] | Multi-robots | DQN | Simulation | Converges faster. Learns path-planning solutions more rapidly |
| [16] | Unmanned aircraft systems (UAS) | MP-RRT# | Simulation | Generates a feasible trajectory satisfying dynamic constraints |
| [17] | Multiple UAVs | A* | Experiment | Improves success probability. Generates shorter paths in less time |
| [18] | UAV | Hybrid PSO | Experiment | More feasible and effective in path planning |

Table 1.

The adoption of robotics and automation is increasing across industries for tasks that are dangerous, repetitive, or beyond human capabilities. Robots, autonomous vehicles, and drones are deployed in settings ranging from healthcare to surveillance missions. In surveillance missions, communication between a dynamic agent and its control base may be lost [19]. Effective path planning then guides the robot, protecting it from damage and improving its exploration. In the agriculture sector, autonomous robots and UAVs navigate fields for planting, crop monitoring, spraying fertilizers and pesticides, and even harvesting. In healthcare settings, robots with precise motion planning assist in surgery, patient care, medicine delivery, and rehabilitation. In the manufacturing industry, industrial and service robots use motion planning to perform tasks such as assembly, pick-and-pack operations, navigation, and delivery in cluttered environments [20]. In addition, motion planning for self-driving cars accounts for factors such as road conditions, traffic, and weather. Motion planning owes its significance to several compelling reasons: safety, efficiency, and adaptability. Navigating dynamic agents with collision avoidance, obstacle avoidance, and minimal risk ensures the safety of the agents as well as of humans and the environment [21]. Motion planning makes autonomous vehicles efficient by generating optimal paths that reduce energy consumption, travel time, and operational costs. Furthermore, it allows dynamic agents to adapt and respond to changing and unpredictable environments.

Motion planning faces several challenges arising from real-time decision-making, high-dimensional state spaces, uncertainty and complexity, human-robot interaction, and large data and processing requirements [22]. First, the need for quick decisions in response to changing conditions in dynamic and complex environments puts motion planning under time pressure. Second, the state space is high-dimensional in certain cases, which makes feasible path planning computationally intensive. Additionally, uncertainty in sensor measurements and in environment models must be addressed. More advanced and interconnected systems also increase the complexity of motion planning. Moreover, robots and humans coexist in many environments, so considering human intentions and behavior is crucial for safe and natural human-robot interaction. Furthermore, some approaches, especially AI-based methods, require huge amounts of high-quality data for training [23]. Collecting, processing, and analyzing such data is expensive and difficult, which restricts the application of these methods. Finally, some approaches lead to undesirable and unpredictable behavior in complex environments, generating unsafe or suboptimal routes that may cause accidents. All these challenges must be overcome to harness the full potential of autonomous systems.

The future of dynamic agents looks promising as advances in research and technology continue to shape their development. Motion planning will play a central role in efficient transportation systems through the integration of advanced sensor technologies, AI, and vehicle-to-everything (V2X) communication [24]. Coordinating autonomous vehicles in this way will optimize traffic management, reduce congestion, and enhance public transportation. Advanced approaches will facilitate safe human-robot collaboration and interaction, making collaborative robots (cobots) and humanoids more prevalent in various industries. Ongoing research concentrates on developing more reliable and robust algorithms, such as deep reinforcement learning [25]. These will make inroads into motion planning, enabling agents to adapt to more complex and uncertain environments while ensuring their safety.

References

1. Chen M, Herbert SL, Hu H, Ye P, Fisac JF, Bansal S, et al. Fastrack: A modular framework for real-time motion planning and guaranteed safe tracking. IEEE Transactions on Automatic Control. 2021;66(12):5861-5876
2. Dong L, He Z, Song C, Sun C. A review of mobile robot motion planning methods: From classical motion planning workflows to reinforcement learning-based architectures. Journal of Systems Engineering and Electronics. 2023;34(2):439-459
3. Ali ZA, Zhangang H, Hang WB. Cooperative path planning of multiple UAVs by using max–min ant colony optimization along with cauchy mutant operator. Fluctuation and Noise Letters. 2021;20(01):2150002
4. Lin S, Liu A, Wang J, Kong X. A review of path-planning approaches for multiple mobile robots. Machines. 2022;10(9):773
5. Thomason W, Knepper RA. A unified sampling-based approach to integrated task and motion planning. In: The International Symposium of Robotics Research. Cham: Springer International Publishing; 2019. pp. 773-788
6. Véras LGDO, Medeiros FLL, Guimarães LNF. Systematic literature review of sampling process in rapidly exploring random trees. IEEE Access. 2019;7:50933-50953
7. Gholamhosseinian A, Seitz J. A comprehensive survey on cooperative intersection management for heterogeneous connected vehicles. IEEE Access. 2022;10:7937-7972
8. Ali ZA, Han Z. Path planning of hovercraft using an adaptive ant colony with an artificial potential field algorithm. International Journal of Modelling, Identification and Control. 2021;39(4):350-356
9. Vázquez JL, Brühlmeier M, Liniger A, Rupenyan A, Lygeros J. Optimization-based hierarchical motion planning for autonomous racing. In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE; 2020. pp. 2397-2403
10. Wei H, Yang S. MPC-based motion planning and control enables smarter and safer autonomous marine vehicles: Perspectives and a tutorial survey. IEEE/CAA Journal of Automatica Sinica. 2022
11. Xin J, Yaoguang Q, Zhang F, Negenborn R. Distributed model predictive contouring control for real-time multi-robot motion planning. Complex System Modeling and Simulation. 2022;2(4):273-287
12. Ali ZA, Han Z, Masood RJ. Collective motion and self-organization of a swarm of UAVs: A cluster-based architecture. Sensors. 2021;21(11):3820
13. Darvishpoor S, Darvishpour A, Escarcega M, Hassanalian M. Nature-inspired algorithms from oceans to space: A comprehensive review of heuristic and meta-heuristic optimization algorithms and their potential applications in drones. Drones. 2023;7(7):427
14. Sun H, Zhang W, Yu R, Zhang Y. Motion planning for mobile robots—Focusing on deep reinforcement learning: A systematic review. IEEE Access. 2021;9:69061-69081
15. Yang Y, Juntao L, Lingling P. Multi-robot path planning based on a deep reinforcement learning DQN algorithm. CAAI Transactions on Intelligence Technology. 2020;5(3):177-183
16. Primatesta S, Osman A, Rizzo A. MP-RRT#: A model predictive sampling-based motion planning algorithm for unmanned aircraft systems. Journal of Intelligent and Robotic Systems. 2021;103:1-13
17. Hu Y, Yao Y, Ren Q, Zhou X. 3D multi-UAV cooperative velocity-aware motion planning. Future Generation Computer Systems. 2020;102:762-774
18. Ali ZA, Zhangang H. Multi-unmanned aerial vehicle swarm formation control using hybrid strategy. Transactions of the Institute of Measurement and Control. 2021;43(12):2689-2701
19. Queralta JP, Taipalmaa J, Pullinen BC, Sarker VK, Gia TN, Tenhunen H, et al. Collaborative multi-robot systems for search and rescue: Coordination and perception. arXiv preprint arXiv:2008.12610. 2020
20. Tamizi MG, Yaghoubi M, Najjaran H. A review of recent trend in motion planning of industrial robots. International Journal of Intelligent Robotics and Applications. 2023:1-22
21. Ali ZA, Masroor S, Aamir M. UAV based data gathering in wireless sensor networks. Wireless Personal Communications. 2019;106:1801-1811
22. Guo H, Wu F, Qin Y, Li R, Li K, Li K. Recent trends in task and motion planning for robotics: A survey. ACM Computing Surveys. 2023
23. Teng S, Hu X, Deng P, Li B, Li Y, Ai Y, et al. Motion planning for autonomous driving: The state of the art and future perspectives. IEEE Transactions on Intelligent Vehicles. 2023
24. Ali ZA, Israr A, Alkhammash EH, Hadjouni M. A leader-follower formation control of multi-UAVs via an adaptive hybrid controller. Complexity. 2021;2021:1-16
25. Aradi S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Transactions on Intelligent Transportation Systems. 2020;23(2):740-759