Rationales and system behavior for automatically applying directionality in order of increasing complexity.

Abstract

Sensorineural hearing loss is the most common type of permanent hearing loss. Most people with sensorineural hearing loss experience challenges with hearing in noisy situations, and this is the primary reason they seek help for their hearing loss. It also remains an area where hearing aid users often struggle. Directionality is the only hearing aid technology—in addition to amplification—proven to help hearing aid users hear better in noise. It amplifies sounds (sounds of interest) coming from one direction more than sounds (“noise”) coming from other directions, thereby providing a directional benefit. This book chapter describes the hearing-in-noise problem, natural directivity and hearing in noise, directional microphone systems, how directionality is quantified, and its benefits, limitations, and other clinical implications.

Keywords

- hearing-in-noise

- natural directivity

- directional microphone systems

- microphone arrays

- fixed and adaptive arrays

- benefits

- limitations

- clinical implications

1. Introduction

The inability to hear in noise is a strong driver of help-seeking among people with hearing loss. Although satisfaction with hearing aids is high among users, they are often relatively less satisfied with how well they hear in noisy backgrounds when wearing their hearing aids [1]. The most common solution for helping hearing aid users hear better in noise is the use of directional microphone systems, also called simply “directionality”. This technology allows hearing aids to amplify sound from one direction more than sound from other directions and can thereby reduce interfering noise that arises from a different location than the sound of interest. With digital technology, the performance capabilities and automatic control of directionality have increased in complexity and sophistication, with the purpose of increasing both benefit and ease of use for the hearing aid user.

2. Why do people with hearing loss have trouble hearing in noise?

People with sensorineural hearing loss (SNHL) have elevated hearing thresholds, meaning that they cannot hear soft sounds that people with normal hearing can. People with mild to even moderate-severe hearing loss often report that they have little to no difficulty hearing in one-on-one conversations in quieter environments but struggle to understand speech when multiple people are talking or when there is background noise. It is often these increased difficulties hearing in noise or in more adverse listening conditions that make them pursue hearing aids.

SNHL involves damage to the cochlea and often the peripheral neural pathway of the auditory system. Because this system is nonlinear in its behavior, so are the effects of damage on perception of sound. In addition to the loss of hearing sensitivity already mentioned, those with SNHL show effects on how sound above hearing thresholds is perceived, including:

Frequency-dependent loss of audibility. SNHL tends to affect high frequencies to a greater degree than lower frequencies. Because the sensation of loudness is dominated by lower frequency sound, some people may not even recognize that they have hearing loss until their hearing loss progresses enough that it impacts more daily listening situations. Many high-frequency consonant sounds are crucial for speech understanding, especially in background noise, and loss of audibility in higher frequencies can have a disproportionate negative impact (for a review, see Ref. [2]).

Reduced dynamic range of hearing. In SNHL, thresholds of hearing sensitivity are elevated, while levels of discomfort are not correspondingly higher. Therefore, the dynamic range (difference between threshold of hearing and loudness discomfort) is reduced. People with SNHL may not hear soft sounds but may find loud sounds equally as bothersome as those with normal hearing. This is partly because those with hearing loss experience a more rapid growth of loudness compared to people with normal hearing, which is referred to as “loudness recruitment”. How SNHL affects loudness perception is outlined in detail by Moore [3].

Poorer frequency and temporal resolution. The ability to discriminate sounds that are closely spaced in frequency and/or in time is less in persons with SNHL compared to those with normal hearing. This can result in some sounds being “covered up” by others, which contributes to difficulties hearing in noisy environments. There are excellent descriptions of reduced frequency and temporal resolution [3].

Reduced pitch perception. The ability to detect changes in frequency over time is poorer than normal with SNHL. This may impair, for example, the ability to perceive pitch patterns of speech, to discern which words in an utterance are emphasized, and to determine whether what is being said is a question or a statement. Pitch also conveys information about a speaker’s gender, age, and emotional state. This means that people with hearing loss may have more difficulty perceiving these basic but important aspects of speech and of the speaker’s intention. A more detailed review of the negative impact of reduced pitch perception is available [3].

Reduced binaural processing abilities. The ability to integrate, analyze, and compare information received from each ear is poorer with SNHL compared to those with normal hearing, and contributes to difficulties hearing in noisy environments. Differences in frequency, intensity, timing, and phase of information received by each ear in a listening environment provide the brainstem with cues needed to help determine the location a sound is coming from, and to best extract speech out of background noise. These cues are degraded in people with hearing loss. There are thorough overviews of binaural hearing abilities [3].

These effects all contribute to the difficulties hearing in noise that most people with SNHL report. Perceived difficulties with hearing in noise are also reflected in objective performance measures. SNHL results in poorer speech understanding in noise compared to normal hearing in the same situation. Many speech tests measure percent correct at a given signal-to-noise ratio (SNR). SNR describes the level of the signal of interest (e.g., speech) relative to the level of the background noise. It is expressed in decibels (dB), and an SNR of 0 dB indicates that the signal level is equal to the noise level. A positive SNR value indicates that the signal is greater than the noise, and a negative SNR value indicates that the signal is less than the noise. Effectively, the greater the SNR, the easier it is to hear the signal of interest among the noise. Besides percent-correct scores on speech recognition in noise tests, another useful way to quantify performance differences is to measure a person’s SNR loss [4]. SNR loss is defined as the increase in SNR required by a person with hearing loss to understand speech in noise, relative to the average SNR required for a person with normal hearing. Research has shown that people with hearing loss may require SNR improvements ranging from 2 to 18 dB, depending on the magnitude of the hearing loss, to hear as well as people with normal hearing under the same listening conditions [3, 4, 5, 6, 7, 8]. The reduced ability to understand speech in noise seems to mainly be caused by decreased audibility for people with mild and mild-moderate hearing losses. For people with severe and profound losses, and for some people with more moderate loss, decreased frequency and temporal resolution seem to play a larger role in their reduced ability to understand speech in noise [2, 3].
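The two quantities defined above can be illustrated with a short Python sketch. The function names and all numeric values are illustrative assumptions, not data from the cited studies; the SNR is computed from RMS amplitudes, and SNR loss is taken as the difference between a listener's required SNR and a normal-hearing reference measured with the same test and criterion.

```python
import math


def snr_db(signal_rms: float, noise_rms: float) -> float:
    """SNR in dB from the RMS amplitudes of signal and noise (same units)."""
    return 20.0 * math.log10(signal_rms / noise_rms)


def snr_loss_db(listener_required_snr_db: float, normal_required_snr_db: float) -> float:
    """Extra SNR a listener needs relative to the normal-hearing average."""
    return listener_required_snr_db - normal_required_snr_db


# Equal signal and noise levels give 0 dB; a stronger signal gives a positive SNR.
print(snr_db(1.0, 1.0))
print(snr_db(2.0, 1.0))
print(snr_db(0.5, 1.0))

# Hypothetical listener needing +6 dB where the normal-hearing average is -2 dB.
print(snr_loss_db(6.0, -2.0))
```

In this hypothetical case the listener would need the SNR to improve by 8 dB to perform like the normal-hearing reference, which falls within the 2 to 18 dB range reported above.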

SNHL occurs with various degrees and combinations of decreased audibility, reduced dynamic range, deficits in frequency/pitch and temporal resolution, and poor binaural hearing processing. Regardless of the specific deficits for a person with hearing loss, SNHL results in poorer speech understanding compared to normal hearing in the same situation even when the person with hearing loss uses hearing aids.

If the hearing loss is not too severe, loss of audibility can be compensated for by hearing aids, because amplification can focus on those frequencies where speech has the softest components (typically the high frequencies), which is likewise where hearing loss is typically the greatest. On the other hand, it is more challenging for hearing aids to compensate for the other auditory processing deficits that cause difficulties hearing in background noise. For example, reduced frequency and temporal resolution degrade important speech cues, effectively decreasing the SNR of the auditory information processed at the auditory periphery before it ascends to the auditory cortex where it is “heard” [2]. All hearing aids can do to minimize the problems caused by reduced frequency resolution is to keep noise from being amplified as much as possible, and to provide appropriate variation of gain by frequency so that low-frequency parts of speech or noise do not mask the high-frequency parts of speech and so that frequency regions dominated by noise are not louder than frequency regions dominated by speech.

A proven solution to compensate for hearing difficulties in noise beyond what can be obtained with hearing aid amplification is to use directional microphone systems [9, 10]. These work by amplifying sounds coming from one direction more than sounds from other directions, thereby reducing interfering noise that arises from a different location than the desired sound and providing an SNR benefit. Directionality is the only hearing aid technology, apart from amplification itself, that has been shown to improve speech recognition in noisy situations [9, 10].

3. Natural directivity and hearing in noise

People with normal hearing use naturally provided directional and spatial perception cues to hear in both quiet and noisy environments. It is useful to understand how this is accomplished as directional microphone systems may interfere with, or conversely, attempt to preserve or replicate these natural perceptual advantages.

Listening with two ears (binaural hearing) compared to with only one ear (monaural hearing), has benefits that arise from several monaural and binaural cues that contribute to an improved ability to hear in noise [11, 12].

Sound from each ear creates a cohesive auditory image in the central auditory system of the surroundings in terms of the number, distance, direction, and orientation of sound sources, and the amount of reverberation [3]. Binaural hearing provides perceptual advantages in terms of localization of sound, an increase in loudness, noise suppression effects, and improved speech clarity and sound quality. Binaural hearing enables us to selectively attend to something of specific interest, like a single voice among many talkers [2].

3.1 Localization via binaural and monaural cues

One thing that listening with two ears helps us to do is to determine which direction sound comes from. This is because the sound arriving at each ear will be different in terms of loudness, time of arrival, and spectral shape, and our brains can decode these cues.

Sound originating closer to one ear will reach that ear sooner and with higher intensity than the other more distant ear. Low-frequency sound has long wavelengths that are longer than the curved distance between the two ears and will bend around the head, maintaining the intensity of the sound between the ears. However, sound originating on one side of the head will arrive at the ear on that side before it reaches the ear on the other side. This difference in time of arrival between the ears is called the interaural time difference (ITD) and is the most salient cue for determining whether sound comes from the right or left side.

High-frequency sound has short wavelengths that are shorter than the dimensions of the head resulting in high-frequency sound being diffracted by the head. This diffraction of high-frequency sound results in more intense sound in the ear on the side of the head closest to the sound and an attenuation of sound going around the head to the other ear, with the latter being a phenomenon known as the “head shadow effect”. This difference in intensity of sound between the two ears is called the interaural level difference (ILD) [12]. Cues for ITD are most efficient at frequencies 1500 Hz and below, while cues for ILD are most pronounced above 1500 Hz [13]. The ITD and ILD cues are binaural cues that help with localizing which side a sound is coming from (lateralization).
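The relationship between frequency, wavelength, and head size described above can be checked with a few lines of Python. This is only a rough sketch: the speed of sound (343 m/s) and the head width (0.18 m) are assumed round values, and the simple comparison ignores the curved path around the head.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C
HEAD_WIDTH_M = 0.18         # assumed approximate adult head width


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength of a sound wave in air for the given frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz


for frequency_hz in (250, 500, 1500, 4000, 8000):
    wavelength = wavelength_m(frequency_hz)
    relation = "longer than" if wavelength > HEAD_WIDTH_M else "shorter than"
    print(f"{frequency_hz:>5} Hz: {wavelength * 100:6.1f} cm, {relation} the assumed head width")
```

Under these assumptions, wavelengths become comparable to the head dimensions in the region of 1500 to 2000 Hz, which is in line with the transition between ITD-dominated and ILD-dominated localization noted above.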

The main cues for localizing sound in terms of elevation (up-down localization) and in the front or back are provided by diffraction patterns of sound striking the head, pinnae, and upper body [11]. High-frequency sound is boosted by the pinnae, head, and upper body when arriving from the front, and is attenuated when arriving from behind. Although this spectral shaping of sound is a monaural cue, listeners require both monaural and binaural cues to localize correctly [2, 14].

The cues upon which sound localization is based (i.e., ITDs, ILDs, and spectral shaping) also enable spatial hearing, which is the ability to localize and externalize sounds in terms of direction and distance. Spatial hearing provides a broader sense of the environment, thereby helping segregate sounds to choose what to focus on [2, 11, 12].

People with normal hearing use the redundancy in these binaural and monaural cues. When they are in more difficult listening conditions where some of the cues are masked, they can still make use of those that are not. For instance, a low-frequency noise “covering up” the ITD cues of a desired sound can easily be compensated for by the high-frequency ILD cues. People with hearing loss usually cannot take advantage of this redundancy as easily [12, 15].

3.2 Hearing in noise

When in noise, a person can hear speech more effectively with two ears than with one because of the ability to localize sound, but also because of the head shadow effect, binaural redundancy, and binaural squelch. These binaural cues become advantageous when people leverage them to achieve their listening goals in noise, even though this is somewhat unconscious.

Binaural squelch refers to the auditory system’s ability to employ ITD and ILD cues to spatially separate competing sounds and to attend to the ear with the better SNR [11].

Binaural redundancy is the advantage of receiving the same signal at both ears. When the same signal is received at both ears the treatment of information is more sensitive to small differences in intensity and frequency, and speech recognition is improved in the presence of noise. Binaural redundancy includes binaural loudness summation, where the loudness of a sound is greater if it is heard with two ears as opposed to with only one [11].

Binaural listening can be described in terms of two broad strategies: the “better ear strategy” and the “awareness strategy” [16]. The better ear strategy describes the advantage of one ear having a better SNR than the other ear in noise, due to the diffraction pattern from the head that leads to a different SNR in each ear when the desired signal and noise reach the head from different directions. People using the better ear strategy will position themselves relative to the desired sound to maximize the audibility of that sound, and they will rely on the ear with the best representation or best SNR of that sound [2, 11]. The combined directivity characteristics of the two ears form a perceptually focused beam that can be taken advantage of depending on the desired sound’s location. Therefore, if at least one ear has a favorable SNR, then the auditory system will take advantage of it.

The awareness strategy is an extension of the better ear strategy. This strategy includes the all-around aspects of binaural listening that allow a person to remain connected and aware of the surrounding soundscape when the head shadow effect improves the SNR in one of the two ears. Due to the geometric location of the two ears on the head, the brain can either use the head shadow to enhance the sound of interest or make the head acoustically “disappear” from the sound scene so that the person can attend to sounds all around.

Although they may be experienced to a lesser degree, binaural hearing advantages also exist in the presence of peripheral damage to the auditory system. Bilaterally-fitted hearing aids can maintain and enhance binaural hearing advantages by making auditory cues audible [2, 15]. However, this is provided that the way the sound is collected and processed by the hearing aids gives the appropriate input for the brain to make use of the cues. In other words, hearing aids can support binaural hearing advantages, but they can also interfere with them.

4. How directionality is quantified

Directional microphone systems help address the most fundamental problem of people with hearing loss, i.e., understanding speech when there are multiple speakers or when in background noise. These systems form a directional beam within which sounds are amplified more than sounds from other directions, thereby providing a better SNR compared to when the hearing aid amplifies sounds from all directions equally. As a prerequisite to understanding the operating principle of directional microphone systems and the factors that affect their performance, it makes sense to first discuss how performance is measured and represented.

How well a directional microphone system amplifies sounds from one direction while attenuating sounds from other directions can be quantified electro-acoustically [17] and is usually illustrated in a polar pattern. Polar patterns are displayed on a circular graph showing the entire 360° sensitivity response of the system, with 0° marking the frontward-facing direction. When referring to a directional microphone system’s pickup pattern, sound not coming from directly in front of the microphone (0°) is referred to as off-axis. Greater attenuation equates to less sound being picked up by the directional microphone system. An omnidirectional (non-directional) microphone is equally sensitive to sounds from all directions, so its polar pattern has the shape of a circle [2]. Directional microphone systems can provide an unlimited number of different polar patterns. The polar pattern can be unchangeable (“fixed”) or flexible, adapting between multiple polar patterns depending on the listening environment. Furthermore, different polar patterns will be evident for different frequencies for any given directional microphone system. Figure 1 shows the most prototypical polar patterns, each drawn as a bold black line. From the left, the first is an omnidirectional polar pattern, which is equally sensitive to sounds from all directions (360°). The second is a cardioid polar pattern, which shows excellent sensitivity to the front (0°), reduced sensitivity to the sides (90° and 270°), and minimal sensitivity to the rear (180°). The third is a super-cardioid polar pattern, which shows excellent sensitivity to the front (0°), reduced sensitivity to the sides (90° and 270°), and some sensitivity to the rear (180°). The fourth is a hyper-cardioid polar pattern, which is very much like the super-cardioid but with somewhat more sensitivity to the rear (180°) and less to the sides. The last is a bi-directional polar pattern, which has the same sensitivity to the front (0°) as to the rear (180°), with reduced sensitivity to either side.

Figure 1.

Polar patterns (in dB), from left to right: omnidirectional, cardioid, super-cardioid, hyper-cardioid, and bi-directional.
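For readers who want to reproduce idealized versions of these patterns, the sketch below uses the common textbook first-order model, in which the free-field sensitivity at angle θ is a + (1 − a)·cos θ. The model and the values of the parameter `a` are standard textbook assumptions rather than values taken from this chapter, and real hearing aids worn on the head will deviate from them.

```python
import math

# Textbook first-order pattern parameters (assumed idealized values).
PATTERNS = {
    "omnidirectional": 1.0,
    "cardioid": 0.5,
    "super-cardioid": 0.366,
    "hyper-cardioid": 0.25,
    "bi-directional": 0.0,
}


def sensitivity(a: float, angle_deg: float) -> float:
    """Linear free-field sensitivity of a first-order pattern, 1.0 at 0 degrees."""
    return a + (1.0 - a) * math.cos(math.radians(angle_deg))


def sensitivity_db(a: float, angle_deg: float, floor_db: float = -60.0) -> float:
    """Sensitivity in dB relative to the front, limited to floor_db inside nulls."""
    magnitude = abs(sensitivity(a, angle_deg))
    if magnitude == 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(magnitude))


print(f"{'pattern':<16}{'0 deg':>8}{'90 deg':>8}{'180 deg':>9}")
for name, a in PATTERNS.items():
    front, side, rear = (sensitivity_db(a, angle) for angle in (0, 90, 180))
    print(f"{name:<16}{front:8.1f}{side:8.1f}{rear:9.1f}")
```

Evaluating `sensitivity_db` on a fine grid of angles and plotting the result on a polar axis gives curves of the kind shown in Figure 1.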

The polar pattern of a directional microphone system can be measured either in free space or in/on the ear of a person. When measured in free space, the polar patterns look like those shown in Figure 1. The head and pinna affect the intensity of sound reaching the ear canal as described above. The acoustic effects of the head shadow and pinna also affect the intensity of the sound reaching the hearing aid microphones. Therefore, the polar patterns of a directional microphone system as worn on the head will differ from the polar patterns measured in free space. The head and pinna attenuate sound when they lie between the sound source and the directional microphone system (the head shadow effect) and boost sound when the directional microphone system is on the side of the head nearest the sound source. The attenuation and boosting effects of the head and pinna increase with frequency [2].

Another way of quantifying directionality is by use of the directivity index (in dB). The directivity index shows a directional microphone system’s sensitivity to sounds from the front compared to its mean sensitivity to sounds from all other directions [17]. This mean can be taken over directions in a two-dimensional (2D) horizontal plane (a circle) or in three-dimensional (3D) space (a sphere) [2]. The directivity index can also be weighted according to a speech importance function called the Articulation Index. This is intended to provide a better prediction of how directivity will influence actual speech recognition in noise performance. The directivity index, with and without the Articulation Index weighting, has been found to correlate well with speech recognition in noise performance, although the weighting function does not appear to increase the accuracy of predicting perceptual benefit [17].
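A minimal numerical sketch of the unweighted directivity index follows. It assumes an idealized free-field pattern that is symmetric about the front-back axis, uses the textbook first-order model a + (1 − a)·cos θ as an example, and averages power over all directions (the usual convention, which differs only slightly from averaging over all directions other than the front). The function names are illustrative.

```python
import math


def first_order(a: float):
    """Return a textbook first-order pattern s(theta) = a + (1 - a) * cos(theta)."""
    return lambda theta: a + (1.0 - a) * math.cos(theta)


def directivity_index_db(pattern, space: str = "3d", steps: int = 20000) -> float:
    """Front power relative to mean power over a circle ("2d") or sphere ("3d")."""
    d_theta = math.pi / steps
    weighted_power = 0.0
    weight_sum = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta  # midpoint rule over 0..pi
        # On a sphere, directions near the sides cover more area (sin weighting).
        weight = math.sin(theta) if space == "3d" else 1.0
        weighted_power += weight * pattern(theta) ** 2
        weight_sum += weight
    mean_power = weighted_power / weight_sum
    return 10.0 * math.log10(pattern(0.0) ** 2 / mean_power)


for name, a in (("omnidirectional", 1.0), ("cardioid", 0.5), ("hyper-cardioid", 0.25)):
    di_2d = directivity_index_db(first_order(a), "2d")
    di_3d = directivity_index_db(first_order(a), "3d")
    print(f"{name:<16} 2D: {di_2d:5.2f} dB   3D: {di_3d:5.2f} dB")
```

An Articulation Index weighted version would compute this value per frequency band and combine the bands using speech importance weights; those weights are not given here, so that step is left out.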

A directional microphone system’s directionality can also be quantified by its front-to-back ratio. This measure shows the system’s sensitivity to sounds from in front (0°) relative to sounds from directly behind (180°). This measure captures less information about the directional characteristics of the system than polar patterns or directivity index. It is most useful in determining whether a system is directional or not, rather than in describing its characteristics.
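As a small illustration, the front-to-back ratio can be computed from just two sensitivity measurements. The function name and the example values below are assumptions for illustration only.

```python
import math


def front_to_back_ratio_db(front_sensitivity: float, rear_sensitivity: float) -> float:
    """Sensitivity at 0 degrees relative to 180 degrees, in dB (linear inputs)."""
    if rear_sensitivity == 0:
        return math.inf  # a perfect rear null gives an unbounded ratio
    return 20.0 * math.log10(abs(front_sensitivity) / abs(rear_sensitivity))


print(front_to_back_ratio_db(1.0, 1.0))  # equal front and rear sensitivity (omnidirectional)
print(front_to_back_ratio_db(1.0, 0.5))  # rear at half the front sensitivity
print(front_to_back_ratio_db(1.0, 0.0))  # ideal rear null
```

Because only two directions enter the calculation, two systems with very different polar patterns can share the same front-to-back ratio, which is why the measure says little about the overall directional characteristics.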

Perceptual or behavioral testing is a clinical way to measure directionality, and this is very commonly reported as an outcome in the literature on directional microphone systems. Adaptive tests that determine an SNR at which listeners achieve a certain performance level, such as 50% correct recognition, are the most efficient approach and allow data from groups of listeners to be easily combined.
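The idea behind such adaptive tests can be sketched with a simple one-up, one-down staircase, which converges on the SNR giving 50% correct. Everything in this example is a simplifying assumption: the listener is simulated with a logistic psychometric function, the "true" 50% points of +2 dB (omnidirectional) and −2 dB (directional) are hypothetical, and clinical tests use their own scoring and step rules.

```python
import math
import random


def simulated_listener(snr_db, srt_db, slope_per_db, rng):
    """Return True if the simulated listener responds correctly at this SNR."""
    p_correct = 1.0 / (1.0 + math.exp(-slope_per_db * (snr_db - srt_db)))
    return rng.random() < p_correct


def adaptive_srt(srt_db, start_snr_db=10.0, step_db=1.0, trials=300,
                 discard=50, slope_per_db=0.8, seed=1):
    """Estimate the SNR for 50% correct with a one-up, one-down staircase."""
    rng = random.Random(seed)
    snr = start_snr_db
    track = []
    for _ in range(trials):
        track.append(snr)
        if simulated_listener(snr, srt_db, slope_per_db, rng):
            snr -= step_db  # correct response: make the next trial harder
        else:
            snr += step_db  # incorrect response: make the next trial easier
    kept = track[discard:]  # ignore the initial approach to the threshold
    return sum(kept) / len(kept)


omni_srt = adaptive_srt(srt_db=2.0, seed=1)          # hypothetical omnidirectional condition
directional_srt = adaptive_srt(srt_db=-2.0, seed=2)  # hypothetical directional condition
print(f"Estimated directional benefit: {omni_srt - directional_srt:.1f} dB")
```

The directional benefit is then reported as the difference between the two estimated SNRs, a quantity in dB that can be averaged directly across listeners.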

5. Directional microphone systems

Directional hearing aids appeared on the market in 1971 [17]. Those devices were equipped with a directional microphone, which is a single microphone with a directional polar pattern. Hearing aid directionality has evolved since and directionality is today offered via two-microphone and four-microphone arrays. Various terms are used to describe these systems. For simplicity, they are often referred to as “directional microphones” even though the systems are built from multiple omnidirectional microphones.

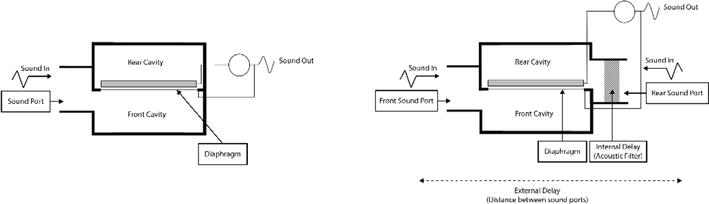

The basic principles of how omnidirectional and directional microphones work will be explained before discussing today’s directional microphone systems, as today’s technologies are based on the same principles. In an omnidirectional microphone, sound waves enter through a single sound port and reach one side of a very thin and flexible diaphragm within the microphone, as shown in Figure 2 (left panel). The sound waves cause the diaphragm to deflect and, by different means depending on the microphone type, the deflections are converted into an analogous electrical signal. An omnidirectional microphone is, as previously mentioned, equally sensitive to sounds from all directions when in free space, i.e., when not worn on the head by a hearing aid user.

Figure 2.

Left: Illustration of an omnidirectional microphone. Right: Illustration of a directional microphone.

A microphone can be made directional (more sensitive to sounds from one direction than from other directions) by having two sound ports, a front and a rear sound port, that feed sound to opposite sides of the diaphragm, as shown in Figure 2 (right panel). Since it is assumed that hearing aid users will be facing the sound they want to hear, directional microphones are positioned in the hearing aid to be most sensitive to sound coming from the front. Sound arising from the rear will enter the rear sound port first, and the front sound port shortly after. The time it takes sound to travel between the two sound ports is called the “external delay”. An acoustic filter added to the microphone design delays the sound entering the rear sound port; this is called the “internal delay”. Depending on the wavelength of the sound and on the internal and external delays, the sound striking the diaphragm from each side will either add or subtract. If the sound subtracts, the deflection of the diaphragm will be smaller or perhaps it will not deflect at all, and a less intense signal or no signal will be transduced into an electrical signal. Acoustic filters can be chosen that optimize subtraction (phase cancelation) of sound arriving from the rear, resulting in different polar patterns. Both delays are fixed in a directional microphone. The external delay is determined by the sound port spacing, which is fixed in the physical design. The internal delay is determined by a physical acoustic filter with a specific delay. A directional microphone can consequently provide only one unchangeable directional polar pattern, i.e., its directionality is fixed.
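The interaction of the two delays can be made concrete with an idealized delay-and-subtract model. The sketch below assumes a plane wave of unit amplitude in free space, a port spacing of 10 mm, and a speed of sound of 343 m/s; these values and the function names are illustrative assumptions, not specifications of any real microphone.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed
PORT_SPACING_M = 0.010      # assumed port spacing of 10 mm


def external_delay_s(port_spacing_m: float) -> float:
    """Time for sound to travel between the two ports along the front-back axis."""
    return port_spacing_m / SPEED_OF_SOUND_M_S


def response_magnitude(freq_hz, angle_deg, port_spacing_m, internal_delay_s):
    """Magnitude of front-port sound minus delayed rear-port sound (0 deg = front)."""
    tau_e = external_delay_s(port_spacing_m)
    # Arrival lag of the rear path relative to the front path for this direction.
    total_delay = internal_delay_s + tau_e * math.cos(math.radians(angle_deg))
    return abs(1.0 - cmath.exp(-2j * math.pi * freq_hz * total_delay))


tau_e = external_delay_s(PORT_SPACING_M)
print(f"External delay: {tau_e * 1e6:.1f} microseconds")

# With the internal delay equal to the external delay, rear sound cancels.
for angle in (0, 90, 180):
    magnitude = response_magnitude(1000.0, angle, PORT_SPACING_M, tau_e)
    print(f"{angle:>3} deg: {magnitude:.3f}")
```

With equal delays, sound from the rear reaches both sides of the diaphragm at the same instant and cancels, while sound from the front does not, which corresponds to a cardioid pattern.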

5.1 Directional microphone arrays

Today, directionality in hearing aids is offered via microphone arrays, also called beamformers, that can be split into three categories as described below. Pertaining to directionality, the term beamformer is used to indicate that the microphone system in the hearing aid is directional. A beamformer is a type of microphone array used in hearing aids in which directional sensitivity is increased significantly in one direction, and reduced in all other directions, hence forming a “beam” in the direction of the greatest sensitivity [12, 18]. The following microphone array terminology will be used throughout this text for convenience:

Two-microphone arrays: Consisting of two omnidirectional microphones located within the body of one hearing aid. Also referred to as “dual or twin microphone systems”, unilateral beamformers, and first-order subtractive directional microphones [2, 19, 20].

Four-microphone arrays: Consisting of the output from all four microphones of a bilaterally-fitted pair of hearing aids, each containing two microphones. Also referred to as bilateral beamformers [2, 19, 20]. This type of system requires a wired or wireless connection between the hearing aids to allow exchange of the audio signals at the two ears.

Multi-microphone arrays: Consisting of three or more microphones on one device or multiple microphones placed on optical glasses. Also referred to as second-order directional microphones [2, 19, 20, 21]. Multi-microphone arrays are not currently used in hearing aids and will for this reason not be discussed further here.

5.1.1 Two-microphone arrays (unilateral beamformers)

The most common method of providing directionality in hearing aids is to use two omnidirectional microphones located within the body of the hearing aid. These two microphones are commonly referred to as the front and rear microphone and they serve an analogous function to the front and rear sound ports of a traditional directional microphone. When in a frontward-facing directional polar pattern, the signal from the rear microphone is subtracted from that of the front microphone (i.e., combined out of phase) to pick up sound from the front of the hearing aid user while attenuating sound from the rear. The areas of greatest sound attenuation are often referred to as “nulls”. As with a traditional directional microphone, the polar pattern of the two-microphone array is determined by the ratio between the external delay and the internal delay. The external delay is determined by the port spacing between the two microphones, while the internal delay is applied in the digital signal processing [19]. The delay ratio defines how directional the two-microphone array will be, for instance whether it will have a cardioid or a hyper-cardioid pattern [2]. The external delay is fixed as it is dictated by the distance between the two microphones and cannot be adjusted in real time. The internal digital delay can be adjusted to provide different polar patterns. The polar pattern of a two-microphone array is, in other words, flexible.
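How the delay ratio sets the pattern can be sketched under the same idealized free-field delay-and-subtract model: cancellation is complete where the internal delay exactly offsets the direction-dependent external delay, so the null lies at the angle whose cosine equals minus the ratio of internal to external delay. The function names and example ratios are illustrative assumptions.

```python
import math


def null_angle_deg(internal_delay: float, external_delay: float) -> float:
    """Angle from the front at which the idealized array output is fully canceled."""
    ratio = internal_delay / external_delay
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("this sketch covers delay ratios from 0 to 1 only")
    return math.degrees(math.acos(-ratio))


def pattern_parameter(internal_delay: float, external_delay: float) -> float:
    """Parameter a of the low-frequency approximation a + (1 - a) * cos(theta)."""
    return internal_delay / (internal_delay + external_delay)


# Delays are given in arbitrary but identical units; only their ratio matters.
for label, internal, external in (
    ("bi-directional", 0.0, 1.0),
    ("hyper-cardioid", 1.0, 3.0),
    ("cardioid", 1.0, 1.0),
):
    angle = null_angle_deg(internal, external)
    a = pattern_parameter(internal, external)
    print(f"{label:<15} null at +/-{angle:6.1f} deg, a = {a:.2f}")
```

Because the external delay is fixed by the hardware, changing the digital internal delay is what moves the null and thereby changes the polar pattern.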

5.1.2 Four-microphone arrays (bilateral beamformers)

Many hearing aids can exchange data and stream audio between a bilaterally-fitted pair of hearing aids. This has led to expanding the types of microphone arrays available in hearing aids. One such array is a four-microphone array, which utilizes all four microphones available on a bilaterally-fitted pair of hearing aids. The two hearing aids exchange audio information, which allows summing and/or subtraction of the audio signals between both hearing aids before combining the sound into one monaural output. The monaural output is then delivered to both hearing aids. Compared to a pair of hearing aids using two-microphone arrays independently, a four-microphone array can thus provide an even narrower polar pattern (beam).

Numerous methods for processing in four-microphone arrays exist and have been discussed in the literature [2, 19, 20, 22]. The most basic method is similar to that of two-microphone arrays, with the output of each independent two-microphone system treated similarly to the output from one microphone in a two-microphone array. By manipulating the internal delays in this system, the direction of maximum sensitivity is determined, along with potential frequency-dependent and adaptive behavior.

5.1.3 Fixed and adaptive arrays

Two-microphone and four-microphone arrays can duplicate the performance of a traditional directional microphone and provide only one directional polar pattern (fixed directionality), and/or provide flexible directional polar patterns that adapt to the acoustical environment (adaptive directionality). The adaptation is based on parameters derived from the hearing aid system’s environmental analysis and classification [23]. The adaptive behavior of the system is intended to account for noise backgrounds that consist of noise sources that are not diffuse and/or not stationary. The adaptation will direct the nulls to the specific noise sources within the limitations of the system. A further distinction is that a given system may be broadband adaptive or show frequency-specific behavior. A broadband adaptive system can be effective in canceling a moving noise source. For example, such a system could track and reduce the sound of a car passing from right to left behind a hearing aid user. In a frequency-specific system, different sets of delays apply depending on frequency; if background noises have different frequency content and are spatially separated, they can be effectively canceled simultaneously. The benefit of the frequency specificity is that various kinds of both stationary and moving noises can each potentially be reduced maximally. For example, if the hearing aid user was in a café with a coffee grinding machine running off to the left, a higher pitched sound of a milk steamer directly behind, and a phone ringing off to the right, a frequency-specific system could cancel all of these noises simultaneously.
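A toy illustration of frequency-specific adaptation is given below. It is not how any particular hearing aid works: real systems estimate what to cancel from the microphone signals themselves, typically by minimizing output power, whereas this sketch is simply told the noise direction in each band and searches for the internal-to-external delay ratio that minimizes the idealized free-field response in that direction. The microphone spacing, band frequencies, and noise angles are all hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed
MIC_SPACING_M = 0.010       # assumed microphone spacing of 10 mm
TAU_E = MIC_SPACING_M / SPEED_OF_SOUND_M_S


def response(freq_hz: float, angle_deg: float, delay_ratio: float) -> float:
    """Idealized delay-and-subtract magnitude for a plane wave from angle_deg."""
    total_delay = TAU_E * (delay_ratio + math.cos(math.radians(angle_deg)))
    return abs(1.0 - cmath.exp(-2j * math.pi * freq_hz * total_delay))


def best_delay_ratio(freq_hz: float, noise_angle_deg: float, steps: int = 100) -> float:
    """Grid-search the delay ratio (0..1) that best cancels noise from one direction.

    Restricting the ratio to 0..1 keeps the null in the rear hemisphere, so
    sound from the front is never the target of cancellation.
    """
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda ratio: response(freq_hz, noise_angle_deg, ratio))


# Hypothetical dominant noise direction per frequency band (Hz -> degrees).
noise_by_band = {500: 110, 2000: 180, 4000: 225}

for band_hz, noise_angle in noise_by_band.items():
    ratio = best_delay_ratio(band_hz, noise_angle)
    print(f"{band_hz:>4} Hz band: noise at {noise_angle} deg -> delay ratio {ratio:.2f}")
```

Each band ends up with its own delay ratio, and therefore its own polar pattern, which is the essence of frequency-specific behavior; a broadband adaptive system would instead apply a single ratio across all bands and update it over time.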

5.1.4 Common solutions to drawbacks in two-microphone and four-microphone arrays

All directionality, from the basic, fixed directional microphone to those with multiple arrays, will feature a loss in low-frequency amplification. Low-frequency sounds have long wavelengths relative to the spacing between each microphone, resulting in similar phase alignment of sound regardless of direction of arrival. This results in greater subtraction of low-frequency sounds coming from any direction. This effect results in a predictable roll-off in the low frequencies of 6 dB/octave [24]. The loss in low-frequency amplification has typically been managed by providing additional low-frequency amplification to the directional microphone output. While this method provides make-up gain in the low frequencies, it can also amplify internal noise to a degree that becomes bothersome for the hearing aid user in quiet environments [2]. A different solution to this issue is to provide band-split directionality. Band-split directionality is achieved by filtering the audio coming from the front of both microphones on each hearing aid into separate high-frequency and low-frequency channels; these separate frequency channels are then fed through individual delays. This allows the hearing aid to provide different delay times, and thus different polar patterns, to each frequency channel. Through band-split directionality, the hearing aid can provide an omnidirectional polar pattern to the low frequencies, while maintaining directivity in the high frequencies. Although this does not provide any directional attenuation of sounds that are considered noise in the low frequencies, it has the added effect of simulating the natural directivity of normal hearing [2]. It also has the advantage of preserving ITDs, which are an important binaural hearing cue. In addition, it maintains similar sound quality to a full-band omnidirectional response, which makes it possible to switch microphone modes automatically without the hearing aid user noticing any distracting change in the sound. Finally, band-split directionality bypasses the issue of increased internal noise from the increased low-frequency gain that is found in hearing aids applying directionality to the low frequencies.
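The 6 dB/octave low-frequency roll-off described above can be checked numerically. The sketch below assumes an idealized cardioid delay-and-subtract pair (internal delay equal to the acoustic travel time between the microphones) and a 10 mm spacing; both are illustrative assumptions, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def on_axis_gain_db(freq_hz, spacing_m):
    """Frontal sensitivity of an idealized cardioid pair, in dB re one microphone."""
    # For frontal sound the external and internal delays add up to 2 * d / c.
    total_delay = 2.0 * spacing_m / SPEED_OF_SOUND
    # |1 - exp(-j * 2 * pi * f * T)| equals 2 * |sin(pi * f * T)|.
    magnitude = 2.0 * abs(math.sin(math.pi * freq_hz * total_delay))
    return 20.0 * math.log10(magnitude)


def rolloff_db_per_octave(freq_hz, spacing_m):
    """Change in frontal sensitivity when the frequency is doubled."""
    return on_axis_gain_db(2.0 * freq_hz, spacing_m) - on_axis_gain_db(freq_hz, spacing_m)


for f in (125.0, 250.0, 500.0):
    print(f, round(on_axis_gain_db(f, 0.010), 1), round(rolloff_db_per_octave(f, 0.010), 2))
```

At low frequencies the sine is nearly proportional to frequency, so each halving of frequency costs close to 6 dB of sensitivity; this is the loss that make-up gain or band-split processing has to address.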

Behind-the-ear (BTE) and receiver-in-the-ear (RIE) hearing aid styles have a disadvantageous microphone location above and behind the pinna compromising spectral cues in these hearing aid styles. Many two-microphone and four-microphone arrays use a pinna restoration response to compensate for this. Pinna restoration mimics the natural front-facing directionality of an average pinna in the higher frequencies to compensate for the disadvantageous microphone location [18]. A pinna compensation algorithm has been shown to effectively reduce the number of front/back confusions compared with traditional omnidirectional microphones [25, 26]. In a review of studies on pinna restoration, Xu and Han suggested that individual differences in real-world performance with pinna restoration indicate that some hearing aid users may experience a localization benefit relative to omnidirectionality while others may not [27].

5.1.5 Applying directionality in daily life

Directionality has many benefits for hearing aid users, but it is not appropriate for all listening situations, and therefore full-time use of directionality is not recommended [17, 28]. It has been reported that hearing aid users prefer an omnidirectional setting in quiet environments, and while directionality is helpful in noise, it is most beneficial when sounds of interest are in front of the hearing aid user and spatially separated from the unwanted noise, which is not always the case [17, 29]. The dilemma that directionality can improve speech recognition in noise but has numerous potential drawbacks contributed to limited fitting of directional microphones in hearing aids until the mid-1990s. Another factor was that directionality was incompatible with the small styles of in-the-ear (ITE) hearing aids that were most popular in the 1980s and 1990s. Today, most styles of hearing aids can and do house directional microphone systems, and flexibility in programming of hearing aids and in user control of hearing aid parameters provides many options for the hearing aid user to access directional benefits.

The simplest option from a technical point-of-view is to give the hearing aid user manual control of the directional setting in their hearing aids. In this case, the hearing aids have two or more settings where at least one contains directionality, and the hearing aid user can select the desired setting via a button on the hearing aid, a remote control, or a smartphone app. This solution requires the hearing aid user to recognize when directionality might be beneficial, activate the appropriate setting, and manage various aspects of their listening environment to maximize benefit. This could include, for example, asking their conversational partner to sit or stand in a well-lit area facing the unwanted noise sources so that the hearing aid user can face their partner with their back to the noise. The hearing aid user must also remember to deactivate the directional setting when it is no longer relevant.

Another option is for directionality to be activated automatically in listening conditions where it might be of benefit. This requires that the hearing aid has a way of identifying relevant acoustic environments as well as a strategy for when and how to apply the directional settings. A more detailed discussion of this concept follows.

5.1.6 Environmental analysis and classification

Hearing aids have automatic functionality that allows for changing of directional mode based on the environment. Prior to discussing the automatic aspects of directionality, it is prudent to summarize how hearing aids analyze and categorize different environments. Hearing aids determine which type of acoustic environment they are in through environmental classification algorithms, or more simply environmental classifiers.

While it is difficult to discuss specifics of environmental classifiers due to proprietary aspects of hearing aid algorithms, similarities in environmental classifiers exist across manufacturers [23]. Environmental classifiers identify pre-determined acoustic environments (e.g., quiet, noise, speech and noise, etc.) and trigger automatic changes in hearing aid settings and features in order to adjust to a given environment. Environmental classifiers can drive automatic changes in hearing aid features such as noise reduction, wind noise reduction, and directionality. Because of their role in automatic changes in hearing aids, environmental classifiers are one of the most important components of modern adaptive hearing aids.

Environmental classifiers determine environments by analyzing the acoustic features of sound in the environment, such as level or intensity, spectral shape, and modulation depth and rate [2]. Directional microphone systems can also be part of the environmental analysis, as the output of the system can be used to detect the direction of arrival of speech as well as to estimate the sound level coming from the front or back hemisphere. The hearing aid continually analyzes the environment and updates the classification as the environment changes or the hearing aid user moves to a new environment (e.g., from noise to quiet).

5.1.7 Automatic control of directionality

Regardless of the specific directionality, i.e., fixed or adaptive, two- or four-microphone arrays, most modern hearing aids include a steering algorithm that switches among two or more microphone modes based on environmental analysis and classification. The main purpose of an automatic steering algorithm is to make wearing the hearing aids easier for the hearing aid user, and to maximize opportunities for benefit of directionality. At a minimum, such algorithms include omnidirectional on both ears and directional on both ears when two hearing aids are fitted. Some of these algorithms work independently per device (and therefore also work on unilaterally-fitted hearing aids), while others rely on exchange of data between a pair of bilaterally-fitted hearing aids to determine the optimum microphone mode for the environment. Automatic switching of microphone modes is most commonly designed to make gradual changes even when the environment changes quickly, as hearing aid users are bothered by sudden and noticeable changes in the state of their hearing aids. This makes the hearing aids pleasant to wear but could theoretically reduce directional benefit momentarily.
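One simple way to picture gradual mode switching is as a smoothed mix between the omnidirectional and directional outputs. The sketch below uses first-order exponential smoothing, which is an assumption made for illustration; the source does not specify how any manufacturer implements the transition, and the frame length and time constant are hypothetical values.

```python
import math


def smooth_mode_weight(targets, frame_s, time_constant_s):
    """Return per-frame directional mix weights (0 = omni, 1 = directional).

    targets holds the mode requested by the classifier in each frame;
    the weight glides toward it instead of jumping.
    """
    alpha = 1.0 - math.exp(-frame_s / time_constant_s)
    weight = 0.0  # assumes the device starts in omnidirectional mode
    weights = []
    for target in targets:
        weight += alpha * (target - weight)
        weights.append(weight)
    return weights


# The classifier requests directional mode for one second (100 frames of 10 ms).
weights = smooth_mode_weight([1.0] * 100, frame_s=0.01, time_constant_s=1.0)
print(round(weights[0], 3), round(weights[-1], 3))
```

With a one-second time constant the mix is only about two-thirds of the way to fully directional after one second, which illustrates the momentary reduction in directional benefit mentioned above.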

The automatic control feature in a hearing aid is based on an audiological rationale, and differences in rationales result in different automatic switching behavior. A rationale for applying directionality can be very simple and trigger straightforward behavior, or it can be quite complex. The rationale is currently implemented using reactive Artificial Intelligence (AI), where an algorithm is programmed to make task-specific decisions about how to apply directionality and sometimes other features such as gain and noise reduction settings. As technology advances, it is expected that AI-controlled directionality and other noise-reducing features will incorporate input from sensors as well as the hearing aid microphones, and that these algorithms will learn from past experience [30, 31]. Table 1 summarizes some examples of rationales and system behavior for automatically applied directionality in order of increasing complexity. The most complex strategy is based on a binaural hearing model and combines the strengths of directionality in improving SNR and compensating for lost pinna-related cues with the binaural hearing abilities of the hearing aid user [18]. The microphone modes that are applied support listening strategies discussed earlier such as the better ear strategy and the awareness strategy.

| Rationale | System behavior | Potential disadvantage |
|---|---|---|
| Directionality is needed when noise is present because it will improve SNR for speech in front and reduce annoyance of noise even if speech is not present | Above a certain input level, directionality is applied; below that level, omnidirectionality is applied | Does not account for listening goals of the hearing aid user. Lack of audibility for important sounds in the rear; sound quality disadvantages; wind noise |
| Directionality is needed when noise and speech are both present because benefit will be most likely if there is a known signal of interest | If speech is detected and the input exceeds a certain level, directionality is applied; otherwise, omnidirectionality is applied | Same as above |
| Directionality is needed when speech is present in front of the hearing aid user and there is also noise present; the greater specificity of listening condition will maximize benefit | If speech is detected in front of the hearing aid user and the input exceeds a certain level, directionality is applied; otherwise, omnidirectionality is applied | Same as above |
| The brain requires specific information to support natural ways that people listen in different types of environments. People rely on different listening strategies that are dependent on the acoustic environment, including spatial hearing cues, improving SNR, and maintaining awareness of surroundings | Depending on presence and direction of arrival of speech, presence of noise, and overall input levels, one of multiple microphone modes is applied. The specific mode will either preserve cues for spatial hearing and sound quality, balance improved SNR with access to surroundings, or maximize SNR | Slow mode switching behavior could potentially cause momentary reduced access to important off-axis sounds |

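Table 1.

Rationales and system behavior for automatically applying directionality in order of increasing complexity.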
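The first three rationales in Table 1 are simple enough to express as decision rules. The sketch below is illustrative only: the 65 dB threshold is a hypothetical placeholder for the "certain input level" in the table, and the function names are not taken from any product.

```python
DIRECTIONAL = "directional"
OMNI = "omnidirectional"


def mode_by_level(input_level_db, threshold_db=65.0):
    """Rationale 1: apply directionality whenever the input level is high."""
    return DIRECTIONAL if input_level_db > threshold_db else OMNI


def mode_by_speech_and_level(input_level_db, speech_detected, threshold_db=65.0):
    """Rationale 2: also require that speech is detected."""
    if speech_detected and input_level_db > threshold_db:
        return DIRECTIONAL
    return OMNI


def mode_by_front_speech_and_level(input_level_db, speech_detected,
                                   speech_from_front, threshold_db=65.0):
    """Rationale 3: also require that the detected speech arrives from the front."""
    if speech_detected and speech_from_front and input_level_db > threshold_db:
        return DIRECTIONAL
    return OMNI


# Loud environment with a talker behind the user: the three rules disagree.
print(mode_by_level(72.0))
print(mode_by_speech_and_level(72.0, speech_detected=True))
print(mode_by_front_speech_and_level(72.0, speech_detected=True, speech_from_front=False))
```

Each added condition narrows the situations in which directionality is engaged, which is the increasing specificity the table describes.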

6. Clinical implications

6.1 Benefit

The benefit of directionality in hearing aids is well established. Directionality is the only hearing aid technology that has been shown to improve SNR in a way that significantly improves speech recognition in noisy situations where hearing aid users are listening to speech coming from in front of them with competing sound from other directions [9, 32]. This has been observed with two-microphone arrays [20, 32, 33, 34, 35, 36] and more recently, with four-microphone arrays. In addition, four-microphone arrays in beamforming mode have shown benefit over omnidirectional conditions in terms of rejecting stimulus from the side [20, 36, 37, 38, 39, 40].

Research has also shown some increased benefit using four-microphone arrays in beamforming mode compared to two-microphone arrays in beamforming mode. Picou and Ricketts [20] investigated two-microphone and four-microphone arrays in beamforming mode as compared to omnidirectional mode in a laboratory environment. The study investigated sentence recognition along with subjective responses (e.g., work, desire to control, willingness to give up, and tiredness). Results indicated both two-microphone arrays in beamforming mode and four-microphone arrays in beamforming mode significantly outperformed the omnidirectional mode in terms of sentence recognition, and there was a small, but significant improvement noted in the four-microphone array in beamforming mode over the two-microphone array in beamforming mode. Additionally, subjective benefit was noted regarding tiredness and desire to give up in the four-microphone array in beamformer mode over the two-microphone array in beamformer mode [20].

It is important to note that testing within a laboratory setting, despite the best of efforts, is not equivalent to real-world environments. Laboratory settings are frequently set up with statically positioned signal and noise stimuli, as opposed to real-world environments with signals and noises that move. Additionally, the varying acoustic environments and reverberations experienced in the real world are difficult to faithfully reproduce in a simulated laboratory environment. It has been found that, when real-world environments mimic those of a laboratory setting, directional microphone systems can provide similar real-world benefit; however, hearing aid users do not always find themselves in laboratory-like settings [2, 41]. Despite this, benefits from directionality in hearing aids are still reported by hearing aid users in real-world environments.

Hearing aid users spend time in a variety of listening environments, not all of which are optimal for directional microphone systems. Previous studies have shown that listeners encounter environments that can benefit from directional microphone systems (e.g., talker in front with noise to the sides and rear) only about one-third of the time [2, 42, 43]. This variation in listening environments is a strong argument for including automatic control of directionality in hearing aids. Automatic control of directionality provides benefit to hearing aid users in speech-in-noise environments, without the need for manual control by the hearing aid user [18, 42]. Automatic control of directionality can also be beneficial when a talker of interest is in a direction other than the front of the hearing aid user. This benefit has been observed with the use of a specific automatic four-microphone array that switches between omnidirectional and three different directional modes based on the listening environment. The four different modes are omnidirectional/omnidirectional (both hearing aids of a pair in omnidirectional mode), four-microphone array in beamformer mode, and two asymmetric modes in which one hearing aid is in an omnidirectional mode and the other is in a directional mode [44]. The asymmetric directionality modes help to compensate for hearing aid users’ environments in which the target signal is not directly from the front. When speech is detected from one side, the hearing aid on that side switches to an omnidirectional mode to allow access to the speech signal. Asymmetric directionality has been shown to improve speech recognition for off-axis sounds compared to two-microphone and four-microphone arrays in beamformer mode [41, 45]. Note that this asymmetric directional rationale helps mimic the natural benefit of better ear listening used by people with normal hearing.
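The four-mode behavior described above can be sketched as a small selection function. Only the asymmetric rule (the hearing aid on the speech side goes omnidirectional) is stated in the text; the conditions chosen here for the omnidirectional/omnidirectional and beamformer modes are assumptions made for illustration, and all names are hypothetical.

```python
OMNI = "omnidirectional"
DIRECTIONAL = "directional"
BEAMFORMER = "beamformer"


def select_modes(noisy, speech_direction):
    """Return (left_mode, right_mode) for a bilateral pair.

    speech_direction is 'front', 'left', 'right', or None when no speech is detected.
    """
    if not noisy or speech_direction is None:
        # Assumption: without noise, or without a speech target, stay omnidirectional.
        return (OMNI, OMNI)
    if speech_direction == "front":
        # Assumption: frontal speech in noise engages the four-microphone beamformer.
        return (BEAMFORMER, BEAMFORMER)
    if speech_direction == "left":
        # From the text: the hearing aid on the speech side switches to omnidirectional.
        return (OMNI, DIRECTIONAL)
    if speech_direction == "right":
        return (DIRECTIONAL, OMNI)
    raise ValueError("unknown speech direction: %r" % (speech_direction,))


print(select_modes(noisy=True, speech_direction="left"))
print(select_modes(noisy=True, speech_direction="front"))
```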

6.2 Directional limitations and considerations

There are multiple factors influencing the effectiveness of directional microphone systems, and it is important for both the hearing aid user and the hearing aid fitter to be knowledgeable about these factors to be able to optimize the effectiveness, and thereby benefit, of directionality. Factors that affect a directional microphone system’s effectiveness include implementation, listening environment, candidacy, hearing aid factors and knowledge about when not to use directional microphone systems.

6.2.1 Implementation of directionality

Implementation of microphone arrays in hearing aids is not without inherent drawbacks, the first of which is size. As mentioned previously, physical separation of microphones is a component of creating directionality in hearing aids. If the spacing between the two microphones is too small, sensitivity is reduced, because the phase difference between the signal hitting the front microphone and the rear microphone is reduced. The reduced phase difference results in less subtraction between the two microphones, and thus less directional sensitivity [2]. As low frequencies have longer wavelengths, this reduction in sensitivity due to small spacing between the microphones mostly impacts low frequencies [2, 24]. Conversely, too large a spacing can result in a reduction of frontal sensitivity to high frequencies. When a frequency is high enough that the distance between the microphones is half its wavelength, a reduction in high-frequency directional response can occur [2]. Because both too small and too large a microphone spacing have consequences, a spacing of between 5 and 12 mm has been reported as appropriate and is commonly used for hearing aids [2, 24, 46].
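Both ends of the spacing trade-off follow from simple wavelength arithmetic. The sketch below assumes a speed of sound of 343 m/s and frontal plane-wave incidence; the function names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed


def half_wavelength_frequency_hz(spacing_m):
    """Frequency at which the microphone spacing equals half a wavelength."""
    return SPEED_OF_SOUND / (2.0 * spacing_m)


def port_phase_difference_deg(freq_hz, spacing_m):
    """Phase difference between the two microphones for sound from the front."""
    return 360.0 * freq_hz * spacing_m / SPEED_OF_SOUND


for spacing_mm in (5.0, 12.0):
    d = spacing_mm / 1000.0
    print(spacing_mm,
          round(half_wavelength_frequency_hz(d)),
          round(port_phase_difference_deg(500.0, d), 2))
```

Under these assumptions a 12 mm spacing reaches the half-wavelength condition at about 14.3 kHz and a 5 mm spacing at about 34.3 kHz, while at 500 Hz the phase difference is only a few degrees for either spacing, which is why narrow spacing mainly costs low-frequency sensitivity.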

Given the importance of spacing between the microphones, a hearing aid must physically be able to provide adequate microphone spacing for directionality. Thus, hearing aid style often determines whether directionality is present. It is standard to find directionality in BTE, RIE, ITE and larger in-the-canal (ITC) hearing aids. While the completely-in-the-canal (CIC) and invisible-in-the-canal (IIC) hearing aid styles do not have sufficient room on the faceplate of the hearing aid for two microphones [2], it is possible in smaller styles to use the omnidirectional microphone on each hearing aid as a two-microphone array. An issue with this is that both binaural and monaural spectral cues that are a natural acoustic advantage for these hearing aid styles are removed or disrupted, thereby diminishing the potential benefit of the directional microphone system.

Although lack of directionality on custom devices may seem like an immediate disadvantage, it is important to consider the natural high-frequency directionality provided by pinna cues. By placing a hearing aid microphone further into the ear canal, as is the case in custom devices, the pinna shapes the sound before it reaches the hearing aid microphone, allowing an omnidirectional mode to be shaped by the natural directionality produced by the pinna. This is most noticeable on CIC and IIC hearing aids, but even ITE hearing aids with narrow microphone spacing can receive some of these benefits [47, 48]. A greater directivity index is noted as insertion depth of the hearing aid is increased [2, 24, 48].

As discussed previously, hearing aids that sit above the ear, such as BTE and RIE hearing aids, must compensate for the lack of pinna cues due to the microphone location above the ear. Proximity of the microphones to external ear anatomy, such as the helix, can obscure microphones and impact sound entering the microphones, and thus directionality [49]. Due to these factors, it is important to ensure the BTE and RIE hearing aids are positioned in such a way that they are placed as far forward on the pinna as is comfortable to reduce any positioning impacts on directionality.

As a final note regarding implementation, four-microphone arrays additionally require wireless functionality, so size is again a consideration: the hearing aid must be large enough to fit the wireless antennae and batteries that can provide enough power for the wireless transmissions. As such, four-microphone arrays are typically found in BTE, RIE, and larger custom hearing aids.

6.2.2 Listening environment

A limitation of directional microphone systems is that the SNR benefit is realized only when certain conditions are met. First, the sounds of interest must be spatially separated from the sounds that are not of interest (“noise”) [2]. Effectively, this means that noise cannot be directly next to or behind the sounds of interest, as the noise will be amplified alongside the sounds of interest.

Second, the sounds of interest must be located within the directional beam [2]. If the sounds of interest are located outside the directional polar pattern of the directional microphone system, the signal of interest will be attenuated.

Third, the sounds of interest should be within “critical distance” of the hearing aid user. The critical distance is the distance at which the sound pressure level of a sound is composed of equal parts direct sound and reverberant sound [50]. Reverberant sound, or reverberation, results from sound reflecting off surfaces in the environment. Reverberations in the environment cause reflections to hit both microphones at the same time, thereby reducing the phase differences that create the intended directional response. Thus, the ability of the hearing aid to cancel unwanted reverberant sound is reduced [2]. Critical distance varies depending on the listening environment, and can range from 2 to 3 meters in an environment with minimal reverberation to less than 1 meter in a highly reverberant environment [50, 51]. It should be noted that sounds that fall outside of critical distance are not immediately unavailable to the hearing aid user, but rather there is a gradual reduction in benefit as sound sources cross and move further outside critical distance [2].
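How critical distance shrinks with reverberation can be estimated with a widely used room-acoustics approximation, d_c ≈ 0.057·sqrt(Q·V/RT60), where V is the room volume in cubic meters, RT60 the reverberation time in seconds, and Q the source directivity factor. This formula is not taken from the chapter and is offered only as a rough sketch; the room values below are hypothetical.

```python
import math


def critical_distance_m(room_volume_m3, rt60_s, directivity_q=1.0):
    """Approximate critical distance in meters (diffuse-field approximation)."""
    return 0.057 * math.sqrt(directivity_q * room_volume_m3 / rt60_s)


# Same hypothetical 100 cubic meter room with short and long reverberation times.
for rt60 in (0.3, 0.5, 2.0):
    print(rt60, round(critical_distance_m(100.0, rt60), 2))
```

In this approximation a fourfold increase in reverberation time halves the critical distance, so a talker who is inside the critical distance in a well-damped room may be well outside it in a reverberant one.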

While hearing aid directionality provides benefits in certain environments, hearing aid users may not always find it beneficial if sounds of interest are coming from directions other than the front. As mentioned, using a hearing aid with automatic control of adaptive directionality, or providing the hearing aid user the ability to switch between settings, can provide flexibility to the user, based on their own experiences and listening environments. Thus, it is important to take hearing aid users’ needs into consideration when fitting hearing aids with directionality.

6.2.3 Candidacy

All hearing aid users can benefit from directional microphone systems. With few exceptions, directional benefit does not depend on hearing loss [2, 9]. However, a person with more hearing loss, and thus SNR loss, will be more dependent on a directional microphone system than a person with less or no hearing loss [2]. Directional microphone systems cannot completely compensate for the suprathreshold auditory processing problems of some people experiencing poor aided speech recognition, such as occurs with retro-cochlear disorders of the auditory nervous system. Similarly, directional microphone systems cannot compensate in cases in which there is unaidable hearing at certain frequencies due to the extent of the SNHL [2]. While directionality can still provide the best possible SNR for these hearing aid users, it is important to establish expectations regarding the benefit of directionality and hearing aid technology.

Another aspect of candidacy to consider regarding directional benefit is whether the person with hearing loss is a candidate for open fit hearing aids. Open fit hearing aids use coupling to the ear canal (e.g., a dome) that allows low-frequency sound to enter and exit the ear canal, which allows for significant venting. This provides a more natural, “open” sound, because the hearing aid user hears the low frequencies unimpeded, while the hearing aid provides amplification for the mid and high frequencies [2]. Candidates for open fit hearing aids have relatively good low-frequency hearing, with hearing thresholds better than about 40 dB hearing level (HL) in the low frequencies. Open fit hearing aids also reduce the occlusion effect. The occlusion effect is the buildup of low-frequency sound pressure level (SPL) in an occluded ear canal. Sounds generated by the hearing aid user, such as own voice, chewing, swallowing, breathing, and coughing, are conducted via the body to the ear canal [52, 53]. As an open fit does not result in the buildup of low-frequency energy, the occlusion effect is notably reduced.

However, this venting provided in open fittings has a negative impact on overall directional benefit in the low frequencies. It is only possible to provide improved SNR, and thereby a directional benefit, for frequencies where the hearing aid output is greater than the vent output [2]. Since in open fittings there is minimal low-frequency amplification, and what low-frequency amplification is provided will likely leak out of the vent, it is not possible to achieve hearing aid output greater than vent output in this frequency range. In addition, open fittings reduce the benefit of directionality because ambient noise can enter the ear canal [2, 54, 55]. These factors mean that open-fit hearing aid users will only receive directional benefit in the mid- and high frequencies [2].

Despite the drawbacks open fittings have on directional benefit, it is once again important to think about the natural directional response pattern of the ear. Recall that the open ear has a more omnidirectional response in the low frequencies compared to the high frequencies [2, 56]. Because of this, and despite the lack of directional benefit in the low frequencies, an open fitting with directionality can still be seen as a solution that replicates the natural directionality pattern of the open ear.

6.2.4 Hearing aid user factors

Directionality in hearing aids can provide significant benefit to hearing aid users, but as with any hearing aid technology, the hearing aid users, their own listening scenarios, and their own unique preferences must be considered. It has been shown that more than 30% of adults’ active listening time is spent attending to sounds that are not in front, where there are multiple target sounds, where the sounds are moving, or any combination of these—situations where front-facing directionality can interfere with audibility of the sound of interest [2, 42, 43, 45].

Furthermore, the hearing aid makes broad assumptions about noise and the interfering signal. While noise is assumed to be “unwanted sound,” is it appropriate for hearing aids to always make assumptions about which sounds are “unwanted”? Consider that the sound of interest for a hearing aid user may not always be speech, and this can vary based on the listening environment for the same general situation. For example, in a busy airport terminal, a hearing aid user may want to hear the speech of a travel companion walking next to them but must also hear the announcements coming from behind.

All the above complicates a “one-size-fits-all” directionality, even with automatic adaptive directional microphone systems. Understanding an individual’s use case scenarios can help inform the hearing aid fitting. While automatically activated adaptive directionality may be beneficial for everyday use, situations may also occur in which a fixed directional or omnidirectional mode in a hearing aid program is beneficial. An omnidirectional program could be useful for a teacher monitoring a crowded lunchroom or a jogger who wants to be aware of their environment and traffic while jogging on a city sidewalk. Modern hearing aids allow for multiple programs, so several programs can be set up to meet each hearing aid user’s needs, and the user can then cycle through them as needed.

Understanding each hearing aid user’s needs is critical to ensuring that the hearing aids are programmed to provide optimal benefit in all of the user’s daily environments. Additionally, it is important to provide education through counseling so that each hearing aid user knows when and how to implement program changes in their hearing aids, as well as when and how to make changes to their environments to achieve maximum directional benefit [45].

To benefit from directionality, hearing aid users must also encounter enough situations in which directionality is potentially beneficial.

6.2.5 Disadvantages

Disadvantages previously discussed include reduced amplification of off-axis sounds and reduction in the low frequencies. In environments where the signal of interest is off axis, the listener may be required to turn their head towards the signal of interest. Compensation of low-frequency loss by gain, implementation of band-split directionality, and open fitting in which no or minimal low-frequency gain is applied are methods of overcoming the inherent reduction in low frequencies observed in directional microphone systems.

In addition to the above, directional microphone systems can also reduce left/right localization ability and be more susceptible to wind noise [2]. Reduced localization is most prevalent in a bilateral pair of hearing aids that do not coordinate gain settings between the ears. Modern hearing aids that synchronize both ears do not experience such reduction in localization, as they help maintain the interaural differences important for localization [2]. Furthermore, complex multiband directionality schemes, such as band-split directionality, can help preserve localization while providing directionality. One example is a hearing aid that provides omnidirectionality below an adjustable low-frequency cut-off, a monaural hyper-cardioid directionality in each hearing aid above 5000 Hz, and four-microphone array beamformer mode between the low-frequency cut-off and 5000 Hz [45, 57]. This strategy preserves the ITDs, which are the dominant cue for localization in the low frequencies, and the ILDs, which help maintain high-frequency localization for sounds above 5000 Hz. Lastly, the frequencies used for the beamformer mode are within the frequencies most important for speech recognition [45].

While all hearing aid microphones are susceptible to wind noise, directional microphone systems are most impacted. Wind noise occurs when wind passes over the head or pinna and creates turbulence. The turbulence creates areas of high and low pressure, which are picked up by the microphones and converted to an audible, amplified sound [58]. Wind noise is characterized by a loud low- to mid-frequency sound, depending on the speed of the wind [2, 58]. The turbulence caused by wind is unique, and if the noise is uncorrelated at each microphone in a two-microphone directional system, it is not canceled out like environmental sound [58]. Instead, the uncorrelated wind noise is added between the two microphones and amplified [58].
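The difference between correlated sound and uncorrelated wind noise in a subtractive system can be shown with a small numerical experiment. This is a simplified sketch: identical samples at both microphones stand in for a sound that the internal delay has aligned at the null, independent Gaussian noise stands in for wind turbulence, and all names are hypothetical.

```python
import random


def mean_power(samples):
    """Average squared amplitude of a signal."""
    return sum(s * s for s in samples) / len(samples)


def subtract(front, rear):
    """Sample-by-sample subtraction, as in a delay-and-subtract pair."""
    return [f - r for f, r in zip(front, rear)]


rng = random.Random(0)  # fixed seed so the experiment is repeatable
n = 20000
shared = [rng.gauss(0.0, 1.0) for _ in range(n)]      # same signal at both microphones
wind_front = [rng.gauss(0.0, 1.0) for _ in range(n)]  # independent noise per microphone
wind_rear = [rng.gauss(0.0, 1.0) for _ in range(n)]

correlated_out = mean_power(subtract(shared, shared))
uncorrelated_out = mean_power(subtract(wind_front, wind_rear))

print(correlated_out)                                  # cancels completely
print(uncorrelated_out / mean_power(wind_front))       # roughly doubles in power
```

Because the two wind signals share no common component, subtraction adds their powers instead of canceling them, which is consistent with directional modes being more affected by wind than omnidirectional modes.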

BTE and RIE hearing aids, with microphones located on the top of the ear, and larger ITE hearing aids with microphones not shielded from wind by the pinna, are especially prone to wind noise; note that these are also the hearing aids most typically used with directionality. In these hearing aids, wind noise reduction algorithms are typically used to detect and reduce wind noise. Although the exact implementation of wind noise reduction varies between hearing aid designers, it generally includes a temporary reduction of the low-frequency gain [58, 59, 60, 61]. Additionally, the hearing aid may switch to an omnidirectional mode that is less susceptible to wind noise or use a band-split response to create an omnidirectional pattern in the low frequencies and a directional pattern in the high frequencies [58, 60, 62]. As stressed in the previous section pertaining to hearing aid user factors, it is important to understand a hearing aid user’s needs and preferences. A hearing aid user who is frequently in a windy environment (e.g., outside, on a boat, or on a bicycle) may require a dedicated program in which directionality is turned off.

All these factors influencing the potential benefit of directional microphone systems are summarized in Table 2.

Table 2. Factors influencing the effectiveness of directional microphone systems.

7. Conclusions

People with SNHL have difficulty hearing in noise. The only hearing aid technology, in addition to amplification, proven to help hearing aid users hear better in noise is directionality, and nearly all hearing aid users can benefit from it [2, 17]. Modern enhancements in wireless technology have expanded the types of microphone arrays from simple two-microphone arrays on single hearing aids to four-microphone arrays in bilaterally fitted hearing aids. Microphone arrays play an important role in improving hearing in noise for hearing aid users. Furthermore, the flexibility of adaptive directionality, driven by environmental analysis and classification in combination with a steering algorithm, provides a customizable experience capable of meeting hearing aid users’ needs across the many acoustic environments they encounter.

References

1. Picou EM. MarkeTrak 10 (MT10) survey results demonstrate high satisfaction with and benefits from hearing aids. Seminars in Hearing. 2020; 41 (1):21-36
2. Dillon H. Hearing Aids. 2nd ed. Sydney: Boomerang Press; 2012
3. Moore BC. Perceptual Consequences of Cochlear Damage. Oxford: Oxford University Press; 1995
4. Killion MC. I can hear what people say, but I can’t understand them. Hearing Review. 1997; 4:8-14
5. Killion MC. The SIN report: Circuits haven't solved the hearing-in-noise problem. The Hearing Journal. 1997; 50 (10):28-30
6. Killion MC. Hearing aids: Past, present, future: Moving toward normal conversations in noise. British Journal of Audiology. 1997; 31 (3):141-148
7. Peters RW, Moore BC, Baer T. Speech reception thresholds in noise with and without spectral and temporal dips for hearing-impaired and normally hearing people. The Journal of the Acoustical Society of America. 1998; 103 (1):577-587
8. Plomp R. A signal-to-noise ratio model for the speech-reception threshold of the hearing impaired. Journal of Speech, Language, and Hearing Research. 1986; 29 (2):146-154
9. Mueller HG, Ricketts TA. Directional-microphone hearing aids: An update. The Hearing Journal. 2000; 53 (5):10-19
10. Bentler RA. Effectiveness of directional microphones and noise reduction schemes in hearing aids: A systematic review of the evidence. Journal of the American Academy of Audiology. 2005; 16 (7):473-484
11. Avan P, Giraudet F, Büki B. Importance of binaural hearing. Audiology & Neurotology. 2015; 20 (1):3-6
12. Derleth P, Georganti E, Latzel M, Courtois G, Hofbauer M, Raether J, et al. Binaural signal processing in hearing aids. Seminars in Hearing. 2021; 42 (3):206-223
13. Moore BC. An Introduction to the Psychology of Hearing. 6th ed. Bingley: Emerald; 2012
14. Musicant AD, Butler RA. Influence of monaural spectral cues on binaural localization. The Journal of the Acoustical Society of America. 1985; 77 (1):202-208
15. Byrne D, Noble W. Optimizing sound localization with hearing aids. Trends in Amplification. 1998; 3 (2):51-73
16. Zurek PM. Binaural advantages and directional effects in speech intelligibility. In: Studebaker GA, Hochberg I, editors. Acoustical Factors Affecting Hearing Aid Performance. Boston: Allyn & Bacon; 1993. pp. 255-275
17. Ricketts TA. Directional hearing aids: Then and now. Journal of Rehabilitation Research and Development. 2005; 42 (4 Suppl. 2):133-144
18. Jespersen CT, Kirkwood BC, Groth J. Increasing the effectiveness of hearing aid directional microphones. Seminars in Hearing. 2021; 42 (3):224-236
19. Kates JM. Digital Hearing Aids. 2nd ed. San Diego: Plural Publishing; 2008
20. Picou EM, Ricketts TA. An evaluation of hearing aid beamforming microphone arrays in a noisy laboratory setting. Journal of the American Academy of Audiology. 2019; 30 (2):131-144
21. Mens LH. Speech understanding in noise with an eyeglass hearing aid: Asymmetric fitting and the head shadow benefit of anterior microphones. International Journal of Audiology. 2011; 50 (1):27-33
22. Griffiths L, Jim C. An alternative approach to linearly constrained adaptive beamforming. IEEE Transactions on Antennas and Propagation. 1982; 30 (1):27-34
23. Hayes D. Environmental classification in hearing aids. Seminars in Hearing. 2021; 42 (3):186-205
24. Ricketts TA. Directional hearing aids. Trends in Amplification. 2001; 5 (4):139-176
25. Groth J. Binaural Directionality II with Spatial Sense. Report No.: M201175-GB-14.12-Rev.A. Ballerup, Denmark: ReSound; 2015. 9 p
26. Jespersen CT, Kirkwood B, Schindwolf I. M&RIE Receiver Preferred for Sound Quality and Localisation. Report No.: M201502GB-20.07-Rev.A. Ballerup, Denmark: ReSound; 2020. 8 p
27. Xu J, Han W. Improvement of adult BTE hearing aid wearers' front/back localization performance using digital pinna-cue preserving technologies: An evidence-based review. Korean Journal of Audiology. 2014; 18 (3):97-104
28. Soede W, Berkhout AJ, Bilsen FA. Development of a directional hearing instrument based on array technology. The Journal of the Acoustical Society of America. 1993; 94 (2):785-798
29. Soede W, Bilsen FA, Berkhout AJ. Assessment of a directional microphone array for hearing-impaired listeners. The Journal of the Acoustical Society of America. 1993; 94 (2):799-808
30. Branda E, Wurzbacher T. Motion sensors in automatic steering of hearing aids. Seminars in Hearing. 2021; 42 (3):237-247
31. Gusó E, Luberadzka J, Baig M, Sayin Saraç U, Serra X. An objective evaluation of hearing aids and DNN-based speech enhancement in complex acoustic scenes. In: AA. VV. IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2023). 1 ed. New Paltz, New York: IEEE; 2023. pp. 1-5
32. Bentler R, Palmer C, Mueller HG. Evaluation of a second-order directional microphone hearing aid: I. Speech perception outcomes. Journal of the American Academy of Audiology. 2006; 17 (3):179-189
33. Valente M, Fabry D, Potts LG. Recognition of speech in noise with hearing aids using dual microphones. Journal of the American Academy of Audiology. 1995; 6 (6):440-449
34. Preves DA, Sammeth CA, Wynne MK. Field trial evaluations of a switched directional/omnidirectional in-the-ear hearing instrument. Journal of the American Academy of Audiology. 1999; 10 (5):273-284
35. Compton-Conley CL, Neuman AC, Killion MC, Levitt H. Performance of directional microphones for hearing aids: Real-world versus simulation. Journal of the American Academy of Audiology. 2004; 15 (6):440-455
36. Aspell E, Picou E, Ricketts T. Directional benefit is present with audiovisual stimuli: Limiting ceiling effects. Journal of the American Academy of Audiology. 2014; 25 (7):666-675
37. Picou EM, Aspell E, Ricketts TA. Potential benefits and limitations of three types of directional processing in hearing aids. Ear and Hearing. 2014; 35 (3):339-352
38. Groth J. The Evolution of the Resound Binaural Hearing Strategy: All Access Directionality and Ultra Focus. Report No.: M201493GB-20.05-Rev.A. Ballerup, Denmark: ReSound; 2020. 8 p
39. Wang L, Best V, Shinn-Cunningham BG. Benefits of beamforming with local spatial-cue preservation for speech localization and segregation. Trends in Hearing. 2020; 24:2331216519896908
40. Jespersen CT, Groth J. Enhanced Directional Strategy with New Binaural Beamformer Leads to Vastly Improved Speech Recognition in Noise. Report No.: M201620GB-22.06-Rev.A. Ballerup, Denmark: ReSound; 2022. 6 p
41. Cord MT, Walden BE, Surr RK, Dittberner AB. Field evaluation of an asymmetric directional microphone fitting. Journal of the American Academy of Audiology. 2007; 18 (3):245-256
42. Blamey PJ, Fiket HJ, Steele BR. Improving speech intelligibility in background noise with an adaptive directional microphone. Journal of the American Academy of Audiology. 2006; 17 (7):519-530
43. Walden BE, Surr RK, Cord MT, Dyrlund O. Predicting hearing aid microphone preference in everyday listening. Journal of the American Academy of Audiology. 2004; 15 (5):365-396
44. Groth J, Bridges J. An Overview of Directional Options in Resound Hearing Aids. Report No.: M201521GB-21.01-Rev.A. Ballerup, Denmark: ReSound; 2021. 9 p
45. Jespersen CT, Kirkwood B, Groth J. Effect of directional strategy on audibility of sounds in the environment for varying hearing loss severity. Canadian Audiologist. 2017; 4 (6):1-11
46. Ludvigsen C, Baekgaard L, Kuk F. Design Considerations in Directional Microphones. Widex Press; 2001. p. 18
47. Ricketts T. Impact of noise source configuration on directional hearing aid benefit and performance. Ear and Hearing. 2000; 21 (3):194-205
48. Fortune TW. Real-ear polar patterns and aided directional sensitivity. Journal of the American Academy of Audiology. 1997; 8 (2):119-131
49. Ricketts T. Directivity quantification in hearing aids: Fitting and measurement effects. Ear and Hearing. 2000; 21 (1):45-58
50. Ricketts TA, Hornsby BWY. Distance and reverberation effects on directional benefit. Ear and Hearing. 2003; 24 (6):472-484
51. Hawkins DB, Yacullo WS. Signal-to-noise ratio advantage of binaural hearing aids and directional microphones under different levels of reverberation. The Journal of Speech and Hearing Disorders. 1984; 49 (3):278-286
52. French-Saint George M, Barr-Hamilton RM. Relief of the occluded ear sensation to improve earmold comfort. Journal of the American Audiology Society. 1978; 4 (1):30-35
53. Zachman TA, Studebaker GA. Investigation of the acoustics of earmold vents. The Journal of the Acoustical Society of America. 1970; 47 (4):1107-1115
54. Bentler R, Wu Y-H, Jeon J. Effectiveness of directional technology in open-canal hearing instruments. The Hearing Journal. 2006; 59 (11):40-47
55. Mueller HG, Ricketts TA. Open-canal fittings: Ten take-home tips. The Hearing Journal. 2006; 59 (11):24-39
56. Christensen LA. The evolution of directionality: Have developments led to greater benefit for hearing aid users? Canadian Audiologist. 2017; 4 (4):1-15
57. Groth J, Laureyns M. Preserving localization in hearing instrument fittings. The Hearing Journal. 2011; 64 (2):34-38
58. Korhonen P. Wind Noise Management in Hearing Aids. Seminars in Hearing. 2021; 42 (3):248-259
59. Bentler R, Chiou L-K. Digital noise reduction: An overview. Trends in Amplification. 2006; 10 (2):67-82
60. Stender T, Hielscher M. Windguard: Bringing Listening Comfort to Windy Conditions. Report No.: M200765-GB-12.05-Rev.A. Ballerup, Denmark: ReSound; 2012. 6 p
61. Weile J, Andersen M. Wind Noise Management. Oticon Tech Paper; 2016
62. Kuk F, Keenan D, Lau C-C, Ludvigsen C. Performance of a fully adaptive directional microphone to signals presented from various azimuths. Journal of the American Academy of Audiology. 2005; 16 (6):333-347