Abstract

People around the world are living longer. The question arises of how to help elderly people to live longer independently and feel safe in their homes. Activity of Daily Living (ADL) recognition systems automatically recognize the daily activities of residents in smart homes. Automated monitoring of the daily routine of older individuals, detecting behavior patterns, and identifying deviations can help to identify the need for assistance. Such systems must ensure the confidentiality, privacy, and autonomy of residents. In this chapter, we review research and development in the field of ADL recognition. Breakthrough advancements have been evident in recent years with advances in sensor technology, the Internet of Things (IoT), machine learning, and artificial intelligence. We examine the main steps in the development of an ADL recognition system, introduce metrics for system evaluation, and present the latest trends in knowledge transfer and detection of behavior changes. The literature overview shows that deep learning approaches currently provide promising results. Such systems will soon mature for more diverse practical uses as transfer learning enables their fast deployment in new environments.

Keywords

- digital health

- elderly

- activities of daily living

- recognition of activities

- sensors

- machine learning

1. Introduction

Today, most people can expect to live beyond the age of 60. Projections by the World Health Organization indicate that by 2050, the global population of people aged 60 and older will double. The number of persons aged 80 or older is expected to triple between 2020 and 2050 to reach 426 million. The shift in the distribution of a country’s population toward older ages is known as population aging. Initially, it took place in high-income countries. However, nowadays, the most significant shifts are observed in low- and middle-income countries. Population aging has many consequences, such as increased household costs, strains on public finances and healthcare providers, and decreasing economic growth. It requires transforming healthcare services and demands new approaches to optimize costs.

Aging is a physiological process that cannot be prevented, but efforts can be made to improve its quality. The World Health Organization defines digital health as the field of knowledge and practice associated with developing and using digital technologies to improve health. It expands the concept of eHealth to include artificial intelligence, machine learning, big data, the Internet of Things (IoT), etc. If incorporated into the broader care system, it could help elderly people and their caregivers.

As life expectancy increases, the number of applications for nursing homes increases as well, and applicants often face long waiting times because demand exceeds the available capacity. In addition, many elderly people cannot afford a nursing home.

People often do not want to leave the home environment where they have spent the majority of their lives and move to a nursing home. The question is whether technology can help them stay independent and safe at home longer. Today, a wide range of aids can help the elderly feel safer: emergency call necklaces, mobile phones with fall sensors, applications that automatically notify emergency services, etc. However, these aids are mostly closed, standalone systems that the elderly often struggle to use, either because they do not know how to use them, forget to use them in critical situations, or simply forget to change or charge the batteries. Monitoring Activities of Daily Living (ADL) [1] can help assess when an elderly person might need help, which is the topic of this chapter.

The chapter is organized as follows. In Section 2, the basics of ADL recognition are described. Sensors and networks that are commonly used for ADL recognition are given in Section 3. Once the network is set up, the next step is data collection. Because this is a time-consuming and expensive task, some publicly available datasets are referenced in Section 4. Sections 5 to 7 describe the major steps in constructing an ADL recognition system, from data preprocessing to evaluating the final system. ADL recognition environments differ in user types, sensor technologies, and environmental conditions. Section 8 covers transfer learning approaches, in which knowledge previously learned in one ADL recognition environment is used to model a new but related environment. One of the goals of ADL recognition is early detection of anomalies in user behavior. Section 9 is devoted to this topic. The chapter concludes with some problems that are still open and some ideas for future work.

2. Recognition of activities of daily living

2.1 Activities of daily living

Activities of daily living were defined in the 1950s by geriatrician Sidney Katz [2]. He determined six basic ADLs: bathing, dressing, toileting, transferring (i.e., getting in and out of a chair or bed without assistance), continence, and feeding. In the late 1960s, Instrumental ADLs were additionally defined to measure a greater range of activities needed for independence and indicate disabilities that might not show up using the ADL alone (e.g., managing finances and taking medications). Instrumental ADLs are more complex and challenging to recognize. Although both types of activities are important, basic ADLs are usually recognized by ADL recognition systems. The set of daily activities varies from study to study. Some studies, especially those based on wearable sensors, instead of basic activities, recognize movement-related activities like walking, walking upstairs, walking downstairs, sitting, standing, etc.

2.2 ADL recognition system

ADL recognition and monitoring systems in Ambient Assisted Living (AAL) environments automatically recognize the daily activities of residents in smart homes. In contrast to using elderly aids, in most cases, residents do not interact directly with such systems as they are part of their environment or installed in their homes. These systems aim to enable elderly people to live longer in their home environment, be independent, have a high quality of life, and ultimately reduce the costs of society and healthcare systems. Automated monitoring of the daily routines of older individuals could recognize early indicators of needing assistance. It is important to mention that such systems must ensure residents’ confidentiality, privacy, and autonomy.

ADL recognition systems can be broadly classified into vision-based and sensor-based systems. Historically, vision-based systems appeared first. They use cameras to capture information and computer vision techniques to recognize activities. There are many issues related to these approaches. Privacy is the primary concern. Today, sensor-based approaches are much more popular due to low costs and advancements in sensor technology. These are technologies that intrude significantly less into the user’s privacy. Sensor-based approaches are further divided into solutions based on wearable sensors, solutions based on sensors attached to objects, solutions based on environmental sensors, and solutions based on a combination of different types of sensors. In approaches based on wearable sensors, users must carry the sensors with them as they perform activities. As mentioned in the introduction, this could be problematic for elderly people. They do not want to use them or forget to use them. In approaches based on sensors attached to objects, users must use these objects when performing daily activities. This can also be problematic, as we force them to change their habits. The advantage of approaches based on environmental sensors is that they do not alter how a person performs any activity. These approaches bring new problems that need to be addressed, such as noise and interference in sensor data caused by the environment.

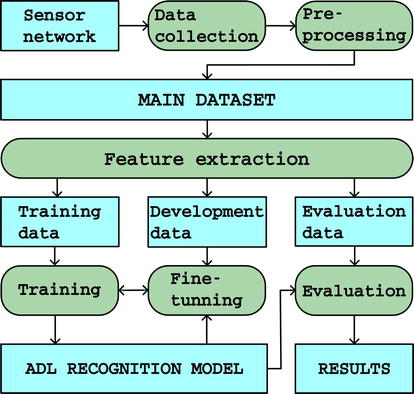

In this chapter, we focus on sensor-based approaches. The setup of the ADL recognition system for research purposes is depicted in Figure 1. The central part of the system is the ADL recognition model. Each step in the system’s development is described in a separate section. In the remainder of the chapter, we use the term ADL system instead of ADL recognition system.

Figure 1.

General data flow for training and evaluating ADL systems.

3. Sensors and networks

The first step in building an ADL system is selecting and deploying sensors and setting up the network for data transmission.

Wearable sensors are attached to the person’s body to measure parameters of interest. Accelerometers are the most broadly used wearable sensors [3]. In some cases, they are combined with other sensors, such as a gyroscope, magnetometer, GPS, and pressure sensor. To measure physiological signals, additional biometric sensors would be required, such as those for body temperature, ECG, blood pressure, oxygen, etc. However, many of them are not useful in the context of ADL recognition. For example, after performing physically demanding activities (e.g., running), the heart rate remains high for a while, even if the individual is lying or sitting. An ADL system can utilize the sensor data from a smartphone [4]. A single smartphone provides a single point of contact with the body. To obtain more contact points, smartphones are combined with other wearable sensors on different body parts to capture the subject’s movements fully. The popularity of wearable sensors has given rise to a new technology called Body Sensor Network (BSN). BSNs collect data from wearable sensors, process them, and extract useful information. As pointed out in the previous section, wearing sensors is often not feasible. Wearable sensors are well suited for applications in physical therapy, enabling accurate recognition of movements. They are probably not the best choice for ADL recognition for elderly people.

Objects of daily use could be tagged with RFID tags [5]. Tags are small chips that can be easily attached to any object. These tags could be active or passive. Active tags have their own power supply, while passive tags are battery-less and harvest their energy from the radio waves of the RFID readers. Active tags have a longer range as compared to passive tags. A reader is a device employed for gathering information from RFID tags. Equipped with an antenna emitting radio waves, the reader interacts with RFID tags by receiving and modulating the radio waves. RFID tags transmit information, including their unique identifier. Data from RFID tags is commonly used in combination with data from accelerometers. The user wears a device consisting of an accelerometer and an RFID reader. As pointed out, wearing an RFID reader is problematic for elderly people.

The idea of using environmental sensors is to deploy sensors in the environment where the activities are being performed [6, 7, 8]. Environmental sensors are static devices that capture measures in the environment in which they are deployed. Environmental sensors can be binary sensors with two possible states (e.g., ON/OFF) or numerical sensors that provide values within a predetermined range. In most studies, passive infrared (PIR) sensors are utilized to monitor daily activities. With the help of this sensor, general movement or presence in a room can be detected. The next sensor is an ultrasonic sensor, which determines the distance to objects or presence in a precisely defined location due to its narrow operating angle. A temperature sensor detects changes in the ambient temperature. They can also be used near heat sources such as a stove to detect meal preparation. Magnetic sensors can be used to determine whether doors of rooms or apartments are open or closed. This can help determine which rooms the observed person has entered. The power consumption sensor indicates the use of electrical devices. A water flow sensor can show water use in a sink or shower, indicating the resident’s personal care. Pressure sensors can be used on different surfaces such as the floor, chair, toilet, or under the bed mattress. Information from these sensors can reveal how much time a person has spent sitting on a chair while eating a meal or using the toilet. A carbon dioxide

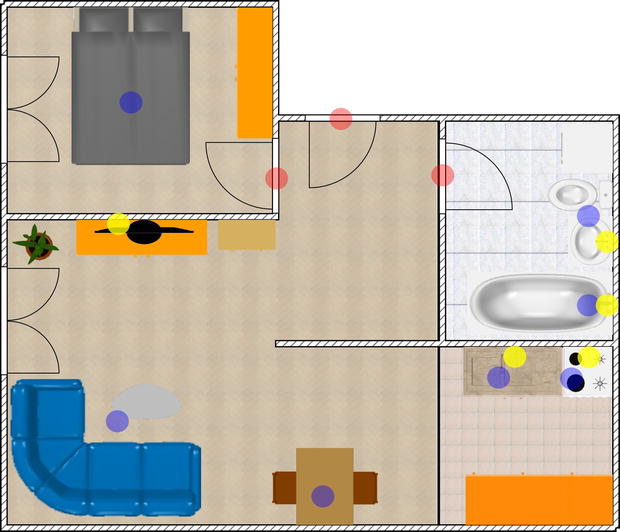

Careful placement of sensors at crucial locations in an apartment enables accurate recognition of activities, given that most activities are significantly dependent on their location. Figure 2 shows the recommended sensor locations.

Figure 2.

Locations of environmental sensors in a hypothetical apartment. Motion sensors are marked in blue, door sensors are marked in red, and power/waterflow sensors are marked in yellow.

Communication is often the most expensive operation. Sensors in the network can be connected by wires or by wireless connections. With a wired connection, communication between sensors and the base station is very fast and reliable. However, this type of connection is only suitable for a smaller number of sensors, as wiring becomes challenging and cost-intensive for a larger number of sensors. It is much easier to use wireless connections. In most cases, short range wireless networks are preferred over long-range networks as the former require lower power, and wireless communication usually constitutes the largest part of the power consumption. Radiofrequency (RF) and WiFi technologies are the most frequently used. For RF, the frequency bands are 433 MHz and 868 MHz (in some regions, 915 MHz). In the case of WiFi, data could be exchanged on two frequency bands, at 2.4 GHz and 5 GHz. The idea is to minimize the amount of transmitted data. If feature extraction and ADL recognition are performed on integrated devices, raw signals do not have to be continuously sent to the server, and network traffic is minimized.

4. Data collections

For training ADL systems, large collections of data are needed. Collecting proprietary data is a very time-consuming and costly process. It can be avoided by using a publicly available dataset. Another advantage of public datasets is that performance can be evaluated and compared with published research. The research community has developed many datasets. We review the ones that have had the most significant impact on research related to ADL recognition.

Table 1 lists datasets where data was captured by the use of wearable sensors. The Opportunity dataset [9] allows multi-patient experimentation. Residents were monitored in the kitchen performing daily morning activities: waking up, grooming, making breakfast, and cleaning. Sensors were located on the participant’s body, attached to objects, and fixed in the environment. The mHealth dataset [10] contains body motion and vital sign recordings for persons performing several physical actions, like standing still, cycling, jogging, running, jumping front and back, etc. Sensors were located on each person’s chest, right wrist, and left ankle. Similar activities were performed by participants in the SBA dataset [11]. Each participant performed each activity for 3–4 minutes. Participants were equipped with five smartphones in five body positions. In the USC-HAD dataset [12], persons perform 12 different activities of the same type as in the previous two datasets. The sensing hardware was attached to the person’s front right hip. All referenced datasets are labeled with activities. In recent years, datasets containing no prior labeling of the activities have been studied [13]. Interpreting and using such data imposes significant challenges in developing appropriate ADL recognition techniques.

| Dataset | Occupancy | Size | Sensors |

|---|---|---|---|

| Opportunity [9] | 12 | 25 hours | W + O + E |

| mHealth [10] | 10 | 196,608 samples | W |

| SBA [11] | 10 | 630,000 samples | W |

| USC-HAD [12] | 14 | 84 hours | W |

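Table 1.

Datasets collected by wearable sensors. The sensors used are as follows: wearable (W), object-tagged (O), environmental (E).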

Especially for elderly people, wearing sensors all the time is problematic. Table 2 lists datasets where data is captured exclusively by using environmental sensors. The Van Kasteren dataset [7] contains data from 14 environmental sensors that changed state when a resident acted, for example, by opening or closing a door. The stored sensor values are binary. The CASAS repository [6] contains a wide variety of datasets. They differ in the number and types of sensors from which data is collected and in the number and types of activities manually assigned to the data. The ARAS dataset [14] contains binary sensor readings of 2 residents for 30 days. MIT [15] is a small dataset of 17 days collected with approximately 80 PIR sensors installed in everyday objects such as drawers, refrigerators, containers, etc. All datasets reported in Table 2 contain prior labeling of user activities. Other datasets, not listed in the table, lack activity labels or are only partially labeled [6].

| Dataset | Occupancy | Size | Sensors |

|---|---|---|---|

| Van Kasteren [7] | 1 (in 2 houses) | 19/25 days | M, D, I |

| CASAS Cairo [6] | 2 + dog | 57 days | M, T |

| CASAS Milan [6] | 1 + pet | 72 days | M, D, T |

| CASAS Kyoto 7 [6] | 2 | 46 days | M, D, I, B, HW, CW |

| CASAS Kyoto 8 [6] | 2 | 62 days | M, D, I, T, Pwr |

| CASAS Kyoto 11 [6] | 2 | 232 days | M, D, I, T, Pwr |

| ARAS [14] | 2 | 30 days | F, D, M, P, L, T, IR |

| MIT [15] | 1 | 17 days | I, B, D, A, HW, CW, LS |

Table 2.

Datasets collected by environmental sensors. The sensors used are as follows: motion (M, on/off), temperature (T, numeric), door, drawer, cabinet, etc. (D, open/close), item (I, present/absent), power consumption (Pwr, numeric), burner (B, on/off), hot and cold water sensors (HW and CW, numeric), light switch (LS, on/off), force/pressure (F, on/off), IR receiver (IR, active/not active), appliances (A, on/off), proximity (P, on/off), light detection (L, on/off).

5. Data preprocessing, feature extraction, and feature selection

Data from sensors is stored in the form of time series. Datasets collected by wearable sensors consist of raw accelerometer and gyroscope data, possibly augmented with data from other sensors. Every sensor data point is recalculated into a data point representing the relative change of the position and orientation of the sensing device.

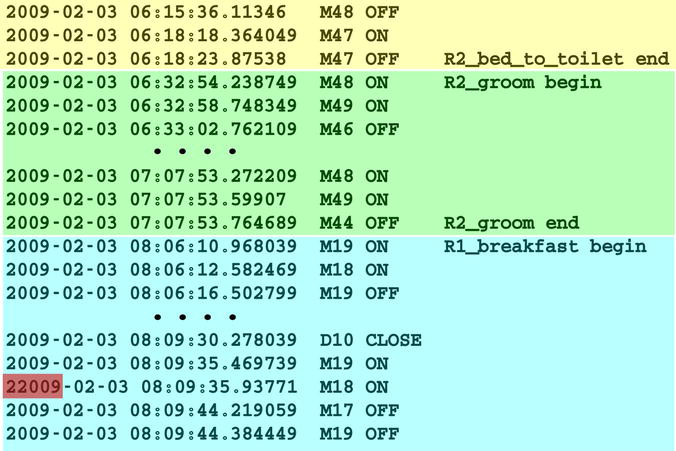

Datasets collected by environmental sensors have different structures. Figure 3 shows an excerpt of the CASAS Kyoto7 dataset, where each line has a time stamp, the ID of the sensor, the sensor state, and in certain cases, the start or end of the activity tag. Other datasets with environmental sensors have similar structures.

Figure 3.

Excerpt from raw sensor data in CASAS Kyoto7 dataset. Colored parts represent different activities; the dark-shaded part is an example of an error in the dataset.
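Reading such a file can be sketched as follows. This is a minimal illustration that assumes whitespace-separated fields in the order date, time, sensor ID, sensor state, and an optional activity name followed by `begin` or `end`. The sample lines, the `Event` type, and the function `parse_line` are hypothetical and are not copied from the dataset.

```python
from datetime import datetime
from typing import NamedTuple, Optional


class Event(NamedTuple):
    timestamp: datetime
    sensor_id: str
    state: str
    activity: Optional[str]  # activity name if the line carries a tag
    marker: Optional[str]    # "begin" or "end" if the line carries a tag


def parse_line(line: str) -> Event:
    """Parse one line of the assumed layout: date time sensor state [activity marker]."""
    parts = line.split()
    if len(parts) not in (4, 6):
        # Malformed lines are rejected instead of being silently repaired.
        raise ValueError(f"unexpected number of fields: {line!r}")
    # Assumed timestamp layout; fractional seconds are optional.
    fmt = "%Y-%m-%d %H:%M:%S.%f" if "." in parts[1] else "%Y-%m-%d %H:%M:%S"
    timestamp = datetime.strptime(f"{parts[0]} {parts[1]}", fmt)
    activity, marker = (parts[4], parts[5]) if len(parts) == 6 else (None, None)
    return Event(timestamp, parts[2], parts[3], activity, marker)


# Hypothetical sample lines in the assumed layout.
sample = [
    "2009-02-02 07:15:16.575809 M35 ON Meal_Preparation begin",
    "2009-02-02 07:15:21.408519 M35 OFF",
]
events = [parse_line(s) for s in sample]
```

Rejecting lines with an unexpected number of fields is one simple way to surface errors in the raw data before they reach the recognition model.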

It often happens that some data is missing or disturbed by environmental noise. Some preprocessing is usually required to complete the missing data. The typical approaches to dealing with missing values include: manually adding missing values that can be trivially determined; removing the examples with missing values from the dataset (that can be done if the dataset is big enough to sacrifice some training examples); keeping data with missing values if using a machine learning algorithm for ADL recognition that can deal with missing values; using a data imputation technique to replace the missing values with average values or values in the middle of the range. In some cases, raw data is passed through a segmentation process in which the continuous sequence of sensor data is divided into individual segments. Each segment represents a specific activity.
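Two of these steps, mean imputation and segmentation, can be sketched in a few lines. The example assumes that missing readings are represented as `None` and uses fixed-size sliding windows, which is a simplification of activity-based segmentation. The function names and the readings are made up for illustration.

```python
from statistics import mean
from typing import List, Optional


def impute_mean(values: List[Optional[float]]) -> List[float]:
    """Replace missing readings (None) with the mean of the observed readings."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a series with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def sliding_windows(values: List[float], size: int, step: int) -> List[List[float]]:
    """Cut a series into fixed-size windows; a trailing partial window is dropped."""
    return [values[i:i + size] for i in range(0, len(values) - size + 1, step)]


# Made-up temperature readings with two gaps.
readings = [21.0, None, 22.0, 23.0, None, 22.0]
clean = impute_mean(readings)
windows = sliding_windows(clean, size=4, step=2)
```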

Data preprocessing is followed by feature extraction and feature selection. A set of features with good discrimination can yield better recognition results. Various features can be extracted from the preprocessed sensor data. The same features are used in the training and evaluation of ADL systems. There are two main types of data features: time- and frequency-domain features. However, time-domain features are more commonly used [16]. Frequency-domain features are used in the case of wearable sensors. They have higher computation costs because of the extra Fourier transform calculation. In [17], time-domain statistical features (i.e., mean, variance, standard deviation) were combined with frequency-domain features (mel-frequency cepstral coefficients extracted from mobile phone microphone data).
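As a rough illustration of the two feature types, the sketch below computes time-domain statistics and one frequency-domain feature (the dominant frequency) for a single window of accelerometer-like samples. It uses a naive discrete Fourier transform from the standard library, which makes the extra computation visible. The function names, the sampling rate, and the synthetic signal are assumptions made for this example.

```python
import cmath
import math
from statistics import mean, pvariance
from typing import Dict, List


def time_features(window: List[float]) -> Dict[str, float]:
    """Mean, variance, and standard deviation of one window."""
    var = pvariance(window)
    return {"mean": mean(window), "variance": var, "std": math.sqrt(var)}


def dominant_frequency(window: List[float], sample_rate_hz: float) -> float:
    """Frequency (Hz) of the strongest non-DC component, via a naive DFT."""
    n = len(window)
    mu = mean(window)
    centered = [x - mu for x in window]
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n


# Synthetic 2 Hz signal sampled at 16 Hz for one second (illustrative only).
signal = [math.sin(2 * math.pi * 2 * t / 16) for t in range(16)]
features = time_features(signal)
features["dominant_hz"] = dominant_frequency(signal, sample_rate_hz=16)
```

The naive transform costs on the order of n² operations per window. A fast Fourier transform from a numerical library would be the usual choice in practice.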

When using environmental sensors, the number of features is usually the same as the number of sensors installed in a smart home. Since all the sensors are part of a feature set, the features representing inactive sensors are assigned zeros.
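A minimal sketch of this encoding is shown below, assuming binary sensors and a fixed sensor order. The sensor IDs and the function `feature_vector` are hypothetical.

```python
from typing import Iterable, List

# Hypothetical sensor IDs; the order fixes the position of each feature.
SENSORS = ["M01", "M02", "D01", "I01"]


def feature_vector(active: Iterable[str], sensors: List[str] = SENSORS) -> List[int]:
    """One feature per installed sensor: 1 if it fired in the window, else 0."""
    fired = set(active)
    unknown = fired - set(sensors)
    if unknown:
        raise ValueError(f"unknown sensor IDs: {sorted(unknown)}")
    return [1 if s in fired else 0 for s in sensors]


# M02 fired twice and D01 once; M01 and I01 stayed inactive.
vector = feature_vector(["M02", "D01", "M02"])
```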

Not all features are helpful in recognizing the activities effectively. In the process called feature selection, the most informative features are chosen. In [18], information gain was used to identify the important sensors in multienvironment sensor data. Principal Component Analysis (PCA) was used in [19] to reduce the dimensionality of the features extracted from the accelerometer sensor embedded in a smartphone.
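To illustrate the idea of ranking sensors by information gain, the sketch below scores two binary sensor features against activity labels. It is a generic textbook formulation and not the exact procedure of [18]. The toy data and names are invented.

```python
import math
from collections import Counter
from typing import Sequence


def entropy(labels: Sequence[str]) -> float:
    """Shannon entropy (in bits) of the label distribution."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(feature: Sequence[int], labels: Sequence[str]) -> float:
    """Entropy of the labels minus the weighted entropy after splitting on the feature."""
    total = len(labels)
    remainder = 0.0
    for value in set(feature):
        subset = [y for x, y in zip(feature, labels) if x == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder


labels = ["cook", "cook", "sleep", "sleep"]
stove_sensor = [1, 1, 0, 0]  # separates the two activities perfectly
hall_sensor = [1, 0, 1, 0]   # carries no information about the activity
gains = {
    "stove": information_gain(stove_sensor, labels),
    "hall": information_gain(hall_sensor, labels),
}
```

Sensors with a gain close to zero are candidates for removal from the feature set.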

Feature extraction and feature selection pack data into feature vectors, which are then used as input data to train ADL recognition algorithms. If neural network models are used for ADL recognition, feature extraction and feature selection are embedded into a model, as neural networks can automatically extract the relevant features [20].

6. ADL recognition algorithms

ADL recognition can be formulated as a classification problem. The task of the ADL algorithm is to classify feature vectors into a predefined number of classes, where each class represents one activity. Different classification techniques can be applied.

The Naïve Bayes classifier was one of the first classifiers used for ADL recognition [21]. It is based on Bayes’ theorem for conditional probability and assumes that the features are conditionally independent given the class. It classifies a feature vector by choosing the class with the maximum posterior probability.
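The following sketch shows one possible form of such a classifier for binary sensor features: a Bernoulli Naïve Bayes model with Laplace smoothing. The smoothing, the toy data, and the function names are assumptions of this example and are not taken from [21].

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def train(samples: Sequence[Tuple[List[int], str]]):
    """Estimate class priors and per-feature Bernoulli likelihoods (Laplace-smoothed)."""
    counts: Dict[str, int] = defaultdict(int)
    ones: Dict[str, List[int]] = {}
    for x, y in samples:
        counts[y] += 1
        ones.setdefault(y, [0] * len(x))
        ones[y] = [a + b for a, b in zip(ones[y], x)]
    priors = {y: c / len(samples) for y, c in counts.items()}
    likelihoods = {y: [(k + 1) / (counts[y] + 2) for k in ones[y]] for y in counts}
    return priors, likelihoods


def predict(x: List[int], priors, likelihoods) -> str:
    """Return the class with the highest log-posterior under the independence assumption."""
    def log_posterior(y: str) -> float:
        score = math.log(priors[y])
        for xi, p in zip(x, likelihoods[y]):
            score += math.log(p if xi else 1 - p)
        return score
    return max(priors, key=log_posterior)


# Toy data: features are [kitchen motion, stove power, bedroom motion].
data = [([1, 1, 0], "cook"), ([1, 0, 0], "cook"), ([0, 0, 1], "sleep"), ([0, 0, 1], "sleep")]
priors, likelihoods = train(data)
prediction = predict([1, 1, 0], priors, likelihoods)
```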

The early work on ADL also used the hidden Markov model (HMM) to recognize activities from sensor data. The basic model was advanced in different ways to model long-range dependencies. In [7], a hidden semi-Markov model (HSMM) was used, which models the duration of the hidden state. It outperformed basic HMM. Conditional random fields (CRFs) also show good performance in modeling duration. In [22], HMM was extended with a Markov chain model suitable for activity sequences, which can model longer dependencies between activities. A large number of transitions between activities were avoided by introducing the cost of activity transition.
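For orientation, the decoding step of a basic HMM can be sketched with the Viterbi algorithm, which finds the most likely sequence of hidden activities for a sequence of sensor observations. This is the plain HMM only, not the HSMM of [7] or the extended model of [22]. All probabilities and names below are made up, and all probabilities are assumed to be nonzero.

```python
import math
from typing import Dict, List


def viterbi(
    observations: List[str],
    states: List[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> List[str]:
    """Most likely hidden activity sequence for a sequence of sensor observations."""
    # Log-probabilities avoid numerical underflow on long sequences.
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]]) for s in states}]
    back: List[Dict[str, str]] = []
    for obs in observations[1:]:
        scores: Dict[str, float] = {}
        pointers: Dict[str, str] = {}
        for s in states:
            prev = max(states, key=lambda p: best[-1][p] + math.log(trans_p[p][s]))
            scores[s] = best[-1][prev] + math.log(trans_p[prev][s]) + math.log(emit_p[s][obs])
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Trace back from the best final state.
    state = max(states, key=lambda s: best[-1][s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]


states = ["cook", "sleep"]
start_p = {"cook": 0.5, "sleep": 0.5}
trans_p = {"cook": {"cook": 0.8, "sleep": 0.2}, "sleep": {"cook": 0.2, "sleep": 0.8}}
emit_p = {
    "cook": {"kitchen": 0.9, "bedroom": 0.1},
    "sleep": {"kitchen": 0.1, "bedroom": 0.9},
}
path = viterbi(["kitchen", "kitchen", "bedroom", "bedroom"], states, start_p, trans_p, emit_p)
```

The high self-transition probabilities play a role similar to a transition cost: they discourage frequent switching between activities.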

Support vector machines (SVM) are well suited to problems with small datasets. An SVM is a binary classifier but can be extended to solve multiclass problems. In [23], a “one-vs-one” approach, in which one binary classifier is trained for each pair of activities, was used with a majority vote.

In [16], variants of the K-nearest neighbor (KNN) classifier were used with datasets from CASAS. One modification of KNN was based on belief assignment. The first belief represented the overall probability of an activity instance belonging to a particular activity class, and the second belief, also known as plausibility, was the conditional probability of an activity instance belonging to a particular class, given that the class of its neighbors is known. Another modification called Fuzzy KNN assigned a degree of class membership to an activity instance rather than associate the instance to a particular activity class.
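The idea of assigning degrees of class membership can be illustrated with a simplified sketch. It uses the Hamming distance between binary feature vectors and inverse-distance weights among the k nearest neighbors. This weighting scheme is an assumption chosen for brevity and is not the formulation used in [16].

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions in which two binary vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def fuzzy_knn(
    query: List[int], samples: Sequence[Tuple[List[int], str]], k: int = 3
) -> Dict[str, float]:
    """Membership degree per class, from inverse-distance weights of the k nearest neighbors."""
    neighbors = sorted(samples, key=lambda s: hamming(query, s[0]))[:k]
    weights: Dict[str, float] = defaultdict(float)
    for x, y in neighbors:
        weights[y] += 1.0 / (1.0 + hamming(query, x))
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}


# Toy data: features are [kitchen motion, stove power, bedroom motion].
data = [([1, 1, 0], "cook"), ([1, 0, 0], "cook"), ([0, 0, 1], "sleep"), ([0, 1, 1], "sleep")]
memberships = fuzzy_knn([1, 1, 0], data, k=3)
```

A conventional KNN decision is recovered by taking the class with the highest membership degree.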

In recent years, neural networks and deep learning-based methods have gained popularity. Neural networks can learn features directly from raw data, tolerate noise in the data, and help address the problems of intraclass differences and interclass similarities. Convolutional neural networks (CNN) can capture the local dependency and scale invariance of a signal. In [20], a CNN was used for ADL recognition using mobile sensors. Good results were obtained with the Opportunity dataset. CNNs were used with environmental sensors as well. In [24], activity data was segmented using a sliding window and then converted into binary activity images. 2D CNNs were used for classification. The architecture of the CNN classifier consisted of three convolutional layers followed by pooling layers as the feature extraction part and three fully connected layers as the classification part. In [25], colored pixels were proposed to encode sensor information that is not binary. CNNs were also used in [18], where a spatial distance matrix was constructed to store the distances between sensors. A wide time-domain CNN was applied with a larger convolution kernel size, two fully connected layers, and a softmax activation function. A larger convolution kernel can capture more time-related relationships in the time-series data.

Recurrent neural networks (RNN) are suitable for processing time-series data of environmental sensors because they can remember early information in the sequence. When the time-series span is large, however, the vanishing gradient problem can occur, and a basic RNN has difficulty finding a suitable time scale to balance long-term and short-term temporal dependencies. Long short-term memory (LSTM) networks were designed to mitigate this problem, and an LSTM with a stacked structure proved to be a powerful approach in [26]. The drawback of RNNs is their slow speed.

An ADL system can employ different base classifiers with complementary characteristics to enhance recognition performance [27, 28].

7. Evaluation of results

For evaluation, the data is split into three subsets: training, development, and evaluation. The ratios of the sets can be, for example, 80–10–10%. Classification models are trained using the training set, parameters are fine-tuned using the development set, and the performance of the final system is evaluated using the evaluation set. There should be no intersection between these three sets. For small datasets, k-fold cross-validation (for example, 3-fold) is a common alternative.
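Both splitting strategies can be sketched with the standard library. The example assumes that the examples can be shuffled freely. For time-ordered sensor data, splitting by whole days may be preferable, which is not shown here. The function names are illustrative.

```python
import random
from typing import List, Sequence, Tuple


def split_80_10_10(items: Sequence, seed: int = 0) -> Tuple[List, List, List]:
    """Shuffle once, then cut into disjoint training, development, and evaluation sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(0.8 * len(shuffled))
    n_dev = int(0.1 * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:n_train + n_dev], shuffled[n_train + n_dev:]


def k_fold(items: Sequence, k: int) -> List[Tuple[List, List]]:
    """Return k (training, held-out) pairs; every item is held out exactly once."""
    items = list(items)
    folds = [items[i::k] for i in range(k)]
    return [
        ([x for j, fold in enumerate(folds) if j != i for x in fold], folds[i])
        for i in range(k)
    ]


examples = list(range(100))
train_set, dev_set, eval_set = split_80_10_10(examples)
folds = k_fold(examples, k=5)
```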

Different measures assess the performance of an ADL system. The confusion matrix summarizes how successful the system is at predicting examples belonging to various activities. Rows in the confusion matrix represent the actual activities, and the columns represent the activities that the model predicted. The confusion matrix for the case of three activities is given in Table 3.

| | Predicted 1 | Predicted 2 | Predicted 3 | |

|---|---|---|---|---|

| Actual 1 | | | | |

| Actual 2 | | | | |

| Actual 3 | | | | |

| Total | | | | |

Table 3.

Confusion matrix.

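For a long time, accuracy was the only metric in use:

$$\text{Accuracy} = \frac{\text{number of correctly recognized examples}}{\text{total number of examples}}$$

For a given activity, precision is the share of examples predicted as that activity that actually belong to it, and recall is the share of examples of that activity that were recognized correctly. The F1 score combines precision and recall using their harmonic mean:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

Because, in most cases, datasets are unbalanced, accuracy alone can be misleading, and the F1 score is often the more informative measure.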

Evaluation is closely connected to the size and characteristics of the dataset. ADL systems also differ in activity sets, approaches, and experimental setups.
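The measures discussed in this section can be computed directly from a confusion matrix. The sketch below assumes that rows hold the actual activities and columns the predicted ones, and it uses made-up counts in which the third activity is rare. The function names and the macro-averaged F1 are choices made for this example.

```python
from typing import Dict, List


def accuracy(cm: List[List[int]]) -> float:
    """Share of all examples on the diagonal (correctly recognized)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def per_class_scores(cm: List[List[int]], cls: int) -> Dict[str, float]:
    """Precision, recall, and F1 for one activity; rows = actual, columns = predicted."""
    tp = cm[cls][cls]
    predicted = sum(row[cls] for row in cm)  # column total
    actual = sum(cm[cls])                    # row total
    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Made-up counts for three activities; activity 3 is rare.
cm = [
    [50, 5, 0],
    [10, 30, 0],
    [3, 1, 1],
]
overall = accuracy(cm)
rare = per_class_scores(cm, 2)
macro_f1 = sum(per_class_scores(cm, c)["f1"] for c in range(3)) / 3
```

In this example the overall accuracy looks high, while the recall of the rare activity is low. The macro-averaged F1 score reflects that weakness.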

Tables 4 and 5 report cited ADL systems from the literature. The effectiveness of learning techniques is not directly comparable because they use different datasets and consequently different splits into training, development, and evaluation subsets. The systems also recognize various sets of activities. There is no standard setup or benchmark (i.e., benchmark dataset) for comparable evaluation of an ADL system performance. However, the general observation is that the obtained results show promise for practical use. It is also evident that they have improved over time.

| No. | Sensors | Activities | Ref. |

|---|---|---|---|

| 1. | Wearable | Walking, running, typing, etc. | [13] |

| 2. | Wearable | Walking, running, typing, etc. | [13] |

| 3. | Environmental | Brush teeth, showering, toileting, etc. | [7] |

| 4. | Environmental | Breakfast, dinner, snack, etc. | [7] |

| 5. | Environmental | Breakfast, dinner, snack, etc. | [22] |

| 6. | Wear. + envir. + mic. | Toilet use, eating, communication, etc. | [23] |

| 7. | Environmental | Toilet, leave home, dinner, etc. | [16] |

| 8. | Wearable | Jogging, walking, descending stairs, etc. | [20] |

| 9. | Environmental | Leave home, meal preparation, relax | [24] |

| 10. | Environmental | Leave home, dinner, laundry, etc. | [25] |

| 11. | Environmental | Leave home, dinner, laundry, etc. | [25] |

| 12. | Environmental | Leave home, dinner, laundry, etc. | [18] |

| 13. | Environmental | Leave home, dinner, laundry, etc. | [18] |

| 14. | Environmental | Leave home, sleeping, dinner, etc. | [28] |

| 15. | Environmental | Leave home, sleeping, dinner, etc. | [28] |

| 16. | Wearable | Walking, running, sitting, standing, etc. | [27] |

Table 4.

ADL recognition systems based either on wearable or environmental sensors, except for system number 6, which utilizes both and includes speech recognition. Only a few activities are listed. For the complete list of activities, the reader is referred to the associated reference.

| No. | Learning algorithm | Accuracy | F1 |

|---|---|---|---|

| 1. | KNN | — | 75.73% |

| 2. | Random forest | — | 88.75% |

| 3. | HSMM | — | 76.4% |

| 4. | CRF | — | 67.0% |

| 5. | Extended HMM | 94.52% | — |

| 6. | SVM | 86% | — |

| 7. | Fuzzy KNN | 97.44% | — |

| 8. | CNN | 96.88% | — |

| 9. | CNN | — | 79% |

| 10. | CNN | 95.2% | — |

| 11. | CNN | 97.8% | — |

| 12. | LSTM | 96.46% | — |

| 13. | CNN | 97.08% | — |

| 14. | Hybrid HMM + SVM | — | 76% |

| 15. | Hybrid HMM + NN | — | 71% |

| 16. | Ensemble | 95% | — |

Table 5.

Learning algorithms used for recognizing activities in ADL systems, reported in Table 4 along with the obtained ADL recognition results expressed as average accuracy or F1 score. Results are not directly comparable.

In some real-world scenarios, correct recognition of activity sequences is more important than recognition of individual activities. This makes the comparison of ADL systems even more difficult.

8. Transfer learning

ADL systems and datasets reported in the literature are very diverse. They differ in the type of residents, sensor types and layout, environmental conditions, and activities recognized. Transfer learning is an approach in which previously learned knowledge is utilized to model a new but related setting. For example, the layout of environmental sensors differs in a new apartment, or the residents of the apartment change. Transferring knowledge from one setting to another can reduce the need to collect and label new data and to train a new recognition model from scratch.

Transfer learning has most frequently been used with data from mobile and other wearable devices with sensors such as accelerometers and gyroscopes. Instance transfer and feature-representation transfer are the two most common techniques.

Instance transfer was used in [29]. In the experiment, accelerometer data was collected from one user, who was asked to perform specific tasks such as walking, running, and bicycle riding. Two new users did not want to label their accelerometer data. The data of the first user was reweighted by a covariate shift adaptation technique and combined with a probabilistic classification algorithm to recognize the activities of the new users. The idea of covariate shift is that even though the distribution of input points changes from one user to another, the conditional distribution of class labels given input points remains unchanged.
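In symbols, the covariate shift assumption and the resulting reweighting can be written as follows. The notation is introduced here for illustration and is not taken from [29]: $p_{\mathrm{src}}$ and $p_{\mathrm{tgt}}$ denote the distributions for the labeled (source) user and a new (target) user, $w$ is the importance weight, $\ell$ is a loss function, and $\theta$ are the classifier parameters.

```latex
% Covariate shift: input distributions differ, the labeling rule does not.
p_{\mathrm{src}}(x) \neq p_{\mathrm{tgt}}(x),
\qquad
p_{\mathrm{src}}(y \mid x) = p_{\mathrm{tgt}}(y \mid x)

% Importance weights and the weighted training objective
% over the n labeled examples (x_i, y_i) of the source user.
w(x) = \frac{p_{\mathrm{tgt}}(x)}{p_{\mathrm{src}}(x)},
\qquad
\hat{\theta} = \arg\min_{\theta} \sum_{i=1}^{n} w(x_i)\, \ell(x_i, y_i; \theta)
```

Source examples that resemble the new user's data receive larger weights, so the classifier is fitted mainly to the region of the input space that matters for the new user.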

Feature-representation transfer was applied in [8] in an experiment with two apartments. One apartment had labeled data available, while another had only unlabeled data. The arrangement and installation of sensors in both apartments were different, along with the profiles of the residents. Sensor mapping and a semi-supervised learning approach, in which labeled and unlabeled training data were combined to find the parameters for the HMM, improved the ADL recognition results.

Deep neural networks are particularly suited to transfer learning, as learning is achieved layerwise, enabling the transfer of selected layers from one network to the other. In [30], layers of the target network were progressively added, and weights of only the most recently added hidden layer and the classification layer were trained by gradient descent. All previous layers were frozen. A new hidden layer and classification layer were trained with features transferred from the source network.
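The freezing of transferred layers can be sketched in a few lines. This is a deliberately reduced illustration and not the cascade architecture of [30]: it assumes a single transferred hidden layer whose weights stay fixed and trains only a new logistic classification layer by gradient descent. All weights, data, and function names are hypothetical.

```python
import math


def hidden(x, W):
    """Frozen hidden layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, frozen_W, out_w, out_b):
    """Probability of class 1 from the frozen features and the trainable output layer."""
    h = hidden(x, frozen_W)
    return sigmoid(sum(w * a for w, a in zip(out_w, h)) + out_b)


def log_loss(data, frozen_W, out_w, out_b):
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(x, frozen_W, out_w, out_b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train_output_layer(data, frozen_W, epochs=200, lr=0.5):
    """Gradient descent on the classification layer only; frozen_W is never updated."""
    out_w, out_b = [0.0] * len(frozen_W), 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(out_w), 0.0
        for x, y in data:
            h = hidden(x, frozen_W)
            err = sigmoid(sum(w * a for w, a in zip(out_w, h)) + out_b) - y
            for j, a in enumerate(h):
                grad_w[j] += err * a
            grad_b += err
        out_w = [w - lr * g / n for w, g in zip(out_w, grad_w)]
        out_b -= lr * grad_b / n
    return out_w, out_b


# Hypothetical weights transferred from a source network, and a small target dataset.
frozen_W = [[1.0, -1.0], [-1.0, 1.0]]
frozen_copy = [row[:] for row in frozen_W]
target_data = [([2.0, 0.5], 1), ([1.5, 0.2], 1), ([0.3, 1.8], 0), ([0.1, 2.2], 0)]

loss_before = log_loss(target_data, frozen_W, [0.0, 0.0], 0.0)
out_w, out_b = train_output_layer(target_data, frozen_W)
loss_after = log_loss(target_data, frozen_W, out_w, out_b)
```

Because only the output layer is updated, the number of trainable parameters stays small, which is what makes this kind of transfer attractive when little labeled data is available in the new setting.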

9. Anomaly detection in user behavior

Existing solutions mainly deal with recognizing individual activities. The idea of behavior recognition is to infer a person’s behavior by studying activity sequences. Once normal behavior can be characterized, any abnormality in a person’s behavior can be detected. This is particularly important in the case of elderly people. If abnormal behavior of an elderly person is detected, the people concerned can be informed of the situation.

There is no uniform definition of abnormal activity or abnormal behavior. In general, it is an activity or behavior that occurs rarely or is not expected to occur [31]. In the literature, abnormal activities have mainly been recognized from visual data [32], which is beyond the scope of this chapter.

In [5], an RFID-based system for monitoring the activities of Alzheimer’s patients was set up. The basic idea was to track a user’s movement from one room to another and report any abnormal situation (e.g., staying in the washroom for an unusually long time).
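A rule of this kind can be sketched as follows. The sketch is not the system described in [5]; it only assumes a time-ordered log of room changes and a table of maximum stay durations per room. The room names, limits, and timestamps are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical per-room limits; rooms without an entry are never flagged.
MAX_STAY = {
    "washroom": timedelta(minutes=30),
    "kitchen": timedelta(hours=2),
}


def find_long_stays(room_events, max_stay):
    """Return (room, entry_time, duration) for every stay exceeding its limit.

    room_events is a time-ordered list of (timestamp, room) pairs, one per
    room change. The last entry has no known end and is therefore skipped.
    """
    alerts = []
    for (start, room), (end, _next_room) in zip(room_events, room_events[1:]):
        limit = max_stay.get(room)
        if limit is not None and end - start > limit:
            alerts.append((room, start, end - start))
    return alerts


events = [
    (datetime(2024, 1, 1, 8, 0), "bedroom"),
    (datetime(2024, 1, 1, 8, 5), "washroom"),
    (datetime(2024, 1, 1, 8, 50), "kitchen"),
    (datetime(2024, 1, 1, 9, 20), "living room"),
]

alerts = find_long_stays(events, MAX_STAY)
```

In this example, the 45-minute washroom stay exceeds the assumed 30-minute limit and is reported, while the 30-minute kitchen stay is not.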

With the help of medical models provided by cognitive neuroscience researchers, techniques to identify short-term and long-term abnormal activity routines were developed [33]. The focus was on instrumental ADLs. The recognized short-term behavioral anomalies were categorized as omissions, commissions, and additions. An omission occurs when an action or a sequence of actions that constitutes an instrumental ADL is not carried out (e.g., “the elderly person forgets to consume a meal after having prepared it”). A commission occurs when an instrumental activity is performed inaccurately (e.g., “putting butter in a nonrefrigerated storage”). An addition takes place when unnecessary actions are carried out to complete the current activity (e.g., “the elderly retrieves a food item not needed for lunch preparation”). Long-term abnormal behaviors refer to groups of activities and anomalies observed over relatively extended periods (ranging from one week to several months). These behaviors exhibit significant deviations from the typical trends observed in the past and may signal the onset of cognitive impairment. Long-term abnormal behaviors were personalized and tailored to trends observed as “normal” for a specific person or person’s profile. In their ADL system, different sensing devices, such as environmental sensors and RFID-tagged objects, were used.

In [31], a causal association rule mining algorithm was proposed to detect abnormal behavior. The sensor data was annotated with anomalies by experts. Anomaly causes were afterward automatically extracted from the dataset using causal association rules. To also consider data uncertainty, a Markov logic network was used to predict anomalies. Their system also recommends suitable actions to avoid the occurrence of an actual anomaly.

Identifying the habits of a resident is crucial to distinguish between common and uncommon behavior. In [34], usual behavior patterns were defined as partitions in a clustering algorithm used with ADL data. Changes in those patterns could be declared as unusual and may be indicators of declining health.

In [35], sensor data of multiple smart home residents were investigated to detect differences between groups of people. Group-level discrepancies may help isolate behaviors that manifest in a routine of daily activities due to health problems. A framework called Behavior Change Detection for Groups was developed based on change point detection methods for analyzing changes in time series data. Experiments included two groups of people. One group contained individuals with the same health/behavioral trait, and the second group contained age-matched individuals who did not exhibit the same health/behavioral trait as the first group. Statistically significant changes were detected, including changes in duration, complexity, and pattern of ADL.
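The underlying notion of a change point can be illustrated on a single time series. The sketch below is not the Behavior Change Detection for Groups framework of [35]; it assumes one daily feature for one resident and searches for the single split that maximizes the difference between the mean before and the mean after the split. The sleep durations and the function name are hypothetical.

```python
from statistics import mean


def best_change_point(series, min_segment=3):
    """Return (index, score) of the split with the largest mean shift.

    The index is the position of the first sample after the change. Both
    segments must contain at least min_segment samples. Returns (None, 0.0)
    if the series is too short or completely flat.
    """
    best_index, best_score = None, 0.0
    for i in range(min_segment, len(series) - min_segment + 1):
        score = abs(mean(series[:i]) - mean(series[i:]))
        if score > best_score:
            best_index, best_score = i, score
    return best_index, best_score


# Hypothetical daily sleep durations in hours; the routine shifts on day 5.
sleep_hours = [7.9, 8.1, 8.0, 7.8, 8.2, 6.1, 5.9, 6.2, 6.0, 5.8]

change_index, change_score = best_change_point(sleep_hours)
```

A practical detector would additionally test whether the shift is statistically significant, as reported in [35], rather than accept the largest mean difference unconditionally.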

Smart home technologies provide a new paradigm for delivering remote health monitoring. In [36], a clinician-in-the-loop visual interface was designed that provides clinicians with patient behavior patterns derived from smart home data. Clinicians can observe behavior fluctuations, which allows for early detection of health events and decline. Making recommendations in the healthcare domain is not an easy task due to its complexity. In [37], a framework is presented that includes a fuzzy logic-based decision support system that provides information about the suspected disease of an elderly person and its severity. The system is based on a similarity score computed for each activity during a day with respect to the same activity in the normal behavior pattern. These scores serve as a marker for changes in the typical behavior of the elderly person during a specific activity. A significant decline in these scores over an extended period, ranging from a few days to a few weeks, could serve as an early indicator of deterioration, prompting a notification to the caregivers or family members.
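The monitoring of similarity scores over time can be sketched as follows. The score definition in [37] is not reproduced here. As an assumption, the sketch uses the ratio of the shorter to the longer activity duration as a similarity score and raises a flag when the score stays below a threshold for several consecutive days. The threshold, the number of days, and the data are hypothetical.

```python
def similarity(observed_minutes, normal_minutes):
    """Hypothetical score in [0, 1]; 1.0 means the duration matches the normal pattern."""
    if observed_minutes == normal_minutes:
        return 1.0
    return min(observed_minutes, normal_minutes) / max(observed_minutes, normal_minutes)


def sustained_decline(daily_scores, threshold=0.7, min_days=3):
    """Return the first day of a run of at least min_days scores below threshold, else None."""
    run_start, run_length = None, 0
    for day, score in enumerate(daily_scores):
        if score < threshold:
            if run_length == 0:
                run_start = day
            run_length += 1
            if run_length >= min_days:
                return run_start
        else:
            run_length = 0
    return None


# Hypothetical sleep durations in minutes compared with a normal pattern of 480 minutes.
normal_sleep = 480
observed_sleep = [470, 490, 300, 460, 310, 290, 280]

scores = [similarity(minutes, normal_sleep) for minutes in observed_sleep]
decline_start = sustained_decline(scores)
```

Requiring several consecutive low scores means that a single unusual day, such as day 2 in this example, does not trigger a notification on its own.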

10. Challenges for further work

Recognition of activities of daily living for elderly people has seen tremendous improvements. Many challenges seem to be well tackled by deep learning algorithms. State-of-the-art developments are mostly based on either LSTM or CNN. However, current ADL recognition systems still have some problems that need to be addressed in the future. Multi-resident scenarios pose problems as the activities of two or more residents intertwine. Concurrent activities (i.e., multiple activities performed simultaneously) are also a challenge for future work. Current systems also cannot cope with the variability between different residents in performing the same activity. If a different person performs the same activity or the same person performs the activity at a different pace, the performance of a system degrades.

Recent advances in machine learning from other fields offer opportunities for significant advances in smart homes. For example, advances in natural language processing could be adopted to infer semantic knowledge between activities [38].

Fusing data from heterogeneous sources is also a challenge, as it has been shown that a model developed using one data type does not perform well with other data types. There is still a lack of real data collected with residents who have developed signs of dementia or cognitive ability loss in general.

Many challenges still have to be faced for ADL recognition and monitoring systems to be deployed in real-use cases. The research community needs to define benchmarks and standardize the evaluation metrics to reflect the requirements for a real-use deployment and enable fair algorithm comparison.

11. Conclusion

In this chapter, we reviewed the core literature on methods and systems for ADL recognition. After a thorough literature review, we can conclude that the field has developed rapidly in the last two decades. If enough data is available, deep learning and neural network architectures provide very good results. In cases where we do not have enough data, transfer learning could mitigate the problem.

ADL systems based on environmental sensors are better accepted among end users as they do not reveal too much private information. Their advantage is also that they do not require a change in the way the residents perform daily activities. However, social barriers still impede the adoption of smart homes and must be addressed in the future. Overcoming these barriers necessitates trustworthy privacy-preserving data management and reliable cyber-secure ADL systems.

Acknowledgments

This work was supported by the Slovenian Research and Innovation Agency ARIS (research core funding No. P2-0069, Advanced Methods of Interaction in Telecommunications).

References

1. Mlinac ME, Feng MC. Assessment of activities of daily living, self-care, and independence. Archives of Clinical Neuropsychology. 2016; 31(6):506-516

2. Wallace M, Shelkey M, et al. Katz index of independence in activities of daily living (ADL). Urologic Nursing. 2007; 27(1):93-94

3. Lara OD, Labrador MA. A survey on human activity recognition using wearable sensors. IEEE Communications Surveys & Tutorials. 2012; 15(3):1192-1209

4. Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJ. A survey of online activity recognition using mobile phones. Sensors. 2015; 15(1):2059-2085

5. Raad MW, Sheltami T, Soliman MA, Alrashed M. An RFID based activity of daily living for elderly with Alzheimer’s. In: Internet of Things (IoT) Technologies for HealthCare: 4th International Conference, HealthyIoT 2017; October 24-25, 2017; Angers, France, Proceedings 4. Angers, France: Springer; 2018. pp. 54-61

6. Cook DJ, Crandall AS, Thomas BL, Krishnan NC. CASAS: A smart home in a box. Computer. 2012; 46(7):62-69

7. Van Kasteren T, Englebienne G, Kröse BJ. Activity recognition using semi-Markov models on real world smart home datasets. Journal of Ambient Intelligence and Smart Environments. 2010; 2(3):311-325

8. van Kasteren T, Englebienne G, Kröse BJ, et al. Recognizing activities in multiple contexts using transfer learning. In: AAAI Fall Symposium: AI in Eldercare: New Solutions to Old Problems. Washington, DC, USA: Association for the Advancement of Artificial Intelligence; 2008. pp. 142-149

9. Roggen D, Calatroni A, Rossi M, Holleczek T, Förster K, Tröster G, et al. Collecting complex activity datasets in highly rich networked sensor environments. In: 2010 Seventh International Conference on Networked Sensing Systems (INSS). Kassel, Germany: IEEE; 2010. pp. 233-240

10. Banos O, Garcia R, Holgado-Terriza JA, Damas M, Pomares H, Rojas I, et al. mHealthDroid: A novel framework for agile development of mobile health applications. In: Ambient Assisted Living and Daily Activities: 6th International Work-Conference, IWAAL 2014; December 2-5, 2014; Belfast, UK, Proceedings 6. Belfast, United Kingdom: Springer; 2014. pp. 91-98

11. Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJ. Fusion of smartphone motion sensors for physical activity recognition. Sensors. 2014; 14(6):10146-10176

12. Zhang M, Sawchuk AA. USC-HAD: A daily activity dataset for ubiquitous activity recognition using wearable sensors. In: Proceedings of the 2012 ACM Conference on Ubiquitous Computing. Pittsburgh, PA, United States: Association for Computing Machinery; 2012. pp. 1036-1043

13. Bai L, Yeung C, Efstratiou C, Chikomo M. Motion2Vector: Unsupervised learning in human activity recognition using wrist-sensing data. In: Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers. London, United Kingdom: Association for Computing Machinery; 2019. pp. 537-542

14. Alemdar H, Ertan H, Incel OD, Ersoy C. ARAS human activity datasets in multiple homes with multiple residents. In: 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops. Venice, Italy: IEEE; 2013. pp. 232-235

15. Tapia EM, Intille SS, Larson K. Activity recognition in the home using simple and ubiquitous sensors. In: International Conference on Pervasive Computing. Vienna, Austria: Springer; 2004. pp. 158-175

16. Fahad LG, Tahir SF. Activity recognition in a smart home using local feature weighting and variants of nearest-neighbors classifiers. Journal of Ambient Intelligence and Humanized Computing. 2021; 12:2355-2364

17. Ferreira JM, Pires IM, Marques G, Garcia NM, Zdravevski E, Lameski P, et al. Activities of daily living and environment recognition using mobile devices: A comparative study. Electronics. 2020; 9(1):180

18. Li Y, Yang G, Su Z, Li S, Wang Y. Human activity recognition based on multienvironment sensor data. Information Fusion. 2023; 91:47-63

19. Sukor AA, Zakaria A, Rahim NA. Activity recognition using accelerometer sensor and machine learning classifiers. In: 2018 IEEE 14th International Colloquium on Signal Processing & its Applications (CSPA). Penang, Malaysia: IEEE; 2018. pp. 233-238

20. Zeng M, Nguyen LT, Yu B, Mengshoel OJ, Zhu J, Wu P, et al. Convolutional neural networks for human activity recognition using mobile sensors. In: 6th International Conference on Mobile Computing, Applications and Services. Austin, TX, United States: IEEE; 2014. pp. 197-205

21. Chen L, Hoey J, Nugent CD, Cook DJ, Yu Z. Sensor-based activity recognition. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews). 2012; 42(6):790-808

22. Donaj G, Maučec MS. Extension of HMM-based ADL recognition with Markov chains of activities and activity transition cost. IEEE Access. 2019; 7:130650-130662

23. Fleury A, Vacher M, Noury N. SVM-based multimodal classification of activities of daily living in health smart homes: Sensors, algorithms, and first experimental results. IEEE Transactions on Information Technology in Biomedicine. 2009; 14(2):274-283

24. Gochoo M, Tan T-H, Liu S-H, Jean F-R, Alnajjar FS, Huang S-C. Unobtrusive activity recognition of elderly people living alone using anonymous binary sensors and DCNN. IEEE Journal of Biomedical and Health Informatics. 2018; 23(2):693-702

25. Tan T-H, Gochoo M, Huang S-C, Liu Y-H, Liu S-H, Huang Y-F. Multi-resident activity recognition in a smart home using RGB activity image and DCNN. IEEE Sensors Journal. 2018; 18(23):9718-9727

26. Liciotti D, Bernardini M, Romeo L, Frontoni E. A sequential deep learning application for recognising human activities in smart homes. Neurocomputing. 2020; 396:501-513

27. Khowaja SA, Yahya BN, Lee S-L. Hierarchical classification method based on selective learning of slacked hierarchy for activity recognition systems. Expert Systems with Applications. 2017; 88:165-177

28. Ordónez FJ, De Toledo P, Sanchis A. Activity recognition using hybrid generative/discriminative models on home environments using binary sensors. Sensors. 2013; 13(5):5460-5477

29. Hachiya H, Sugiyama M, Ueda N. Importance-weighted least-squares probabilistic classifier for covariate shift adaptation with application to human activity recognition. Neurocomputing. 2012; 80:93-101

30. Du X, Farrahi K, Niranjan M. Transfer learning across human activities using a cascade neural network architecture. In: Proceedings of the 2019 ACM International Symposium on Wearable Computers. London, United Kingdom: Association for Computing Machinery; 2019. pp. 35-44

31. Hela S, Amel B, Badran R. Early anomaly detection in smart home: A causal association rule-based approach. Artificial Intelligence in Medicine. 2018; 91:57-71

32. Dhiman C, Vishwakarma DK. A review of state-of-the-art techniques for abnormal human activity recognition. Engineering Applications of Artificial Intelligence. 2019; 77:21-45

33. Riboni D, Civitarese G, Bettini C. Analysis of long-term abnormal behaviors for early detection of cognitive decline. In: 2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops). Sydney, Australia: IEEE; 2016. pp. 1-6

34. Sepesy Maučec M, Donaj G. Discovering daily activity patterns from sensor data sequences and activity sequences. Sensors. 2021; 21(20):6920

35. Sprint G, Cook DJ, Fritz R. Behavioral differences between subject groups identified using smart homes and change point detection. IEEE Journal of Biomedical and Health Informatics. 2020; 25(2):559-567

36. Ghods A, Caffrey K, Lin B, Fraga K, Fritz R, Schmitter-Edgecombe M, et al. Iterative design of visual analytics for a clinician-in-the-loop smart home. IEEE Journal of Biomedical and Health Informatics. 2018; 23(4):1742-1748

37. Zekri D, Delot T, Thilliez M, Lecomte S, Desertot M. A framework for detecting and analyzing behavior changes of elderly people over time using learning techniques. Sensors. 2020; 20(24):7112

38. Bouchabou D, Nguyen SM, Lohr C, LeDuc B, Kanellos I. A survey of human activity recognition in smart homes based on IoT sensors algorithms: Taxonomies, challenges, and opportunities with deep learning. Sensors. 2021; 21(18):6037