Angular sizes of various structures.

Abstract

We present a new model of cosmology based on the idea that the curvature of the universe varies with time. This model predicts a present-day exponential acceleration of the expansion of the universe without reference to a cosmological constant. We propose a new model of nucleosynthesis that accounts for the existence of cosmic structures, provides a solution to the matter/antimatter asymmetry problem, and explains the origin of the CMB. We show that this process was regulated by a vacuum imprint that came into existence during an initial Planck era inflation. One consequence is that all cosmic structures came into their final form with their present-day mass more or less simultaneously at a time of about

Keywords

- evolution of the universe

- inflation

- big bang nucleosynthesis

- cosmic microwave background

- origin of cosmic structures

- dark matter

- dark energy

- supermassive black holes

- baryonic acoustic oscillations

- Hubble tension

1. Introduction

It has long been thought that the

We will begin with a brief review of the assumptions underlying the standard model and contrast these with those of the new model. First, the standard model assumes that the universe is homogeneous and isotropic on large scales. In the new model, we also make that assumption, with one major difference: whereas the standard model asserts that the curvature of spacetime is constant, we assert that it varies with time, and the consequences are far-reaching. With time-varying curvature, the universe must undergo a present-day exponential acceleration of its expansion that has nothing to do with a cosmological constant. According to [1], one of the major problems of the

Another fundamental problem with

The second major assumption of the standard model is that the matter distribution of the early universe was uniform. The most striking feature of the universe we observe is its fantastic degree of organization on all scales, from stars up to the cosmic web. The standard model asserts that early quantum fluctuations seeded later accretions that became the structures, but this organization could not have been the result of random events, so some organizing principle must have been responsible. Further, we will see that the scale of the largest structures demands that they were defined in the absence of causality. The new model solves this problem by asserting that a blueprint that came into existence during an initial Planck-era inflation later regulated nucleosynthesis in such a manner as to create all the cosmic structures we now observe.

A third major assumption of the standard model is that all cosmic structures are a consequence of accretion. We will show instead that when vacuum energy is included in Einstein’s equations, accretive structure formation initiated by small perturbations is impossible. In the new model, the structures were produced by the imprint, which expressed itself by regulating the creation of matter. We go on to show that after nucleosynthesis, under the influence first of the expansion and later of gravitation, the initial matter distributions became fully formed stars, galaxies, galaxy clusters, and superclusters more or less simultaneously at around

Another major problem with the standard model is that it cannot explain the matter/antimatter asymmetry of the universe, whereas the new model can.

This chapter is a distillation of the results presented in the series of papers listed in the reference section. We ask the reader to refer to those papers for detailed discussions and for references to methods and data.

One final point is that this model should be considered as a whole rather than as a collection of disjoint pieces, as is the case with the standard model. The present-day Hubble constant, the present-day CMB temperature, and one other parameter associated with neutron decay fix all the parameters of the model. There are no other parameters and, unlike the situation with the standard model, only one evolution is possible.

2. Origins and the Planck era

We will begin with an observation concerning the CMB spectrum that will lead us back to the origins of everything. In spite of the claims of the standard model, superclusters are the only structures in existence large enough to be responsible for the large peaks of the CMB spectrum. In our original paper [3], we argued that superclusters were actually responsible, but we have since realized [4] that the process that created the superclusters simultaneously created the CMB spectrum, with peaks of the same physical dimensions. We will have more to say about this later, but the point here is that superclusters in some form must have been in existence at the time of recombination. We also know that the CMB spectrum peaks are vastly too large to be explained by any causal process. Moving backward from the time of recombination, and since nothing much happened between recombination and nucleosynthesis, it is natural to suppose that superclusters and the CMB spectrum originated during nucleosynthesis; indeed, their material manifestation did originate at that time. Because of causality, however, the blueprint that regulated their creation must have come into existence even earlier. The only possible conclusion is that, even though causality is a cornerstone of physics that applies to most of the history of the universe, there must have been an initial epoch during which it had no meaning. The final jump backward is to a Planck-era inflation.

Imagine that we want to measure the duration of some event. To do so, we need a clock whose ticks are shorter than the event, so to measure events of shorter and shorter duration, we must keep subdividing the ticks. We eventually reach a limit, which we take to be the Planck time, at which the ticks can no longer be subdivided. A consequence is that time becomes uncertain. The same idea holds for distance and energy as well. Since neither time nor distance had any exact meaning, causality had no meaning either, so during this epoch the constraints imposed by causality (and entropy) did not exist.

Such inflation by itself, however, does not explain superclusters or anything else, so some structure is needed. We concluded that large, smooth, acausal vacuum structures, or imprints, came into existence with relative dimensions ranging from those of stars up to those of superclusters and beyond. Further, we show in Section 18 that the distribution of these imprints was fractal with a dimension of about 1.1. The problem then is to account for the existence of these imprints. Given the extremely short period involved and the lack of causality, no complicated dynamics could have been involved. That the distribution has a fractal dimension suggests that self-similarity was in some way responsible.
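To make the notion of a fractal dimension concrete, the following Python sketch estimates a box-counting dimension for a textbook self-similar set, the middle-thirds Cantor set, whose exact dimension is log 2 / log 3 ≈ 0.63. It is only an illustration of how such a dimension is measured; the value of about 1.1 quoted above refers to the imprint distribution treated in Section 18, and the method used there may differ. The function names are hypothetical.

```python
import math
from itertools import product


def cantor_points(level):
    """Left endpoints of the 2**level intervals of the middle-thirds Cantor set,
    stored as integers in units of 3**-level (exact integer arithmetic avoids
    floating-point errors at box boundaries)."""
    points = []
    for digits in product((0, 2), repeat=level):
        value = 0
        for d in digits:  # base-3 number whose digits are all 0 or 2
            value = value * 3 + d
        points.append(value)
    return points


def box_count(points, level, k):
    """Number of boxes of width 3**-k that contain at least one point."""
    width = 3 ** (level - k)  # box width expressed in units of 3**-level
    return len({p // width for p in points})


def box_counting_dimension(points, level, scales):
    """Least-squares slope of log N(eps) against log(1/eps), with eps = 3**-k."""
    xs = [k * math.log(3) for k in scales]
    ys = [math.log(box_count(points, level, k)) for k in scales]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


points = cantor_points(10)
dimension = box_counting_dimension(points, 10, range(1, 11))
print(f"estimated dimension: {dimension:.4f}")  # exact value is log 2 / log 3
```

For a measured point distribution, the same slope fit would be applied to box counts taken over a range of scales where the counts follow a power law.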

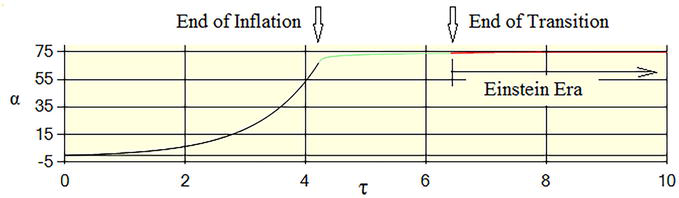

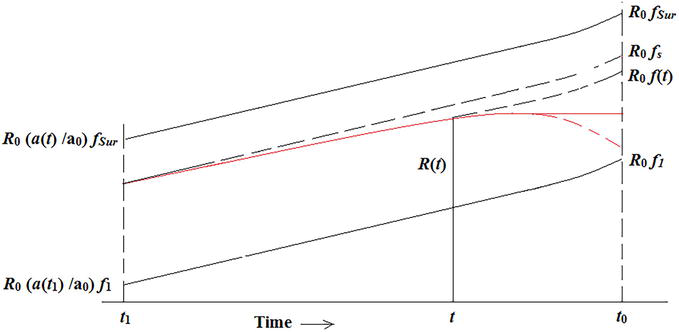

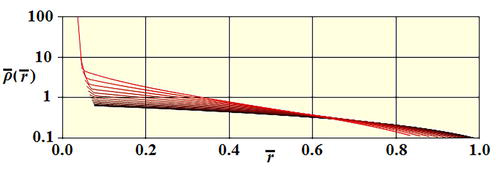

In Figure 1, we summarize the situation. The scaling is defined by

Figure 1.

Initial evolution of the universe.

As we will see in the next section, in the new solution of Einstein’s equations the scaling of the universe is entirely a consequence of the vacuum, and since the model contains no free parameters, we can work backward from the present day to fix the value of the scaling at the end of the transition.

We recognize that there is no direct observational evidence that inflation existed and, in fact, there never can be for the simple reason that there was nothing to observe before nucleosynthesis. The indirect evidence for inflation, on the other hand, is overwhelming given the assumption that the Big Bang expansion began from a Planck-sized beginning. The fact that the new model solution must join onto the scaling at the end of the transition not only constrains the magnitude of the inflation, it also forces the conclusion that there was an inflation. In fact, without the inflation, the present-day size of the universe would be a small fraction of a meter.

Any discussion of a Planck era eventually raises the point that the Planck dimensions are simply dimensional constructs based on the values of certain physical constants, and it would be a fantastic coincidence if the actual Planck dimensions were the same as the constructs. However, a possible resolution to this dilemma is achieved if we turn the Planck dimension formulas around, giving us the origin of the physical constants, e.g.
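The Planck dimension formulas are the standard combinations of ħ, G, and c. The Python sketch below evaluates them with CODATA 2018 values and then turns them around, recovering c, G, and ħ from the Planck time, length, and mass, which is the direction of inference suggested above. The round trip is purely algebraic: it illustrates the inversion, not any physical mechanism, and the function names are hypothetical.

```python
import math

# CODATA 2018 values in SI units
HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # Newtonian constant of gravitation, m^3 kg^-1 s^-2
C = 299792458.0          # speed of light in vacuum, m s^-1 (exact)


def planck_units(hbar, g, c):
    """Planck time, length, mass, and energy built from hbar, G, and c."""
    t_p = math.sqrt(hbar * g / c**5)
    l_p = math.sqrt(hbar * g / c**3)
    m_p = math.sqrt(hbar * c / g)
    return t_p, l_p, m_p, m_p * c**2


def constants_from_planck(t_p, l_p, m_p):
    """Invert the Planck formulas: recover hbar, G, and c from t_P, l_P, m_P."""
    c = l_p / t_p
    g = l_p**3 / (m_p * t_p**2)
    hbar = m_p * l_p**2 / t_p
    return hbar, g, c


t_p, l_p, m_p, e_p = planck_units(HBAR, G, C)
print(f"t_P = {t_p:.4e} s, l_P = {l_p:.4e} m, m_P = {m_p:.4e} kg, E_P = {e_p:.4e} J")
print(constants_from_planck(t_p, l_p, m_p))  # reproduces (HBAR, G, C) to rounding error
```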

3. A new model of the Einstein era

By the end of the transition period, the uncertainties would have become negligible and normal causality would have come into play. The development of the new model, [3], begins with the idea that the universe consists of a sequence of hyperspheres each of which is homogeneous and isotropic (the

We now need to connect these hyperspheres with Einstein’s equations. Given the assumptions of homogeneity and isotropy, the hyperspheres can have no preferred origin, and all their properties depend only on time. Our perception of the universe, on the other hand, is concerned with signals, causality, and so on, and these depend on both time and distance as described by Einstein’s equations. The question, then, is how to reconcile the equations that describe our perception with a sequence of hyperspheres that have no notion of an origin or distance. The answer is that Einstein’s equations describe the universe from the viewpoint of each observer. But a hypersphere is simply the collection of all possible observer origins, so Einstein’s equations become the equations that describe the hypersphere when evaluated at

In the standard model, the additional assumption is made that not only are the hyperspheres homogeneous and isotropic but that the universe must

Putting these ideas together, the metric becomes,

The second part of the new model concerns the vacuum energy density. Instead of the standard model concept of a vacuum described by

After working out Einstein’s equations and taking the limit as

where

(see Ref. [3] for the omitted definitions). We see that the scaling is power-law for

which is related to the vacuum energy density and pressure by

The sum is thus a fixed function of time,

Note that there is no direct relationship between the scaling and the energy density or pressure. The present-day acceleration of the scaling is, in fact, a kinematic constraint that follows directly from the time variation of the curvature and the presence of the vacuum energy in the energy-momentum tensor. It has nothing to do with a cosmological constant or so-called dark energy. This situation is completely different from that of the standard model based on the FRW solution of Einstein’s equations. The latter does not actually predict anything based solely on being a solution to the equations. By making choices about various parameters, it is possible to predict any sort of evolution one cares to see. In the new model that is not the case. There is one solution and

Both the energy density and the pressure contain a constant term that one might be tempted to associate with a cosmological constant. If we must assign a meaning to these constants, they are the values of the vacuum energy and pressure at infinite time. They have no physical consequences, however, and can be removed by simply adding a constant term to the energy-momentum tensor. Physical quantities such as the curvature depend on the sum rather than on the energy or pressure individually, and in the sum the constant terms cancel.
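The cancellation of the constant terms can be written out schematically. The symbols ρ_∞, ρ̃, and p̃ below are our own notation, not that of Ref. [3]; we assume units with c = 1 so that energy density and pressure add directly, and, since the constants cancel in the sum, that they enter the two quantities with opposite signs.

$$
\rho_v(t) = \rho_\infty + \tilde{\rho}(t), \qquad
p_v(t) = -\rho_\infty + \tilde{p}(t)
\quad\Longrightarrow\quad
\rho_v(t) + p_v(t) = \tilde{\rho}(t) + \tilde{p}(t).
$$

Only the sum on the right enters quantities such as the curvature, so ρ_∞ drops out.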

Aside from the present-day size and age of the universe, we need the value of the scaling at two different times to fix the parameters of the model. For one, we use a present-day value of the Hubble constant. There is some uncertainty about its value but, as we will show later, a consensus of

There appears to be one remaining parameter, namely

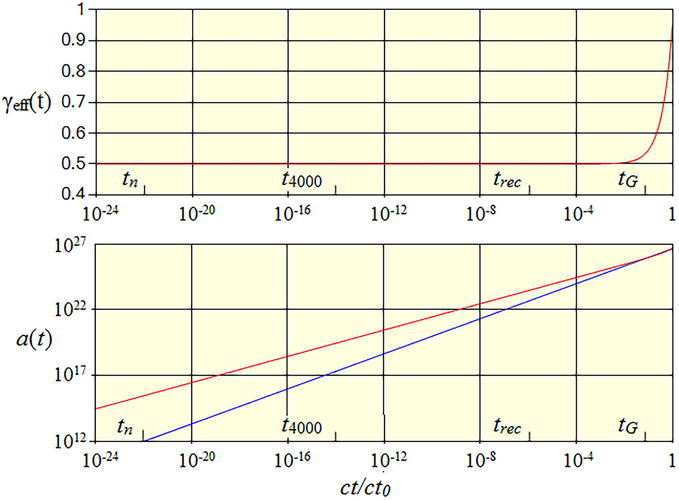

Everything is now fixed and unambiguous predictions can be made. We define an effective scaling parameter by

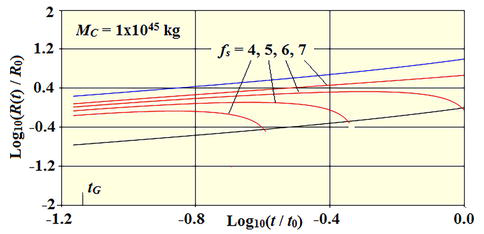

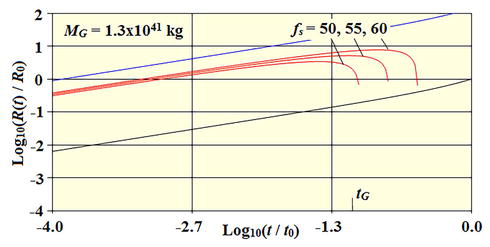

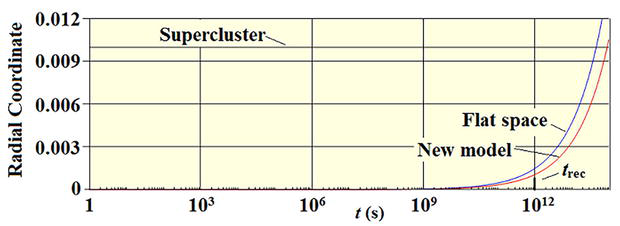

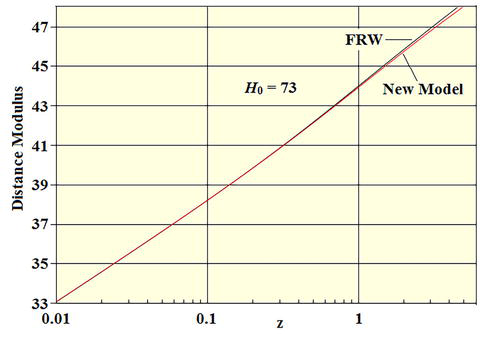

Figure 2.

The time-varying curvature predictions are shown in red. For comparison, the curve for

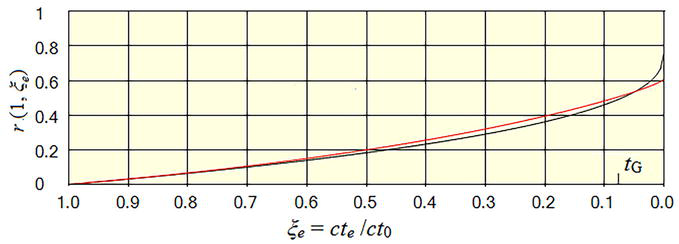

In Figure 3, we show the coordinate distance of sources whose signals are received at the present, plotted as a function of the lookback time. Both time-varying and constant curvature cases are shown. The two curves are similar for small lookback times but differ considerably for large redshifts, which illustrates the point we made earlier about the universe not appearing homogeneous and isotropic. In particular, with time-varying curvature, there is a fundamental limitation on our ability to detect distant sources. No matter how far back in time we look, we cannot see sources with coordinate distances greater than about

Figure 3.

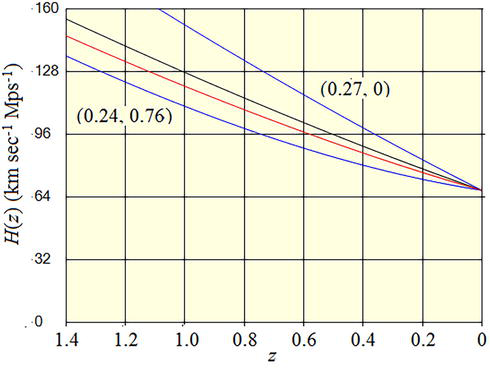

The redshift dependence of the Hubble parameter,

Figure 4.

Hubble parameter vs. redshift. Time-varying curvature in red, constant curvature in black. The FRW results for two values of the densities are shown in blue. The latter were normalized to the value of the new model curve at

Finally, the model predicts luminosity distances of various sources that agree with observation, but we will postpone that discussion until we reach the section concerned with the Hubble tension problem.

Up to this point, we have only considered the vacuum, but eventually particles permeated space, so we need to consider their interaction with the vacuum energy density. On the LHS of Einstein’s equations, the connection coefficients depend only on the metric components, and these have no dependence on either the vacuum energy density or pressure. On the RHS, since the particles can be assumed to be at rest, their density is simply added to the vacuum energy density. Ordinarily, after including a new term, we would need to re-solve the equations, but in this case the equations have not changed: the particle density simply becomes part of the vacuum energy sum, so the original solution still holds. This means that any small variation in the particle density is immediately canceled by the corresponding variation in the vacuum needed to keep the sum unchanged. Since the gravitational field does not change, nearby matter does not detect any difference in the field, so no accretion occurs. When the vacuum energy is included, Einstein’s equations show that

We can now address the “coincidence” problem mentioned in the introduction. From Eq. (7), we find that the present-day vacuum energy density sum is

which differs from the value of the so-called dark energy density (

4. Nucleosynthesis

The next step is to account for the creation of ordinary matter. We begin by separating what is known from what is conjecture. Observations of galaxies allow the relative abundances of the light elements to be measured. Working backward in time, the abundances at the end of nucleosynthesis can then be estimated because the processes that occurred during the intervening period are known. The nucleosynthesis reactions are also known, so one can work backward again to determine the proton and neutron densities needed to account for the abundances of the light elements. We can also establish that the process began at a time of about

Here, we propose a much simpler model [3, 4] that leads to the same nucleosynthesis starting point but also accounts for the matter/antimatter asymmetry of the universe, the existence of the CMB, and the high density of neutrinos needed to establish the pre-nucleosynthesis proton/neutron abundance ratio. We start with the idea that nothing other than the vacuum existed prior to the time of nucleosynthesis and that, at that time, a very small percentage of the vacuum energy was converted into neutron/antineutron pairs with

Initially, we have neutrons and antineutrons but we also need protons. Our original idea was that weak interactions were responsible for the existence of the protons but that turned out to be wrong. The weak interactions did play a critical role but they were not responsible for the original population of protons. Instead, the protons and antiprotons were a consequence of baryonic charge exchange reactions, [4].

Starting with the neutrons and antineutrons, particle/antiparticle annihilation and charge exchange reactions proceeded at a very high rate creating, in addition to protons and antiprotons, a very high density of mesons, neutrinos and electrons along with the radiation that became the CMB. Ultimately, the small excess of initial neutrons resulted in the matter/antimatter asymmetry of the universe. This entire process was very fast and was completed

The key point about this process is that it was not random. Instead, it was regulated by the Planck era imprint that we spoke of earlier. This imprint caused an increase relative to the average in the number of neutrons and antineutrons in the regions that became the cosmic structures and a decrease in the regions that became the voids.

For this to work, there must have been a universal violation of baryon number conservation, because the same excess had to occur everywhere and so cannot be the result of some random process. In fact, to account for the matter/antimatter asymmetry, some such violation must be introduced no matter what creation model is assumed. Indications of such a violation in connection with neutrons have been known for a long time. The evidence, known as the neutron enigma, comes from experiments conducted to determine the lifetime of the neutron. The problem is that different values result depending on the design of the experiment (see the references in [3]). Experiments that detect the protons resulting from neutron decay give one value, and experiments that count the total number of neutrons remaining in a container as a function of time give another. The difference is evidence that some of the neutrons simply disappear without leaving behind any daughter particles.
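The size of the discrepancy between the two classes of experiment can be turned into a rough disappearance fraction. The Python sketch below assumes that the neutron-counting ("bottle") lifetime measures the total loss rate, while the proton-counting ("beam") lifetime measures only decays that yield a proton. The inputs, roughly 879 s and 888 s, are rounded illustrative values for the two classes of measurement rather than a fit to any particular data set, and the function name is hypothetical.

```python
def invisible_fraction(tau_bottle, tau_beam):
    """Fraction of neutron losses that produce no proton.

    Assumes 1/tau_bottle is the total loss rate and 1/tau_beam is the
    partial rate of decays that yield a proton (both lifetimes in seconds)."""
    gamma_total = 1.0 / tau_bottle
    gamma_proton = 1.0 / tau_beam
    return (gamma_total - gamma_proton) / gamma_total


# Approximate, rounded lifetimes in seconds (illustrative inputs only)
fraction = invisible_fraction(tau_bottle=879.0, tau_beam=888.0)
print(f"{100 * fraction:.2f}% of losses leave no proton")
```

Under these assumptions, a lifetime difference of about 1% translates directly into a disappearance fraction of about 1%.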

Referring now to the problems listed in [1], there are several that can now be settled. There are several supposed anomalies with the CMB spectrum that are again artifacts of the

We will discuss this initial stage of nucleosynthesis more fully in a later section but to appreciate the details of this process, we first need to examine the subsequent development of cosmic structures.

5. The origin of cosmic structures

Skipping over the details of nucleosynthesis proper, we will now consider the problem of how the proton gas that resulted from nucleosynthesis became the structures we now observe. The filament structure that defines superclusters was fixed by the nucleosynthesis process discussed above and because the filaments are vastly too large to undergo gravitational evolution, they are much the same today as they were initially. The components that make up the filaments such as galaxy clusters, on the other hand, did evolve.

To study the initial evolution of all smaller cosmic structures, we consider the motion of a particle lying at the outer edge of a volume containing the mass that eventually became the structure in question [5]. The only accelerations acting on the particle were those due to gravitation and to the expansion of the universe, so the equation of motion of the particle becomes

The coordinate

is the effective mass of the structure adjusted for the presence of the background particle density.

The coordinates are illustrated in Figure 5.

Figure 5.

Coordinate system for structure evolution.
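The equation of motion itself is not reproduced here, so the following Python sketch is a deliberately reduced stand-in: it keeps only the Newtonian pull of the enclosed mass and drops the expansion term, in units with GM = 1 and an initial radius of 1. Its purpose is to show how a ZVP, which we read as the zero-velocity (turnaround) point where the outward radial velocity first vanishes, can be located with a fixed-step fourth-order Runge-Kutta integration. For this reduced problem the result can be checked against the analytic turnaround radius 1/(1 - v0**2/2). The function name `find_zvp` is hypothetical.

```python
import math


def find_zvp(v0, dt=1e-4, max_steps=10_000_000):
    """Integrate r'' = -1/r**2 (units G*M = 1, r(0) = 1, r'(0) = v0) with RK4.

    Returns (t_zvp, r_zvp), the time and radius at which the radial velocity
    first reaches zero. The expansion term is omitted in this simplified model."""
    if v0 ** 2 >= 2.0:
        raise ValueError("v0 must be below the escape speed sqrt(2) to turn around")

    def accel(r):
        return -1.0 / r ** 2

    t, r, v = 0.0, 1.0, v0
    for _ in range(max_steps):
        # Classical fourth-order Runge-Kutta step for (r' = v, v' = accel(r))
        k1r, k1v = v, accel(r)
        k2r, k2v = v + 0.5 * dt * k1v, accel(r + 0.5 * dt * k1r)
        k3r, k3v = v + 0.5 * dt * k2v, accel(r + 0.5 * dt * k2r)
        k4r, k4v = v + dt * k3v, accel(r + dt * k3r)
        r_new = r + dt / 6.0 * (k1r + 2 * k2r + 2 * k3r + k4r)
        v_new = v + dt / 6.0 * (k1v + 2 * k2v + 2 * k3v + k4v)
        if v_new <= 0.0:
            # Linear interpolation to the instant where v = 0
            frac = v / (v - v_new)
            return t + frac * dt, r + frac * (r_new - r)
        t, r, v = t + dt, r_new, v_new
    raise RuntimeError("no turnaround within max_steps")


t_zvp, r_zvp = find_zvp(v0=1.0)
print(t_zvp, r_zvp)  # analytic values for v0 = 1: t = pi/2 + 1, r = 2
```

Adding an expansion term would only change `accel`; the turnaround search itself stays the same.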

If we consider a particle located at the radius of the volume needed to form any structure from the background density, nothing would happen because the density would be the same everywhere. The corresponding multiplier is

We will first consider the evolution of a galaxy cluster. In all cases, we imagine that the particles were created at rest, so their initial recession velocity was fixed by the expansion. According to the accretion model of structure formation, structures could not come into existence until there was something to accrete, which we take to mean that the starting time would be the generally accepted time of galaxy formation. In Figure 6, we show the model results for several values of initial over-density. The blue line is the solution for

Figure 6.

Solution for four values of the starting position with

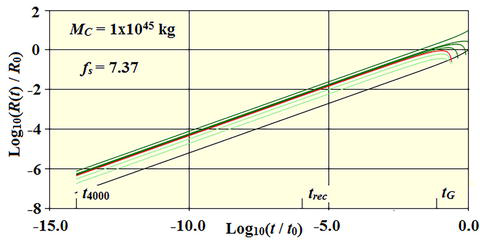

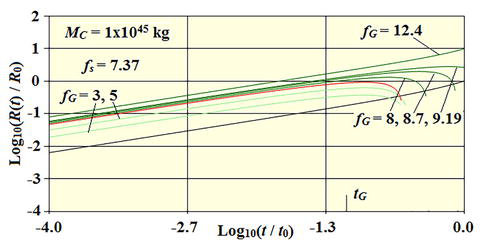

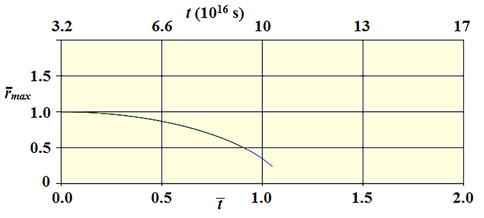

Since we have argued earlier that all structures came into existence at the time of nucleosynthesis with more or less their final mass, we recalculate the evolution beginning at the time that nucleosynthesis proper ended, or about

Figure 7.

Solution with

Figure 8.

Detail view of

The value

6. Galaxy evolution

We will now apply the same model to galaxies, [5]. The procedure is the same as for clusters. Taking the Milky Way as our example, the outer surface parameter is

Figure 9.

Galaxy evolution for three values of

A value of

At this point, we emphasize that in this new model of structure formation, all structures came into existence at roughly the same time with essentially all their present-day mass. Clusters had most of their complement of galaxies, and galaxies had a good supply of stars. This result is completely at odds with what is expected from the accretion model of galaxy formation, in which the earliest galaxies would have been fragmentary rather than fully formed.

7. Stellar evolution

We will next move on to the stars, [5]. Although stars are extremely dense compared to everything else, that density is a consequence of their collapse. At the time of nucleosynthesis, their imprint density was only slightly larger than that of their host galaxies. For our model, we assume an initial nominal dimension of

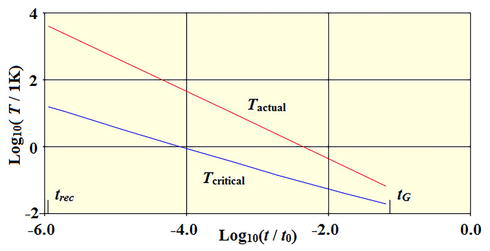

According to the Jeans model of star formation, a star can form only if the sum of its potential and kinetic energies is less than zero, which results in a critical temperature of

where R is the radius of the cloud at the ZVP. Before

Figure 10.

Comparison of the actual and critical temperatures.
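For orientation, one common textbook form of this energy criterion treats the cloud as a uniform sphere of mass M and radius R, with gravitational energy -3GM²/(5R) and thermal energy (3/2)Nk_BT, where N = M/m̄ and m̄ is the mean particle mass. Setting their sum to zero gives T_crit = 2Gm̄M/(5k_BR). The prefactor depends on these simplifying assumptions and may differ from the expression used in the text. The Python sketch below simply evaluates this form; the function name and the default of m̄ equal to the proton mass are our assumptions.

```python
# SI constants (CODATA 2018)
G = 6.67430e-11               # m^3 kg^-1 s^-2
K_B = 1.380649e-23            # J K^-1 (exact)
M_PROTON = 1.67262192369e-27  # kg


def critical_temperature(mass_kg, radius_m, mean_particle_mass=M_PROTON):
    """Temperature at which (3/2) N k T equals (3/5) G M^2 / R for a uniform
    sphere; below it the total (thermal + gravitational) energy is negative."""
    return 2.0 * G * mass_kg * mean_particle_mass / (5.0 * K_B * radius_m)


M_SUN = 1.98847e30   # kg
PARSEC = 3.0857e16   # m
# Hypothetical example: one solar mass spread over a sphere of radius 1 pc
print(f"T_crit = {critical_temperature(M_SUN, PARSEC):.3f} K")
```

Because T_crit scales as M/R, a cloud that is still extended near its ZVP has a very low critical temperature, which is why the comparison in Figure 10 depends on the cloud's evolving radius.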

We find that the gas temperature did not reach the critical value until about

8. Galaxy and galaxy cluster stability

We have now established that, aside from superclusters, all structures reached their ZVPs at approximately the same time and with essentially all their present-day mass. The problem is that, without some process to prevent it, both galaxies and galaxy clusters would have undergone the gravitational free-fall collapse indicated in the figures. (The stars did undergo collapse, so we are not concerned about them.) The reason that galaxies and clusters did not collapse was that their constituent gas was rapidly heated very soon after they reached their ZVPs [7].

To model the dynamics, we used a modified form of the usual hydrodynamic equations. We assumed spherical symmetry and adopted the Lagrangian viewpoint. The final equations in terms of dimensionless coordinates are as follows:

We have defined a dimensionless time,

where

and have defined an adjusted density by

The independent spatial coordinate,

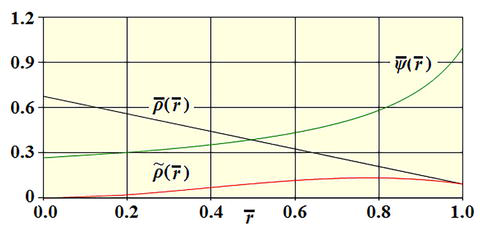

We note that these equations do not contain any dimensionless structure-dependent parameters so the solutions apply to both galaxies and clusters. Initially, the gas had a temperature of at most a few K, and because we are starting at the ZVP,

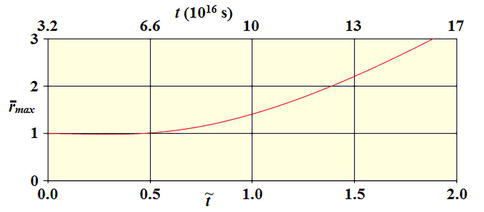

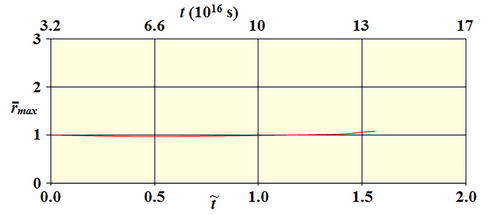

We will first consider the evolution of clusters. To establish the time scale of the problem, Figure 11 shows the evolution of a cluster in the absence of any heating; we find that the clusters must have been stabilized very soon after the ZVP.

Figure 11.

Cluster free-fall collapse.
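A conventional reference scale for such an unheated collapse is the free-fall time of a uniform, pressureless sphere, t_ff = sqrt(3π/(32Gρ)). The Python sketch below evaluates it both from a mean density and from a mass and radius and confirms that the two forms agree. It is a standard benchmark, not the hydrodynamic model used for Figure 11; the function names and the cluster-scale numbers are hypothetical.

```python
import math

G = 6.67430e-11  # m^3 kg^-1 s^-2


def free_fall_time(density):
    """Collapse time (s) of a uniform, pressureless sphere of mean density rho (kg m^-3)."""
    return math.sqrt(3.0 * math.pi / (32.0 * G * density))


def free_fall_time_from_mass(mass, radius):
    """Same quantity written as (pi/2) * sqrt(R^3 / (2 G M))."""
    return 0.5 * math.pi * math.sqrt(radius ** 3 / (2.0 * G * mass))


# Hypothetical cluster-scale numbers: 1e15 solar masses inside a 3 Mpc radius
M_SUN, MPC, GYR = 1.98847e30, 3.0857e22, 3.156e16
mass, radius = 1e15 * M_SUN, 3.0 * MPC
rho = mass / (4.0 / 3.0 * math.pi * radius ** 3)
print(f"t_ff = {free_fall_time(rho) / GYR:.2f} Gyr")
```

Since t_ff scales as ρ^(-1/2), any heating must act on a time short compared with this scale once the density starts to rise.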

We have two problems to solve. The first is to determine what combinations of initial density and radiation result in a cluster that neither collapses nor evaporates. The second is to identify the source of the radiation. We soon discovered that with a uniform initial density profile, clusters would have collapsed no matter what temperature profile was assumed. The same is true if the profile is too sharply peaked at the origin. We tried several initial density profiles and determined that the profile must have a moderate negative slope. An example is the curve labeled

Figure 12.

Linear density profile with negative slope.

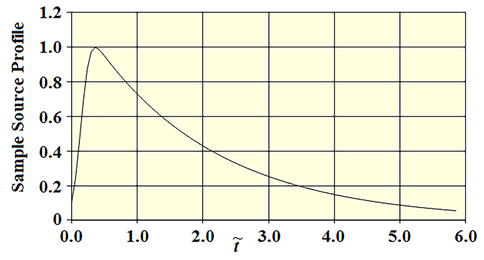

We next consider the heating. We quickly determined that compressive heating develops far too slowly to prevent the collapse, which means that a source of radiation must have been responsible. We therefore needed to assume a radiation source profile, and our first try was the broad profile shown in Figure 13. The sample profile has a maximum value of unity, which is then scaled by a multiplier in the simulation code. With a multiplier of five, the resulting size of the cluster is shown in Figure 14. We find that, while a significant amount of radiation is necessary to prevent the collapse, it must also be short-lived; otherwise, the cluster would have evaporated.

Figure 13.

Broad radiation profile.

Figure 14.

Solution with the density and radiation profiles of

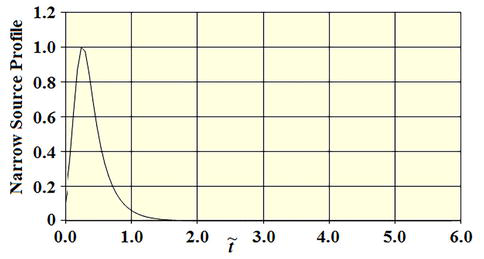

For our next try, we assumed the narrow profile shown in Figure 15 and obtained the evolution shown in Figure 16. (Narrow is a relative term, the width of the peak still amounts to

Figure 15.

Narrow radiation profile.

Figure 16.

Solution with narrow radiation profile and a multiplier of 6.

The actual peak radiation intensity is given by

The final problem is to identify the source of this radiation. We need a huge power output and, even more importantly, a significant lifespan; active galactic nuclei, in other words quasars, are the only real possibility. Power outputs from large quasars are on the order of

To have quasars, however, we must have supermassive black holes. We discovered earlier that galaxies would have undergone gravitational free-fall collapse soon after reaching their ZVP unless something prevented that from happening. The galaxies reached their ZVP with sizes several times larger than their present-day size and soon after did begin to collapse. Initially, the gas making up the galaxy was cold and, as was the case of the clusters, compressive heating would not have stopped the collapse. We now assert that during this contraction, all galaxies formed supermassive black holes together with accretion disks. In Figure 17, we show a calculated sequence of density profiles showing the initial phase of the collapse. We find that the density increases extremely rapidly at the origin creating the conditions necessary for the formation of a black hole and that it does so long before there is any significant reduction in the radius of the galaxy.
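One standard way to see why a supermassive black hole is needed is the Eddington limit: the luminosity at which radiation pressure on electrons balances gravity on protons in fully ionized hydrogen, L_Edd = 4πGMm_p c/σ_T, which grows linearly with the mass M. The Python sketch below evaluates it. This bound is textbook physics rather than part of the model described here, and the function name is hypothetical.

```python
import math

G = 6.67430e-11               # m^3 kg^-1 s^-2
C = 299792458.0               # m s^-1
M_PROTON = 1.67262192369e-27  # kg
SIGMA_T = 6.6524587321e-29    # Thomson cross-section, m^2
M_SUN = 1.98847e30            # kg


def eddington_luminosity(mass_kg):
    """Maximum steady luminosity (W) of an accreting object of the given mass,
    assuming spherical accretion of fully ionized hydrogen."""
    return 4.0 * math.pi * G * mass_kg * M_PROTON * C / SIGMA_T


for solar_masses in (1.0, 1e6, 1e9):
    print(f"{solar_masses:.0e} M_sun -> {eddington_luminosity(solar_masses * M_SUN):.2e} W")
```

Sustaining quasar-level power near this limit therefore requires a central mass of millions to billions of solar masses.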

Figure 17.

Density profiles during the initial phase of galaxy collapse.

As the collapse continued, an accretion disk must have formed, and the resulting radiation heated the galactic gas, thereby stopping the collapse. The key point is that

Much of that radiation would have escaped the galaxy, and where galaxies were located inside a cluster, it would have heated the cluster gas. The Virgo cluster contains a large number of galaxies, most of which are dwarf ellipticals. Studies cited in [7] found, first, that quasar host galaxies are all early-type galaxies (ETGs), the bulk being ellipticals, and second, that dwarf ellipticals do have active nuclei. The Virgo cluster does not contain a quasar at present, but it is characteristic of active nuclei that they radiate huge amounts of energy until their supply of accretion material runs out, after which they become normal galaxies. The conclusion is that clusters were heated from within by a large number of mini-quasars that collectively used up their accretion supplies within the time scale indicated in Figure 15.

9. Nucleosynthesis again

We will now return to the nucleosynthesis pair production process that we introduced earlier. To study the evolution, we created a simulation model that included neutrons, protons, electrons, neutrinos, photons, and their antiparticles. We then solved the coupled reaction rate equations to determine the evolution, [4].
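A deliberately minimal version of such rate equations already shows how a small initial excess of neutrons survives annihilation. The Python sketch below keeps only the single reaction n + n̄ → products, with a constant, hypothetical rate coefficient k in dimensionless units, integrates dn/dt = dn̄/dt = -k n n̄ with a fixed-step Runge-Kutta scheme, and compares the result with the closed-form solution. It ignores charge exchange, protons, and all product species, so it is not the simulation of Ref. [4]; the function names are hypothetical.

```python
import math


def annihilate(n0, nbar0, k=1.0, t_end=200.0, dt=0.01):
    """Integrate dn/dt = dnbar/dt = -k * n * nbar with classical RK4.

    Both species are destroyed at the same rate, so the excess n - nbar is
    conserved. Returns (n, nbar) at t_end (dimensionless units)."""
    def rate(n, nbar):
        return -k * n * nbar

    n, nbar = n0, nbar0
    for _ in range(int(round(t_end / dt))):
        k1 = rate(n, nbar)
        k2 = rate(n + 0.5 * dt * k1, nbar + 0.5 * dt * k1)
        k3 = rate(n + 0.5 * dt * k2, nbar + 0.5 * dt * k2)
        k4 = rate(n + dt * k3, nbar + dt * k3)
        step = dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        n, nbar = n + step, nbar + step
    return n, nbar


def nbar_exact(n0, nbar0, k, t):
    """Closed-form antineutron density for the same single-reaction model."""
    delta = n0 - nbar0
    decay = math.exp(-k * delta * t)
    return delta * nbar0 * decay / (n0 - nbar0 * decay)


n_final, nbar_final = annihilate(n0=1.1, nbar0=1.0)
print(n_final, nbar_final)  # n approaches the initial excess 0.1, nbar approaches 0
```

Once the antineutrons are nearly gone, their remaining density decays as exp(-k Δ t), so the smaller the excess Δ, the longer the annihilation tail.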

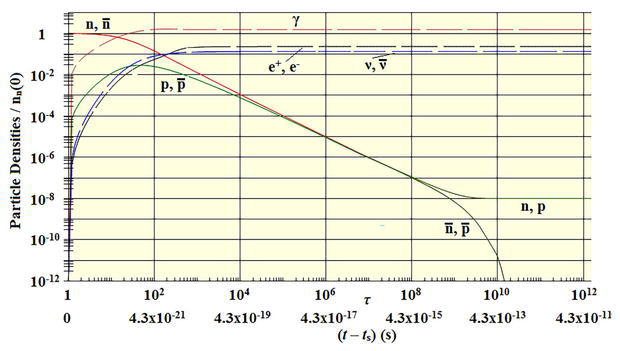

As we discussed earlier, at a time of about

(We have indicated the final states of the annihilation reactions but these actually pass through an intermediate meson state that eventually decays into the photons and leptons.) In Figure 18, we show the results for an initial density of

Figure 18.

Initial stage of nucleosynthesis.

The simulation was primarily concerned with the baryon annihilations, and the baryon curves are correct. The details of the product particle distributions, on the other hand, were of only secondary interest at the time, and because we needed to keep the simulation manageable, we did not take proper account of the meson branching ratios or the meson lifetimes; this is why the lepton and photon curves are shown dashed.
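Because a charged pion decays to a muon, which then decays to an electron or positron plus neutrinos, the lepton populations lag well behind the annihilations. The Python sketch below treats this as a two-step radioactive chain and evaluates the standard Bateman solution for the probability that the chain has reached its final charged lepton by time t. The mean lifetimes are rounded standard values (about 2.6 × 10⁻⁸ s for π± and 2.2 × 10⁻⁶ s for μ±); the neutral pion, with a lifetime below 10⁻¹⁶ s, is effectively instantaneous by comparison. The function name is hypothetical, and the sketch ignores everything except free decay.

```python
import math

TAU_PION = 2.6033e-8   # charged-pion mean lifetime, s
TAU_MUON = 2.1970e-6   # muon mean lifetime, s


def lepton_fraction(t, tau_pion=TAU_PION, tau_muon=TAU_MUON):
    """Probability that a pi -> mu -> e chain started at t = 0 has completed by
    time t (two-step Bateman solution with decay constants 1/tau)."""
    lam_p, lam_m = 1.0 / tau_pion, 1.0 / tau_muon
    return 1.0 - (lam_m * math.exp(-lam_p * t) - lam_p * math.exp(-lam_m * t)) / (lam_m - lam_p)


for t in (1e-9, 1e-8, 1e-7, 1e-6, 1e-5):
    print(f"t = {t:.0e} s: completed fraction = {lepton_fraction(t):.3e}")
```

The completed fraction stays tiny until t is comparable to the muon lifetime, which is the sense in which the combined lifetime exceeds short simulation time scales.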

The annihilation decay products are primarily pions. Neutral pions decay into two photons with a relatively short mean lifetime, so labeling the curve as photons is a fair representation of the actual situation. Charged pions, on the other hand, decay into muons, which subsequently decay into electrons or positrons plus neutrinos, with a combined mean lifetime much larger than the time scale of the figure. That being the case, the curves labeled

One of our assumptions was that this process began at the point when the vacuum energy density equaled the equivalent mass energy of a neutron. Using a value of

The number density of photons produced was on the order of

The number density of neutrinos/antineutrinos was also large,

10. CMB anisotropy

We have just seen that the model can account for the existence of radiation with a temperature corresponding to that of the CMB. To understand the anisotropy, recall that departures of the matter densities from the background value were necessary to account for the development of all cosmic structures. For example, the Virgo cluster density excess was 1.35 and that of the Milky Way was 17. To achieve those excesses, the vacuum pair production rate must have been correspondingly larger by the same factor. Conversely, in the void regions, it must have been smaller. But the number of photons produced varies in direct proportion to the initial particle density, as does the total energy of the radiation. Since the temperature of blackbody radiation varies as

We find then that

Referring back to the previous section, the simulation was carried forward to the point at which the baryonic annihilations were complete. Large numbers of photons, electrons, and neutrinos were then in existence, and the counts of the protons and neutrons were equal. The next step is to carry the evolution forward to the start of nucleosynthesis proper. During this period, the spectra of the radiation and particles became blackbody, and the weak interactions adjusted the proton/neutron ratio to 80/20. At the end of the annihilation phase, although the reaction rates among the newly created particles, photons, and neutrinos were very high, they were all in equilibrium, so no net energy was being transferred. The radiation spectrum, for example, was static. What is missing from the simulation are reactions that transfer energy away from the protons; until those energies were much lower, the weak interactions remained symmetric and no adjustment of the proton/neutron ratio could occur.
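As a rough guide to the energy scale involved, suppose the weak interactions hold the neutron/proton ratio at its Boltzmann equilibrium value, n/p = exp(-Q/k_BT), where Q ≈ 1.293 MeV is the neutron-proton mass difference. A proton/neutron ratio of 80/20 then corresponds to a definite temperature. The Python sketch below inverts this relation. It neglects chemical potentials and the departure from equilibrium at freeze-out, so it indicates a scale rather than reproducing the model's evolution, and the function names are hypothetical.

```python
import math

Q_MEV = 1.29333             # neutron-proton mass difference, MeV
K_B_MEV = 8.617333262e-11   # Boltzmann constant, MeV K^-1


def equilibrium_ratio(temperature_k):
    """Neutron/proton number ratio in Boltzmann equilibrium at temperature T (K)."""
    return math.exp(-Q_MEV / (K_B_MEV * temperature_k))


def temperature_for_ratio(n_over_p):
    """Temperature (K) at which the equilibrium n/p ratio equals n_over_p (< 1)."""
    return Q_MEV / (K_B_MEV * math.log(1.0 / n_over_p))


t_star = temperature_for_ratio(20.0 / 80.0)
print(f"k_B T = {K_B_MEV * t_star:.3f} MeV, T = {t_star:.3e} K")
```

Under this assumption, the 80/20 ratio corresponds to k_BT of a little under 1 MeV.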

To model this phase of the evolution, we must first take into account the annihilation meson branching ratios and the meson mean lifetimes mentioned earlier and then include the known deep inelastic baryon reactions in which one or more mesons are produced. An example is the set of reactions

11. The connection between the CMB and superclusters

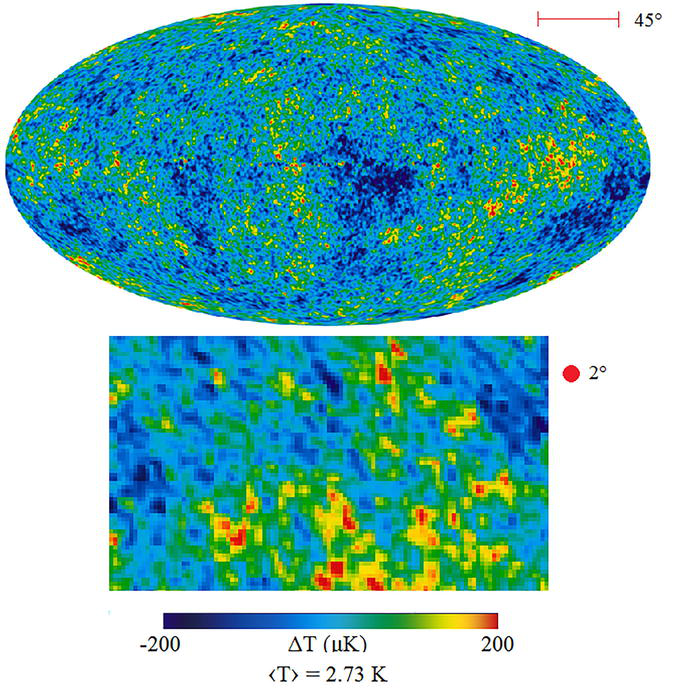

Figure 19 shows the well-known CMB anisotropy map. A portion of the map has been enlarged in the lower rectangle and two angular size references are also included.

Figure 19.

CMB anisotropy.

The CMB we receive was emitted by a spherical shell whose radius is fixed by the coordinate distance of Figure 3 when evaluated at the time of recombination, [3]. Thus,

An important property of the vacuum metric is that there are no off-diagonal components linking time and the angular coordinates, which means that the angle between two approaching signals does not change. This being the case, we can move this equation forward to the present day,

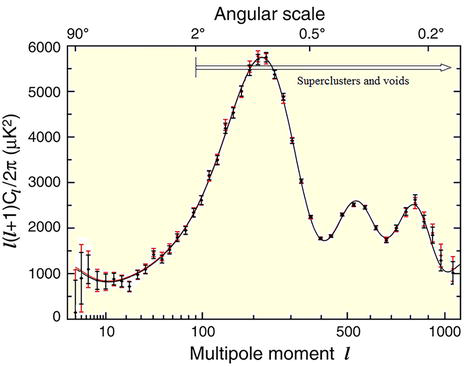

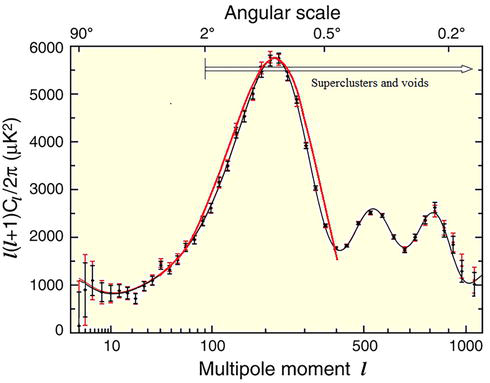

In Figure 20, we see that the first peak of the CMB spectrum has an angular size of about 1°.
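Two small-angle conversions help when reading the angles in this section and in Table 1. The sketch assumes that a structure of comoving size $s$ at comoving (coordinate) distance $D$ subtends $\theta \approx s/D$ radians. This is consistent with the statement above that angles are preserved. It then uses the common rule of thumb $\ell \approx 180^\circ/\theta$ to relate an angle to a multipole moment. Both helpers are hypothetical, and the paper's own angle-multipole relation (Figure 22) may differ in detail.

```python
import math


def angular_size_deg(size: float, distance: float) -> float:
    """Small-angle size in degrees; size and distance in the same (comoving) units."""
    return math.degrees(size / distance)


def multipole_from_angle(theta_deg: float) -> float:
    """Rule-of-thumb multipole moment for an angular scale theta (degrees)."""
    return 180.0 / theta_deg


# A feature of about 1 degree corresponds to a multipole of roughly 180.
print(multipole_from_angle(1.0))
```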

Figure 20.

CMB spectrum.

In Table 1, we show the angular sizes of various structures and we see that superclusters (and voids) are the only structures large enough to be associated with the peak.

| Object | Angular size (deg) |
|---|---|
| Milky Way | 0.0001 |
| Groups | 0.007–0.013 |
| Clusters | 0.013–0.065 |
| Superclusters | 0.2–2.0 |
| Voids | 0.6–1.6 |
| Extreme structures | > 45 |

Table 1. Angular sizes of various structures.

In Figure 21, we show a plot of 71 known superclusters and voids. Using the Gaussian distribution shown, we performed a statistical calculation to determine the spectrum with the result shown in Figure 22.
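The text does not spell out the statistical calculation. What follows is therefore only one simple way an ensemble average of this kind could be formed, under stated assumptions:

- Structure angular sizes are drawn from a Gaussian. The mean of 1.0° and width of 0.3° are placeholders, not the fitted values of Figure 21.
- Each size is converted to a multipole with the rule of thumb $\ell \approx 180^\circ/\theta$.
- The resulting multipoles are histogrammed.

```python
import random
from collections import Counter


def ensemble_multipole_histogram(mean_deg, sigma_deg, n_samples=100_000, bin_width=20, seed=0):
    """Histogram of l = 180/theta for Gaussian-distributed angular sizes theta (degrees)."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_samples):
        theta = rng.gauss(mean_deg, sigma_deg)
        if theta <= 0.0:
            continue  # discard unphysical non-positive sizes from the Gaussian tail
        ell = 180.0 / theta
        counts[int(ell // bin_width) * bin_width] += 1  # key = lower edge of the bin
    return dict(sorted(counts.items()))


# Placeholder distribution: mean size 1.0 deg, width 0.3 deg (not the Figure 21 fit).
hist = ensemble_multipole_histogram(mean_deg=1.0, sigma_deg=0.3)
peak_bin = max(hist, key=hist.get)
print(f"most populated bin starts at l = {peak_bin}")
```

Because $\ell$ varies as $1/\theta$, the histogram has a long tail toward high $\ell$. Its most populated bin also lies somewhat below $180^\circ$ divided by the mean size.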

Figure 21.

Count of observed superclusters (red) and voids (blue).

Figure 22.

Ensemble average supercluster/void CMB spectrum. Angles are related to the moment by

We find that the position of the peak is correct. The predicted peak is slightly broader than the observed peak, but that is quite likely due to our assumption of spherical symmetry, which is certainly not the case. The magnitude of the peak was adjusted to match the observed peak and is not yet a prediction. We do, however, expect a prediction to result from the new simulation mentioned previously, which is still under development. The second peak does not correlate with the size of any structure, which is strong evidence that it is a consequence of multipole distributions within the superclusters and voids. Referring back to Figure 19, we see that the supercluster-sized structures have a range of temperatures, which supports that idea.

We remind the reader that even though the superclusters have the right size, it was not the superclusters per se that were responsible for the peak. Rather, the process that created the superclusters simultaneously created the CMB spectrum peaks with the same dimensions.

12. Baryonic acoustic oscillations: CMB

Since this bears on the CMB anisotropy problem, this is an appropriate point to show that the baryonic acoustic oscillation (BAO) model is wrong, [8]. We will first discuss the CMB issue and then the unrelated two-point galaxy correlation model. One of the principal failings of the BAO model is that its authors assumed from the beginning that there existed a uniform distribution of radiation that needed a spectrum. We have shown, however, that this idea is simply wrong: the radiation came into existence with its spectrum already in place.

The first argument against the BAO model is simply causality. In Figure 23, we show the coordinate of a photon emitted at the time of nucleosynthesis in comparison with the coordinate size of a supercluster, which we have shown has the same dimension as the spectrum peak. We see that by the time of recombination, the photon could only have traveled about 10% of the distance across the supercluster.

Figure 23.

BAO causality restriction.

The second argument is concerned with the energy required to create the peaks starting from an energy source that emitted acoustic waves at the time of nucleosynthesis. At present, the relative magnitude of the CMB anisotropy is

Also, at that time the average size of a supercluster was

The conclusion is that

13. Baryonic acoustic oscillations: Galaxy correlation function

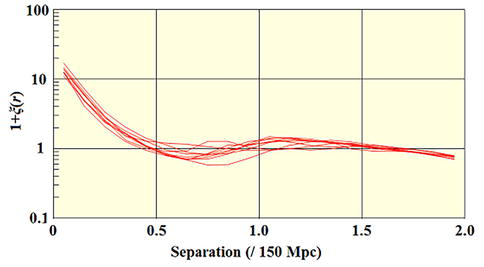

The second phenomenon attributed to BAO is the bump observed in the two-particle galaxy correlation function at a separation of

To test this idea, we built a simulation based on a cubic grid with edge lengths initially set to the average size of a supercluster. Using Gaussian statistics, we randomized the ends of the edges both in magnitude and direction according to the size distribution of superclusters. Next, we assigned a random number of galaxies to each edge with random offsets scaled by the width of a supercluster and finally, calculated the correlation function of the resulting distribution. The set of galaxies along the center grid line formed our reference set. There are a number of estimators described in the literature. The one we used is the spherically symmetric estimator given by the following (see ref. [8]),

In this formula, the number of reference galaxies is

The result of running the simulation for a number of different random number sets is shown in Figure 24. While the model is perhaps not an accurate representation of the cosmic web, the result looks very much like the observed correlation function in shape and the peak appears at the correct distance.

Figure 24.

Two-particle galaxy correlation function.
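A toy version of the grid simulation described above can be sketched in a few dozen lines. The paper's estimator formula is not reproduced here, so this sketch makes a few substitutions:

- It uses the standard natural estimator $\xi = DD/RR - 1$ against a uniform random catalogue, not the reference-set estimator the text cites.
- It uses all galaxies rather than only those on the central grid line.
- The grid size, node jitter, galaxies per edge and filament width are hypothetical parameters, in units of the mean supercluster size.

```python
import bisect
import itertools
import math
import random


def build_web(n_nodes=3, spacing=1.0, jitter=0.15, per_edge=(5, 15), width=0.05, seed=1):
    """Scatter galaxies along the edges of a randomly jittered cubic grid.

    spacing stands in for the mean supercluster size; jitter and width are
    hypothetical Gaussian scatters for the grid nodes and for galaxies about an edge.
    """
    rng = random.Random(seed)
    nodes = {
        idx: tuple(spacing * i + rng.gauss(0.0, jitter) for i in idx)
        for idx in itertools.product(range(n_nodes), repeat=3)
    }
    galaxies = []
    for idx, start in nodes.items():
        for axis in range(3):
            neighbour = tuple(i + (1 if k == axis else 0) for k, i in enumerate(idx))
            if neighbour not in nodes:
                continue
            end = nodes[neighbour]
            for _ in range(rng.randint(*per_edge)):
                t = rng.random()  # random position along the edge
                galaxies.append(tuple(a + t * (b - a) + rng.gauss(0.0, width)
                                      for a, b in zip(start, end)))
    return galaxies


def pair_counts(points, bin_edges):
    """Histogram of all pair separations into the given bins."""
    counts = [0] * (len(bin_edges) - 1)
    for p, q in itertools.combinations(points, 2):
        k = bisect.bisect_right(bin_edges, math.dist(p, q)) - 1
        if 0 <= k < len(counts):
            counts[k] += 1
    return counts


def correlation_function(galaxies, bin_edges, seed=2):
    """Natural estimator xi = DD/RR - 1 against a uniform random catalogue of equal size."""
    rng = random.Random(seed)
    lo = [min(g[i] for g in galaxies) for i in range(3)]
    hi = [max(g[i] for g in galaxies) for i in range(3)]
    randoms = [tuple(rng.uniform(lo[i], hi[i]) for i in range(3)) for _ in galaxies]
    dd = pair_counts(galaxies, bin_edges)
    rr = pair_counts(randoms, bin_edges)
    # Equal catalogue sizes, so the ratio DD/RR needs no extra normalisation.
    return [d / r - 1.0 if r else float("nan") for d, r in zip(dd, rr)]


edges = [0.1 * k for k in range(21)]  # separations from 0 to 2 supercluster sizes
xi = correlation_function(build_web(), edges)
for r_lo, value in zip(edges, xi):
    print(f"{r_lo:4.1f}  {value:+.3f}")
```

In this toy version, any excess near one grid spacing comes from galaxies on adjacent and parallel edges. With so few nodes the curve is noisy. A larger grid gives a smoother result at the cost of the $O(N^2)$ pair count.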

During the past two decades, a great deal of effort has been spent on the galaxy correlation problem because of its potential as a standard ruler for measuring distances at redshifts beyond the supernova range. What we have just shown is that the idea is perfectly reasonable because the coordinate dimensions of the cosmic web do not change. In all the work that has been done, one need only replace the wording “baryonic acoustic oscillation” with “cosmic web.”

Taken together, we have shown that

14. The lithium problem

We noticed as part of our original development, [3], that certain reactions were not included in the standard BBN code and that when one in particular,

The second part of the puzzle is that the observations of the element abundances are not all taken from a single location. For example, deuterium abundances have been determined from line-of-sight observations out to distances of 100 parsecs with a result of

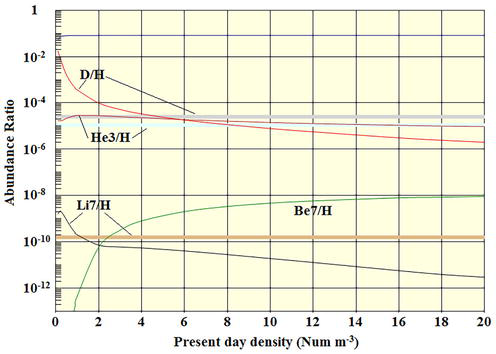

Figure 25.

Nucleosynthesis abundances as a function of the initial baryon density.

(The curves in this figure differ somewhat from those shown in [4]. The reasons for this are, first, that a value of

From this figure, we see that the

Considering now the deuterium and lithium abundances, we see that an initial baryon density of about 5 is necessary to match the accepted deuterium abundance but that a density of about 1 is necessary to account for the

We find that the new model with density variations can readily account for the observed abundances. At this point, however, we are not able to predict accurately the detailed initial density distribution of the Milky Way-to-be. The lithium problem is not a problem at all.

Aside from our not yet being able to predict with any accuracy the initial density profiles of the structures-to-be, an additional significant issue is that many of the needed reaction cross sections are not known with great accuracy. Most of the needed low-energy cross sections were measured many decades ago, and soon after, experimentalists moved on to higher energies. Our simulations show that choosing one data set over another can result in significant changes to the abundance curves. Our runs do show, however, that the general pattern shown in the figure remains the same. There have been no recent efforts to improve the situation, and additional experiments are needed to fill in the gaps and resolve differences between existing cross-section determinations.

15. Rotation

A long-standing, although generally neglected, problem in astrophysics is the source of the rotation of cosmic structures. All galaxies and some galaxy clusters rotate. Even though galaxies are independent entities, their rotations are not random, as is evidenced by the Tully-Fisher relationship. We spoke at the beginning about the high degree of organization that exists in the universe, and the rotation of galaxies is an example of that.

The standard model idea is that the infalling material during the accretion phase of galaxy development somehow managed to start the rotation. That, however, is a highly unlikely scenario because random events do not result in organized development and they certainly could not explain the correlation between the rotation rates of different galaxies.

Suppose that the rotation was initiated at the time of nucleosynthesis and that it was a matter of material undergoing normal orbital motion. In that case, the rotation would have slowed as the universe expanded. But starting from the present-day rotation rates and working backward, we find that the initial velocities would have been orders of magnitude greater than the speed of light, which is impossible. Another problem with the accretion idea is that, as we have seen, the outer regions of developing structures had no knowledge of the center until very late in their evolution. This means that organizing the material into normal orbital motion could not have begun until after the structure had evolved beyond the causality limit. By that time, however, no process could have started the entire structure rotating.
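The back-extrapolation argument above can be made concrete under explicit assumptions, which are ours rather than the paper's:

- Material in normal orbital motion conserves its specific angular momentum $v r$.
- Its orbital radius grows with the scale factor $a$ before collapse, so $v \propto 1/a$.
- The present-day speed is 220 km/s, a typical spiral-galaxy value.
- The expansion factor since nucleosynthesis is $10^9$. This is a placeholder of roughly the standard-cosmology order, not a number taken from the new model.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def initial_speed(v_now_km_s: float, expansion_factor: float) -> float:
    """Back-extrapolate an orbital speed assuming v*r is conserved while r grows with a.

    expansion_factor is a(now)/a(then); under this assumption v scales as 1/a.
    """
    return v_now_km_s * expansion_factor


v0 = initial_speed(220.0, 1e9)  # hypothetical inputs: 220 km/s today, expansion factor 1e9
print(f"{v0:.2e} km/s = {v0 / C_KM_S:.1e} c")
```

Under these assumptions the speed exceeds $c$ for any expansion factor above about 1400. Even a factor several orders of magnitude smaller than the placeholder would therefore still give a superluminal result.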

All this indicates that the rotation is primordial. Since the rotation cannot be explained in terms of material motion, the only other possibility is that the vacuum imprint was rotating and the matter and antimatter created by the vacuum were simply carried along by the rotating vacuum. This brings us to the reality of dark matter.

16. Dark matter

Dark matter was originally proposed to explain the motion of galaxies in galaxy clusters and later to explain the velocity distribution of the stars in spiral galaxies. Since then, it has become something of a catch-all to fix up the calculations of various cosmic phenomena that seem to defy a simple explanation.

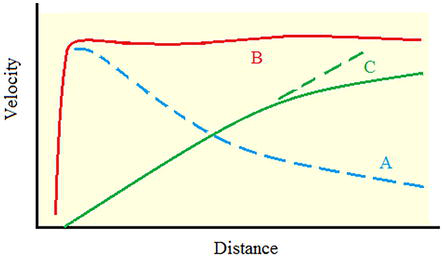

One of the manifestations of dark matter concerns the velocity distribution of stars in spiral galaxies and the gas making up HI rings, [3, 6]. The spiral galaxy problem is illustrated by the curves in Figure 26.

Figure 26.

Typical spiral galactic velocity distribution.

The problem is that the observed distribution, curve B, does not match the expected distribution, curve A. The generally accepted solution for this problem is to suppose there is a halo of dark

Turning to Einstein’s equations, it is reasonable to model spiral galaxies using a stationary axisymmetric metric,

with an energy-momentum tensor of the form

A small volume of the vacuum will respond to the total gravitation field in the same way as a material particle which means that we can analyze its motion using the usual geodesic equations. The first two of these are satisfied identically as a consequence of our assumption of a stationary metric. The remaining two have the solution

where

We now consider the stars, whose velocities must also satisfy the geodesic equations. We separate their motion into a component that is at rest in the vacuum, and hence without angular momentum, and a residual with normal orbital motion,

which exhibits the behavior shown in Figure 26. On the left is the normal orbital rotation, curve A, the first term on the right is the constant velocity component, curve B, and the second is the rigid body vacuum rotation, curve C. At the outer edge of the galaxy, the constant stellar velocity

This result dovetails quite nicely with the rotation model discussed in the previous section. In that case, the vacuum rotation carried along the material and, in this case, the material appears to drag along the vacuum but the result is the same. The vacuum rotation is a consequence of the Planck era imprint but it is likely that the residual orbital motion did not begin until after the ZVPs were reached and the galaxies began to collapse.

Another problem mentioned in [1] is known as the “angular momentum catastrophe.” The idea behind this theory is that the development of disk galaxies, and in particular their sizes, can be understood in terms of their angular momenta [9]. The problem is that the theory does not work: the predicted sizes are an order of magnitude too small. The theory makes a number of assumptions that we do not accept. One is the idea that galaxy rotation is a consequence of tidal torques between neighboring galaxies instead of being primordial. We reject this because gravitation had no effect on the evolution of galaxies until very shortly before they began their initial collapse. It certainly could not have exerted enough torque on neighboring galaxies to start them rotating within the short time interval prior to their ZVP. All galaxies rotate, and given the variations in their separations and orientations, it is highly unlikely that this mechanism could account for their rotations. The reason for putting these comments in this section, however, concerns the assumption that the angular momentum of the stars increases with distance from the center of the galaxy. In the new model, the exact opposite is true: the outer stars in a disk are carried along by the rotation of the vacuum and have no angular momentum at all.

In the references, we discuss other cases in which vacuum energy is shown to account for phenomena attributed to dark matter. Summing up,

17. Hubble tension

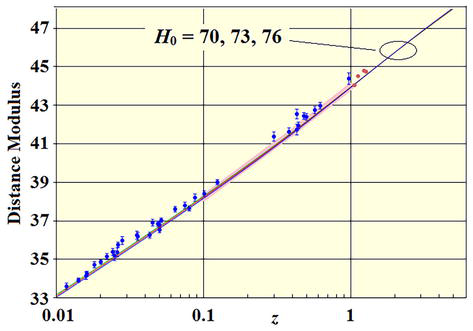

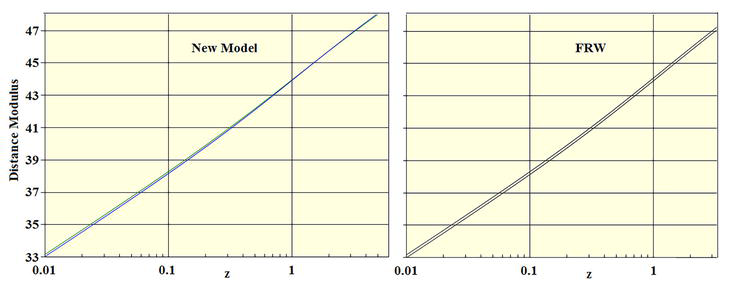

During the past two decades or so, a great deal of effort has gone into pinning down the value of the Hubble constant. The various methods can be grouped according to the redshift range involved. First are those based on observations in the range from about 0.01 to an upper limit of 6 or so. The significance of the lower limit is that it is the smallest redshift at which the recession velocities of galaxies have a reasonable probability of being significantly larger than their peculiar velocities. The upper limit simply corresponds to the time of galaxy formation. The Hubble constant determined by methods covering this range is settling on a value of

In Figure 27, we show new model luminosity distance modulus predictions for 3 values of the Hubble constant. As we see, there is only a very slight dependence on the Hubble constant. It is important to appreciate that the new model has no other adjustable parameters. Since varying the Hubble constant changes the result hardly at all, a failure to fit the data would be a real problem for the model. The model predictions appear to lie a little below the data points, but the figure shows only a small subset of the data points now in existence. When the newer points are included, the new model fit is extremely good.
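For reference, the distance modulus plotted in Figure 27 is related to the luminosity distance by the standard definition $\mu = 5\log_{10}(d_L/10\,\mathrm{pc})$. The new model's $d_L(z)$ is not reproduced in this section. The sketch therefore substitutes the low-redshift Hubble-law approximation $d_L \approx cz/H_0$, purely to show the bookkeeping. The function names are hypothetical.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def distance_modulus(d_lum_mpc: float) -> float:
    """mu = 5 log10(d_L / 10 pc), with d_L in Mpc (10 pc = 1e-5 Mpc)."""
    return 5.0 * math.log10(d_lum_mpc) + 25.0


def low_z_luminosity_distance(z: float, h0: float) -> float:
    """Hubble-law approximation d_L ≈ c z / H0 in Mpc; valid only for z << 1."""
    return C_KM_S * z / h0


for h0 in (67.0, 70.0, 73.0):
    print(h0, round(distance_modulus(low_z_luminosity_distance(0.01, h0)), 3))
```

In this approximation, changing $H_0$ shifts $\mu$ by the constant $5\log_{10}(H_{0,1}/H_{0,2})$. That is about 0.19 mag between 67 and 73 km/s/Mpc. The actual sensitivity in the new model depends on its own $d_L(z)$.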

Figure 27.

Luminosity distance predictions of the new model. Refer to [

We now compare with the

Figure 28.

Model and FRW predictions for

The Riess SH0ES group finds a value of

Figure 29.

New model (red) and FRW (black) predictions for

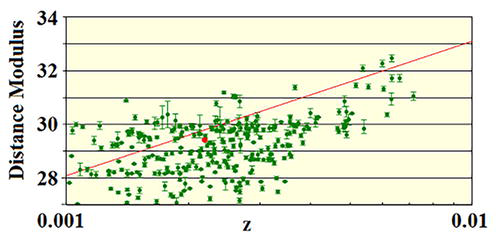

The second grouping of methods covers the redshift range from 0.01 down to some lower cutoff, which we will take to be 0.001. The overriding factor in this redshift range is that the peculiar velocities of galaxies are as large as or larger than their recession velocities. The best-known results covering this redshift range are those of the Tip of the Red Giant Branch (TRGB) group. They claim that their data result in a Hubble constant of
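A one-line estimate shows why peculiar velocities dominate at these low redshifts. Suppose a galaxy at distance $d$ has recession velocity $H_0 d$ plus a line-of-sight peculiar velocity $v_p$. The naive ratio of observed velocity to distance is then $H_0 + v_p/d$. The inputs below are illustrative assumptions only: a true $H_0 = 70$ km/s/Mpc and $v_p = 300$ km/s.

```python
def apparent_hubble_constant(distance_mpc, h0_true, v_pec_km_s):
    """Naive H0 = (observed velocity) / distance when a peculiar velocity is present."""
    return (h0_true * distance_mpc + v_pec_km_s) / distance_mpc


# Hypothetical inputs: true H0 = 70 km/s/Mpc, peculiar velocity 300 km/s.
for d in (5.0, 20.0, 100.0, 400.0):
    print(f"d = {d:5.0f} Mpc -> apparent H0 = {apparent_hubble_constant(d, 70.0, 300.0):.1f}")
```

The bias falls off as $1/d$. A modest error in the peculiar-velocity correction therefore matters most for the nearest galaxies.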

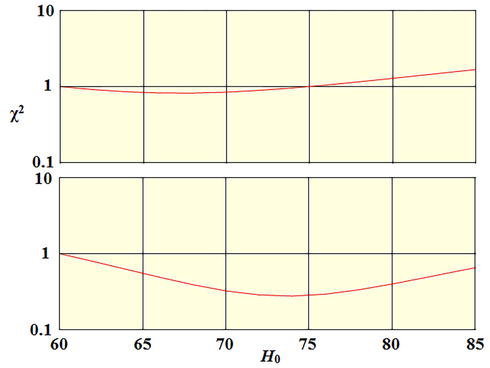

In [8], we did a fit using

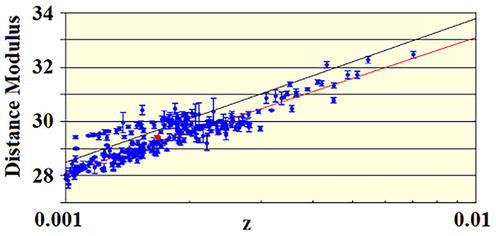

In Figure 30, we show the raw data points and in Figure 31, the same set corrected for the peculiar velocities using the probabilistic model. The red line is the new model prediction. The difference between the two distributions makes it obvious that the peculiar velocities are large so any prediction made is highly dependent on the accuracy of the correction.

Figure 30.

TRGB raw data points.

Figure 31.

TRGB data points adjusted for peculiar velocities.

If the model were completely accurate, all the adjusted data points would lie along a single line. Since they do not, there is obviously a problem. We note that the new model prediction gives a good fit to what appears to be the correctly adjusted galaxies. In Figure 32, we show normalized

Figure 32.

The third redshift grouping, commonly referred to as cosmological, groups together those methods covering redshifts greater than that of galaxy formation. The BAO model of the CMB spectrum falls under this heading and it is the only such model making a Hubble constant prediction. The consensus value is

There are other approaches such as the Megamaser and

There is one further approach that has the potential to become a viable method for determining the Hubble constant. The idea is to measure the rate of recession of bodies within the solar system. At present, there is considerable interest in the Earth-Moon distance because accurate distance measurements spanning more than 50 years already exist. Calculating the Hubble flow contribution to the total recession rate is very simple, and the result shows that the rate is well within a measurable range. The difficulty is that all the other contributors to the Moon’s recession, in particular the tidal effects, must be determined with a combined error of no more than 1 or 2 percent before a Hubble determination can be made.

Planetary recession offers other opportunities. Instead of the 3.8 cm per year recession of the Moon, the rates would be 10 meters per year or more, depending on the planet. Measuring the distances becomes more difficult, but, on the plus side, the planets are much less subject to the large tidal effects that make the lunar determination difficult.
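The simple calculation mentioned above can be sketched as $v = H_0 d$. This takes as given the text's premise that the Hubble flow acts on solar-system distances. The inputs are our own choices for illustration: $H_0 = 70$ km/s/Mpc and the mean Earth-Moon and Earth-Sun distances. The helper name is hypothetical.

```python
MPC_M = 3.0857e22   # metres per megaparsec
YEAR_S = 3.15576e7  # seconds per Julian year


def hubble_recession_m_per_yr(distance_m: float, h0_km_s_mpc: float = 70.0) -> float:
    """Hubble-flow recession rate v = H0 * d, returned in metres per year."""
    h0_per_s = h0_km_s_mpc * 1e3 / MPC_M  # convert km/s/Mpc to 1/s
    return h0_per_s * distance_m * YEAR_S


for name, d in [("Moon (from Earth)", 3.844e8), ("Earth (from Sun)", 1.496e11)]:
    print(f"{name}: {hubble_recession_m_per_yr(d):.3g} m/yr")
```

With these inputs, the lunar term comes out at a few centimeters per year, comparable to the observed 3.8 cm per year total. The Earth-Sun term is about 10 meters per year, in line with the figure quoted above.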

Summing up, of the two principal contributors to the tension, one is just wrong and the other did not include the entire data set or properly make allowances for errors in the peculiar velocity prediction model. When the spurious values are removed, the results yield the same value as that of the larger z studies. The conclusion is that

18. Tying things together

We have shown that accretion initiated by small fluctuations in an otherwise uniform distribution of ordinary matter is impossible. We also showed that gravitation was ineffective until shortly before

This, however, leaves us with the question of what organized the imprint. It could not have been some random process so there must have been some rule that regulated its development. We know that causality played no part in the development and that whatever the rule was, it must have been extremely simple. In terms relative to the size of the expanding universe, the imprint structures ranged from the size of a star up to the size of superclusters with the latter being nearly as large as the universe at the end of the inflation and the beginning of the transition. The energy densities of these structures, on the other hand, were on the order of

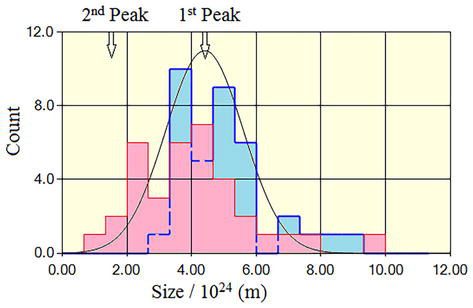

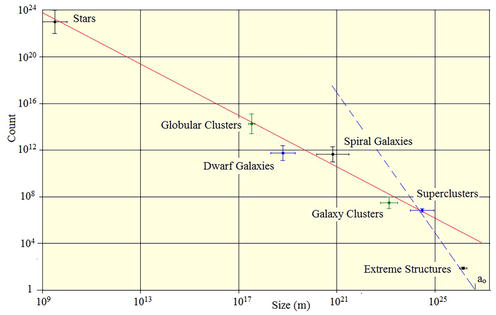

In Figure 33, we show a plot of the count of cosmic structures versus their size, [3].

Figure 33.

Count of structures vs. size.

What is remarkable is that aside from the extreme structures, all cosmic structures with their vast differences in size and numbers lie on a power-law curve and this holds all the way down to the stars. The extreme structures (size

where we have scaled by the average size of a supercluster. The dashed line shows the count of structures of a given size that would fill the universe and its formula is

We see that superclusters fall on both lines so, in an order of magnitude sense, they fill all space.

The significant factor in the second formula is the power of three. The idea of a fractal dimension extends this concept to situations in which the power can take any value, not just an integer. This is exactly the form of Eq. (32), so we find that the structure imprint had a fractal dimension of about 1.1. It is generally true that the fractal dimension of a system is larger than its geometric dimension, so it follows that the cosmic structure must be 1-dimensional or, in other words, that the

A defining property of fractal structures is self-similarity and this suggests that self-similarity was in some way the defining principle that regulated the formation of the imprint.
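An exponent such as the 1.1 quoted above is typically read off as the slope of a straight-line fit in log-log coordinates. The sketch below fits $\log N$ against $\log s$ by ordinary least squares and returns minus the slope. The inputs are synthetic counts generated from $N \propto s^{-1.1}$ and from the space-filling law $N \propto s^{-3}$, not the observed data of Figure 33. The function name is hypothetical.

```python
import math


def power_law_exponent(sizes, counts):
    """Return D in N ∝ s^(-D) from a least-squares fit of log10 N against log10 s."""
    xs = [math.log10(s) for s in sizes]
    ys = [math.log10(c) for c in counts]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return -slope


# Sizes scaled by the average supercluster size, from 1e-6 up to 1.
sizes = [10.0 ** k for k in range(-6, 1)]
imprint = [s ** -1.1 for s in sizes]      # synthetic counts with exponent 1.1
space_filling = [s ** -3 for s in sizes]  # counts that would fill a 3-D volume
print(power_law_exponent(sizes, imprint), power_law_exponent(sizes, space_filling))
```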

19. Conclusions

We have summarized the results contained in a series of papers published during the past 3 years. A new model of cosmology based on a universe with time-varying curvature is presented that makes a large number of predictions that agree with observation. This model includes a new model of nucleosynthesis that accounts for the existence of all cosmic structures and the CMB, the origin of galactic supermassive black holes, and the stability of galaxy clusters. It also explains the existence of very large galaxies at a time at which the standard model says they could not yet exist.

We have shown that the problems with the standard model boil down to three incorrect assumptions and one omission. The first incorrect assumption is that the curvature of spacetime is constant, the second is that the initial distribution of matter was uniform, and the third is that structure formation was a result of accretion. When those assumptions are abandoned and vacuum energy is included in the energy-momentum tensor, everything falls into place.

Here at the end, we are back at the beginning with two problems to solve. The first is to understand the evolution of the Planck era inflation. We have no clear idea of how the fractal imprint came into existence but we have demonstrated that it was responsible for the universe we observe. The second problem is to understand the breaking of the baryon conservation law that results in the creation of the very small percentage of extra neutrons at the time of nucleosynthesis.

References

1. Perivolaropoulos L, Skara F. Challenges for ΛCDM: An update. arXiv:2105.05208v3. 6 Apr 2022.

2. Abdalla E, et al. Cosmology intertwined: A review of the particle physics, astrophysics, and cosmology associated with the cosmological tensions and anomalies. arXiv:2203.06142v1. 2022.

3. Botke JC. A different cosmology - thoughts from outside the box. Journal of High Energy Physics, Gravitation and Cosmology. July 2020; 6 (3):473-566.

4. Botke JC. The origin of cosmic structures Part 4 - Nucleosynthesis. Journal of High Energy Physics, Gravitation and Cosmology. 2022; 8 (3):768-799.

5. Botke JC. The origin of cosmic structures Part 1 - Stars to superclusters. Journal of High Energy Physics, Gravitation and Cosmology. 2021; 7 (4):1373-1409.

6. Botke JC. The origin of cosmic structures Part 2 - HI rings. Journal of High Energy Physics, Gravitation and Cosmology. 2021; 7 (4):1410-1424.

7. Botke JC. The origin of cosmic structures Part 3 - Supermassive black holes and galaxy cluster evolution. Journal of High Energy Physics, Gravitation and Cosmology. 2022; 8 (2):345-371.

8. Botke JC. The origin of cosmic structures Part 5 - Resolution of the Hubble tension problem. Journal of High Energy Physics, Gravitation and Cosmology. 2023; 9 (1):60-82.

9. van den Bosch FC, Burkert A, Swaters RA. The angular momentum content of dwarf galaxies: New challenges for the theory of galaxy formation. Monthly Notices of the Royal Astronomical Society. 2001; 326 (3):1205-1215.