Abstract

Despite extensive research, it is still far from clear how exactly the vertebrate retina encodes the final color experience from its three types of color-sensitive sensory cells so that, via the visual brain centers, we experience the world as we see it. Two phenomena in particular have shaped our research in recent years. First, despite complex technical filter chains, the colors in camera images never looked the way we actually see them. Second, we experience the world more vividly than it appears in photographs. The latest generation of cell phone cameras produces quasi-plastic scenes, yet we have only two eyes, each of which creates this impression independently of the other. This can only mean that the retina processes images differently from the way we have implemented image processing technically so far. This paper therefore discusses a new image processing chain that produces “eye-like” images without complex filter architectures, using only one eye, and that works in a bionic way at the first layer of image analysis, the retina.

Keywords

- bionic models of retinal information processing

- color restoration

- robotic vision control

- opponent color theory

- 3-compartment model for On–Off-pathways

1. Introduction

Bionic applications first require that a number of preconditions be taken into account:

- Nature cannot count; that is, it does not know numbers at all.

- Nature tries to minimize information in order to find a solution as fast as possible.

- Natural systems in living organisms can be divided into two structural components: genetically structured subparts, which are unable to “learn”, and adaptively structured subparts, which enable an individual to adapt to its habitat as well as possible.

- Information processing in an individual is done chemically, electrically, or by a combination of both.

- Information processing is governed by excitation, inhibition, or a combination of both.

Information processing in living organisms is mostly realized by neurons of different types, which are surrounded either by other kinds of “helper and supporting” cells or by liquid. The information flow and the types of interacting neurons can be organized in a genetically predetermined way, or the interacting neurons can learn, in which case their adaptation to the information flow of the surrounding habitat is governed by a set of learning rules.

Furthermore, we have learned that neurons do not respond to an input signal with an “on/off signal” but with so-called spike trains, which can be interpreted as a kind of “singing” or melody; it is this property that gives them an almost unlimited information capacity.

And neurons have a threshold, which means that they can only “sing” if:

- their soma carries a positive potential;

- all dendritic input arrives at the soma in such a way that a stable potential is established for a short time, enabling the “construction” of an information-carrying spike train;

- the form and structure of this spike train can be understood by the downstream neuronal structures, especially when we are talking about genetically predetermined neural structures.

Neurons, even in genetically determined structures, cannot distinguish which kind of information source they are (momentarily) connected to.

Even though only a rudimentary list of bionic principles (that is, preconditions for imitating nature) is given here, it is still sufficient to understand and simulate how the vertebrate eye captures and codes information and transmits it retinotopically to the brain.

Next, we have to formulate some basic assumptions that will lead to an unconventional tool: an artificial algorithm that comes a little closer to the way nature performs vision-based information processing. Along the way we will show, sorry to say, that a number of still-common theories and methods are led ad absurdum.

First, let us look at some weak points of common theories and methods describing how visual processing works in nature:

- Yellow is the counterpart (opponent color) of blue.

- White is the arithmetic sum of the colors red, blue, and green.

Why are these two theoretical assumptions weak?

Well, we only have sensors for red, green, and blue, and yellow is the color at which the contributions of red and green are equal, but that is only one of the 19,000,000 possible colors we can distinguish. How, then, can we detect light reddish-yellow or light greenish-yellow colors?

And if white were the arithmetic sum of red, green, and blue, then red = 200, green = 100, and blue = 150 would produce the same impression of white as red = 100, green = 150, and blue = 200 (both sum to 450), which is definitely wrong.
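The arithmetic behind this objection is easy to check. The following C sketch implements only the additive model being criticized, with function names chosen here for illustration; it is not part of the authors' method. Both triples from the text collapse onto the same value of 450.

```c
#include <stdint.h>

/* The additive model criticized in the text: "white" as the plain sum
   R + G + B of an 8-bit triple (range 0..765). */
int additive_white(uint8_t r, uint8_t g, uint8_t b)
{
    return (int)r + (int)g + (int)b;
}

/* 1 if two different RGB triples receive the same additive "white" value,
   i.e. the model cannot tell them apart; 0 otherwise. */
int additive_model_confuses(const uint8_t p[3], const uint8_t q[3])
{
    int different = p[0] != q[0] || p[1] != q[1] || p[2] != q[2];
    return different &&
           additive_white(p[0], p[1], p[2]) == additive_white(q[0], q[1], q[2]);
}
```

Calling `additive_model_confuses` with {200, 100, 150} and {100, 150, 200} returns 1: two clearly different color stimuli become indistinguishable once they are reduced to a sum.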

Besides the question of how we can perceive contours and colors without any problems and independently of the illumination, it was exactly these two “small” contradictions that pushed us, some years ago, to start again from the beginning and define a more exact bionic theory of how the vertebrate optical system achieves robust contour and color processing.

2. Theoretical part

Let us start simply. As is known, we have four types of light-sensitive receptors: one most sensitive to red light, one most sensitive to green light, one most sensitive to blue light, and one that, in daylight vision, detects a kind of white between the blue and green bands, that is, a kind of illumination dimension. Furthermore, we have one kind of white/gray receptor that acts as an illumination sensor when it becomes too dark for the first four types of sensors.

Next, we have to take into account that these receptors, or their downstream cells, are connected to all kinds of other receptors in the retina, since the developing eye more or less builds connections from every neuron to the different bipolar cells. Bipolar cells are structured in such a way that they realize a center-surround architecture: horizontal cells, located immediately behind the photoreceptors, collect the activities of one class of photoreceptors and form a mixed potential from these contributions. This forms the surround component, which is supplied to the bipolar cell as the first contribution. The second contribution supplied to the bipolar cell is a mixed potential that lies in the middle of the surround area and is formed by only a few photoreceptors of one of the other color receptor types. This architecture yields the following color pairs in different bipolar cells:

- Red-Green

- Red-Blue

- Green-Blue

This means that the individual primary colors (red, green, and blue) do not exist as information units of their own in the bipolar cells, but only in combinations in which each color modulates the other, with the color impressions yellow, purple, and turquoise representing exactly the colors where this modulation is zero. Here also lies the crux of the opponent color theory. Blue as the opponent color of yellow would require the red-green component to be exactly yellow, which is extremely rarely the case; in short, we would only be able to see blue occasionally. At this point, a common misconception in the literature needs to be corrected, namely that the opponent color of blue is yellow. Rather, red and blue on the one hand, and green and blue on the other, modulate each other, which ultimately leads to the three modulation paths among the color-coding receptors and another three modulation paths between the cones and the white sensors. Putting all this together, we arrive at the first basic building block of our theory, shown in Figure 1.

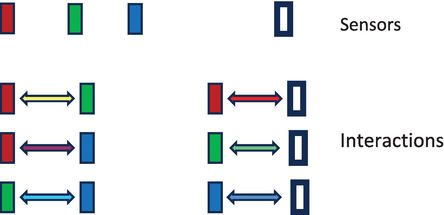

Figure 1.

At the top, our four receptor types are shown; below them, the six possible types of interaction among these receptors, realized by the first two layers of the retina.
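The count of six follows from pairing each of the four receptor types with every other type exactly once, 4 · 3 / 2 = 6. A minimal C sketch of that enumeration, using hypothetical labels `REC_R`, `REC_G`, `REC_B` and `REC_W` (W for the white/illumination receptor), might look like this:

```c
#include <stddef.h>

/* Receptor types as in Figure 1: red, green and blue cones plus the
   "white" (illumination) receptor. Labels are illustrative. */
enum receptor { REC_R, REC_G, REC_B, REC_W, N_RECEPTORS };

struct receptor_pair { enum receptor a, b; };

/* Writes every unordered pair of distinct receptor types into out (at most
   cap entries) and returns how many were written: 4 * 3 / 2 = 6 when cap
   is large enough. */
size_t receptor_pairs(struct receptor_pair *out, size_t cap)
{
    size_t n = 0;
    for (int i = 0; i < N_RECEPTORS; ++i) {
        for (int j = i + 1; j < N_RECEPTORS; ++j) {
            if (n == cap)
                return n;
            out[n].a = (enum receptor)i;
            out[n].b = (enum receptor)j;
            ++n;
        }
    }
    return n;
}
```

In the resulting order, pairs 0, 1 and 3 (red-green, red-blue, green-blue) are the three color pairs of the bipolar cells; the other three couple a cone type with the white receptor.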

Next, we take the mechanism of the opponent color theory into account for all possible color receptor types; that is, we have to explain how all 1.7 million colors and 160 “steps” of white that we can detect (see) are defined.

At first this sounds easy, but nature cannot count, so ultimately we have only chemical/physical potentials and, for their interaction, symmetry operations. And as if that were not difficult enough, such a potential theory ultimately has to be converted into numbers if we want to use it in computer vision.

Again, we start simply and define a potential by numbers, with a lowest value of 0 and a maximum value of 255 (you know this: it is the RGB color model). Why can we do this? Taking a look at quantum mechanics, we obtain harmonic oscillators, which allow only certain discrete states to be stable, and these can change into one another only by a fixed quantum; in our case, this quantum is represented by the integer 1. You see, sometimes bionic theory is simple.

Now we have a potential, with potential levels represented by the different color values of the RGB space. Next we only need a symmetry operation that is close to the opponent color theory [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13] and must reflect the following points:

Both colors of each of the three possible opponent color potentials modulate each other in the following manner:

- The larger potential (the larger value of the two opponent colors) defines the color impression of the resulting color. If no opponent color is present, we see the spectral color (red, green, blue).

- In the state of equilibrium of the two opponent colors (when neither of them modulates the other), we get the colors we call middle colors: yellow (red-green opponents), purple (red-blue opponents), and turquoise (green-blue opponents). If these colors have the same potential, they form the palette from dark gray to white.

- The modulation range of each color and of the white information is [0, 255]; the modulation cannot lead to negative values, and it has to reflect the following considerations.
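The rules above only require comparing the two potentials of an opponent pair. The C sketch below illustrates this categorization, assuming that a plain comparison of 8-bit values is an adequate stand-in; it is not the authors' three-compartment symmetry operation from [14], which is not reproduced here, and it ignores saturation and pastel colors. All names are hypothetical.

```c
#include <stdint.h>

/* Categories as named in the text: the dominant channel is ON, the weaker
   one OFF; equal potentials give the equilibrium (middle color). */
enum category { CAT_OFF = -1, CAT_EQ = 0, CAT_ON = 1 };

/* Category of potential a relative to its opponent b. Only the ordering of
   the two 8-bit values is used, no thresholds or other fixed numbers. */
enum category categorize(uint8_t a, uint8_t b)
{
    if (a > b) return CAT_ON;
    if (a < b) return CAT_OFF;
    return CAT_EQ;
}

/* Color impression of one opponent pair: the spectral color of the dominant
   channel, or the pair's middle color at equilibrium, e.g.
   pair_impression(r, g, "red", "green", "yellow"). */
const char *pair_impression(uint8_t a, uint8_t b, const char *color_a,
                            const char *color_b, const char *middle)
{
    switch (categorize(a, b)) {
    case CAT_ON:  return color_a;
    case CAT_OFF: return color_b;
    default:      return middle;
    }
}

/* 1 if all three potentials are equal, i.e. the achromatic palette from
   dark gray to white described in the text. */
int is_achromatic(uint8_t r, uint8_t g, uint8_t b)
{
    return r == g && g == b;
}
```

For example, `pair_impression(255, 0, "red", "green", "yellow")` returns `"red"` (no opponent present, spectral color), while equal inputs such as (128, 128) return the middle color `"yellow"`.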

Before colors appeared in nature, an individual could only distinguish dark from bright. This changed rapidly when more than one “color sensor” entered the stage. Suddenly several pieces of information competed, but they were not allowed to leave the range [0, 255] just defined and had to include gray and white in combination. In more physical terms: the moment two sensors appear in the eye, there are two different potentials that have to interact. Here, and only here, the next assumption of classical vision theory has to be discarded: two different information channels, two different potentials, can interact, but they can never form a common average. They remain separate for all time: solitaires, communicating solitaires, but solitaires. That this is true can be seen from the fact that the different color and white channels are kept separate along the whole information path from the eye to the visual cortex, up to the identifying structures of the pyramidal cells.

Two small points describe the “tricky part” of the symmetry operation we are looking for. A modulating term, or better a modulation operation, now has to resolve some weak points in common theories; most importantly, it also has to define, without fixed numbers, what “larger than”, “equal to”, or “lower than” means for a symmetry in color space. Put more briefly: under every possible lighting and color condition it should yield an adequate categorization, which in the retina is realized by the ON cells, the OFF cells, and the equilibrium pathway. And these three pathways have no negative potentials, no inhibitory or excitatory accessory neurons, and a (genetically defined) fixed structure that cannot be conditioned.

In 2016 we found such a potential-oriented symmetry operation, which is described, more or less, in [14]. To make a long story short: the basis of this theory is a three-compartment model of information processing in the first two layers of the retina. It follows a Lie algebra, which (again) is used in quantum mechanics to describe the different states of a physical system under varying conditions; such a symmetry structure is intrinsic to rapidly varying potential structures.

Such a symmetry operation can be implemented in 12 lines of ordinary C code, which opens the door to fast robot vision control, fast picture analysis, and fast color restoration, as discussed in this paper.

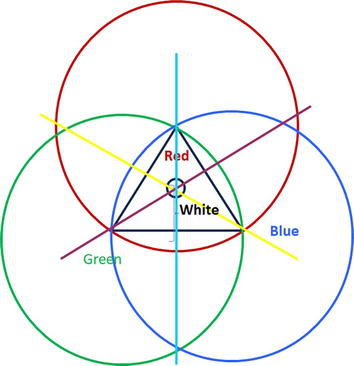

But first, let us summarize in Figure 2 what we have just discussed. Shown are the potentials of the colors red, green, and blue with a value of 255. This denotes the maximum value by which each can modulate its counter color, which will then be zero. If both colors modulate each other equally, we get the middle colors (yellow, purple, and turquoise), which also modulate one another. In the equilibrium states they produce white, since in all cases (yellow to blue, green to purple, and red to turquoise) all three potentials contribute to the final color. Please note that so far we have not taken the effect of saturation into account, nor the pastel colors.

Figure 2.

Potential oriented color model (description see text above).

Also note that only within the dark triangle do our three potentials interact in a way that produces colors.

Let us quickly check whether our potential theory makes sense. Take the middle color yellow. The radius from red is half of 255, that is, 127.5. The radius from green is also half of 255, that is, 127.5. If these two contributions are added, we get 255. So, at least under full illumination, no information (defined by the value 255) is lost.
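Written as a single line of arithmetic, with $r_{\mathrm{red}}$ and $r_{\mathrm{green}}$ denoting the two radii (our notation, not the authors'):

$$
r_{\mathrm{red}} = r_{\mathrm{green}} = \frac{255}{2} = 127.5, \qquad r_{\mathrm{red}} + r_{\mathrm{green}} = 127.5 + 127.5 = 255
$$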

Our three-compartment model has an intrinsic feature that reflects this example: whatever the values of the two opponent colors, the results for both (modulated) colors, or in more physical terms the values of the oscillator, never leave the defined range [0, 255]. For this reason, the color space of Figure 2 is a linear one.

As shown in the theory, the spectral colors are modulated out of the middle colors. With this trick, nature responds to the occurrence of different receptors acting in different bands of the electromagnetic spectrum. Ultimately, the dominance of one of them leads to a kind of bifurcation out of the middle color. This bifurcation point leads to a categorization in which the dominant color is ON (the ON-pathway neuron in the retina is activated) and the subdominant color is OFF (the OFF-pathway neuron in the retina is activated).

That this bifurcation cannot follow a simple plus-minus calculation should now be clear, since otherwise negative values or values larger than 255 would be possible. The practical result for robot vision is that the colors resulting from the modulation will be completely different from those in common systems.
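The range problem of a naive plus-minus calculation is easy to reproduce. This C sketch, with hypothetical helper names, computes the sum and difference of two 8-bit opponent potentials in `int` so that out-of-range results stay visible, and adds the usual workaround of hard clipping for comparison.

```c
#include <stdint.h>

/* Naive opponent "modulation" by plain subtraction and addition. The
   arithmetic is done in int so that negative values and values above 255
   are not hidden by unsigned wrap-around. */
int naive_minus(uint8_t on, uint8_t off) { return (int)on - (int)off; }
int naive_plus(uint8_t on, uint8_t off)  { return (int)on + (int)off; }

/* 1 if v lies inside the 8-bit potential range [0, 255], else 0. */
int in_potential_range(int v) { return v >= 0 && v <= 255; }

/* The usual workaround: hard clipping into [0, 255]. */
uint8_t clip_u8(int v) { return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v)); }
```

With these helpers, 50 - 200 gives -150 and 200 + 100 gives 300, both outside the range. Clipping keeps results in range but erases the difference between a strong and a weak OFF response (both -150 and -10 become 0), which is one way to see why the text rejects a plain plus-minus model.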

Some technical questions:

What is an edge or contour?

It’s the change from one categorization (ON, OFF, no modulation) to another categorization.

What’s a plane?

It is the absence of a change in categorization.
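These two definitions translate directly into code: an edge is a position where the ON/OFF/equilibrium category of an opponent pair changes, and a plane is a run where it does not. Here is a minimal C sketch along one scan line. It assumes a simple ordering-based categorization as a stand-in for the authors' operator from [14], and the function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* +1 (ON), -1 (OFF) or 0 (equilibrium) for potential a against opponent b,
   decided by ordering alone. */
static int scan_category(uint8_t a, uint8_t b)
{
    return (a > b) - (a < b);
}

/* Scans one line of n pixels of an opponent pair (a[i], b[i]).
   edges[i] is set to 1 where the category differs from pixel i - 1 (an edge)
   and to 0 where it is unchanged (a plane); edges[0] is always 0.
   Returns the number of edges found. */
size_t mark_edges(const uint8_t *a, const uint8_t *b, uint8_t *edges, size_t n)
{
    size_t count = 0;
    for (size_t i = 0; i < n; ++i) {
        edges[i] = 0;
        if (i > 0 && scan_category(a[i], b[i]) != scan_category(a[i - 1], b[i - 1])) {
            edges[i] = 1;
            ++count;
        }
    }
    return count;
}
```

For example, red = {200, 210, 90, 80, 120, 120} against green = {100, 100, 150, 160, 120, 120} yields edges at positions 2 and 4 only. The step from 200 to 210 changes the values but not the category, so it counts as plane.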

Are the dimensions of the receptive fields limited?

No, they are not (except by the number of neurons in the anatomical structures of the retina). This is possible because the gap junctions short-circuit the different receptor potentials (which should not be confused with calculating an average potential).

3. Methods and results

The anatomical structure of the first three layers is well investigated. We know that in the fovea centralis every sensor has its own ON and OFF bipolar cell, whereas in the periphery of the retina a large number of receptors innervate single ON and OFF bipolar cells. As a result, we can only see really sharply with the fovea centralis.

So there are at least two kinds of receptive field dimensions. Looking at the horizontal and amacrine cells, on the other hand, we see that their receptive fields seem to be somewhat flexible, depending on the momentary illumination, scene vigilance, and the presence of accelerated objects.

With this in mind, we started our experiments with the new model by varying the inner and outer receptive field dimensions (the center and surround dimensions of the receptive fields).
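To make the two varied parameters concrete, here is a C sketch of a single receptive field with an adjustable center half-width `rc` and surround half-width `rs`, following the arrangement described in Section 2 (center from one color channel, surround from another). Two assumptions are ours and purely illustrative: the receptive fields are square, and the “mixed potential” is approximated by an integer mean, even though the text stresses that gap-junction coupling should not be confused with averaging. The function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a "mixed potential": integer mean of one 8-bit
   channel over the square ring rin < max(|dx|, |dy|) <= rout around (x, y).
   Pixels outside the w x h image are skipped; rin = -1 selects the full
   square. Returns 0 if no pixel falls inside the ring. */
static uint8_t ring_mean(const uint8_t *ch, int w, int h, int x, int y,
                         int rin, int rout)
{
    long sum = 0, n = 0;
    for (int dy = -rout; dy <= rout; ++dy) {
        for (int dx = -rout; dx <= rout; ++dx) {
            int ax = dx < 0 ? -dx : dx, ay = dy < 0 ? -dy : dy;
            int d = ax > ay ? ax : ay;          /* Chebyshev distance */
            int xx = x + dx, yy = y + dy;
            if (d <= rin || xx < 0 || yy < 0 || xx >= w || yy >= h)
                continue;
            sum += ch[(size_t)yy * (size_t)w + (size_t)xx];
            ++n;
        }
    }
    return n ? (uint8_t)(sum / n) : 0;
}

/* Center potential from center_ch (half-width rc) against surround potential
   from surround_ch (ring rc < d <= rs). Returns +1 (center dominates, ON),
   -1 (surround dominates, OFF) or 0 (equilibrium). */
int center_surround(const uint8_t *center_ch, const uint8_t *surround_ch,
                    int w, int h, int x, int y, int rc, int rs)
{
    uint8_t c = ring_mean(center_ch, w, h, x, y, -1, rc);
    uint8_t s = ring_mean(surround_ch, w, h, x, y, rc, rs);
    return (c > s) - (c < s);
}
```

For instance, on a 5 × 5 patch with a single bright red pixel (200) on a red background of 50 and a uniform green of 100, the center position is ON for `rc = 0`. Widening the center to `rc = 1` dilutes it to a mean of 66, and the same position becomes OFF. In this toy setting, the receptive-field size decides which contours survive.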

As expected, the sharpness of the detected contours varies, as shown in Figure 3. As a welcome side effect, noisy fluctuations in the picture disappear. Both effects, and this is what is really new, are completely independent of the illumination of the receptive fields, as long as there is a difference of at least 1 between the opponent receptor potentials in the 3-K model.
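The claimed illumination independence can be illustrated with an ordering-only categorization. Dimming a scene scales both opponent potentials together, so their order, and thus the category, is preserved until re-quantization erases the difference. In this C sketch the dimming model (scale by `num/den`, then truncate to an integer level) and all names are assumptions for illustration, not the 3-K model itself.

```c
#include <stdint.h>

/* +1, -1 or 0 for potential a against its opponent b, by ordering alone. */
int order_category(uint8_t a, uint8_t b)
{
    return (a > b) - (a < b);
}

/* Hypothetical dimming model: scale a potential by num/den (num <= den) and
   truncate back to an integer level, as a darker exposure would. */
uint8_t dim_level(uint8_t v, unsigned num, unsigned den)
{
    return (uint8_t)((v * num) / den);
}

/* 1 if the category of the pair (a, b) is unchanged after dimming. */
int category_survives_dimming(uint8_t a, uint8_t b, unsigned num, unsigned den)
{
    return order_category(a, b) ==
           order_category(dim_level(a, num, den), dim_level(b, num, den));
}
```

With a dimming factor of 1/4, the pair (200, 150) becomes (50, 37) and keeps its ON category, whereas (101, 100) becomes (25, 25) and falls into equilibrium. This matches the condition that a difference of at least 1 must remain.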

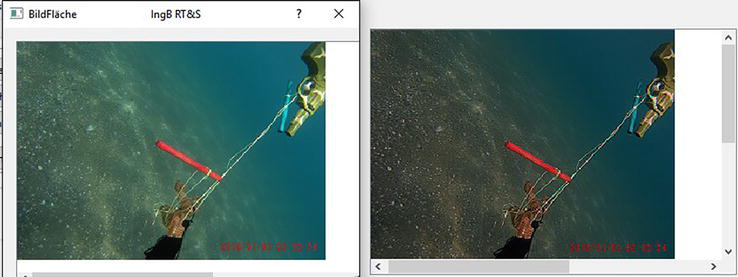

Figure 3.

Shown here is a toy array at a depth of 1.5 m. In addition to the considerable increase in contrast, it is noticeable that the colors appear stronger and the seabed is colored completely differently. The image was taken at dusk, and after filtering the colors correspond to human sensory impressions.

And what happens to the colors of a scene? They are modulated in such a way that the contours become sharper and the colors are restored. What does “restored” mean? As we have learned, the sensor signal itself does not carry the information; it carries only the power to modulate its opponents.

So colors can change dramatically. Our camera picture in which the moon is silver shows, after filtering, a golden moon, but of course only if the moon really was golden. This means that the “true colors” are encoded somewhere in the camera picture and that the 3-K model can decode them. Transferring this knowledge to the biological receptors in the retina means that they, too, can be as primitive as technical receptors, i.e., largely non-adaptive and with a fixed characteristic curve with respect to intensity.

So, ultimately, the colors our receptors detect are not the colors we see. And technically speaking, even primitive cameras can see the world the way we see it. Poor mobile phones: they use more or less expensive camera equipment trying to simulate what a bionic symmetry operation does.

But now let us look at examples. All pictures shown were taken with cheap cameras from various manufacturers (20–80 €). No lighting conditions were imposed, so all images were taken under natural conditions. The original camera pictures are shown on the left, the processed pictures on the right. The processing time per picture is 30 ms. The filter code itself is written in C and has a size of 389 k. No licensed additional libraries were used, because the filter is a stand-alone application.

The first images were taken with a simple underwater camera at different depths. What interested us most here were the different lighting conditions and, above all, suspended matter, for which artificial lighting would be rather disturbing (Figures 4–7).

Figure 4.

In this image, the seabed was photographed at a depth of 2 m during the day. Once again you can see the clear increase in contrast and a brightening of the image, since according to the 3-K model the color modulations lead to an increase in the color components in RGB space.

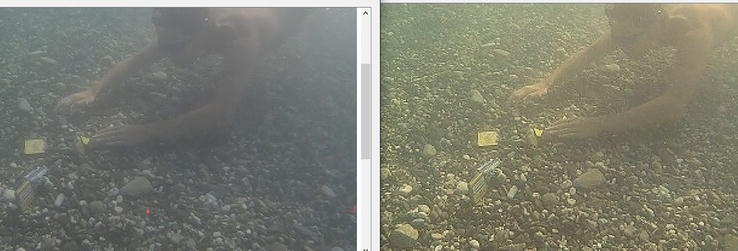

Figure 5.

This effect becomes even clearer when there are objects in the scene (here, the diver at a depth of 3 m). In addition, the filtering is not significantly disrupted by suspended matter in the sea.

Figure 6.

Shown here is part of a so-called “ghost net” at a depth of 12 m, i.e., a lost fishing net. Unfortunately, these pose an immediate danger, especially to marine mammals, which is why they are on the list of human artifacts that urgently need to be recovered. In order to recover them, it is extremely important to clearly distinguish them from the seabed, even when there is heavy vegetation (as here).

Figure 7.

Here an aircraft bomb from the Second World War is shown at a depth of 40 m. For such objects, details regarding shape, markings, degree of corrosion, and possible damage are of particular interest, as they define the immediate threat to the marine habitat. The difference from the previous images is that the bionic filters also allow artificial post-lighting without causing color artifacts.

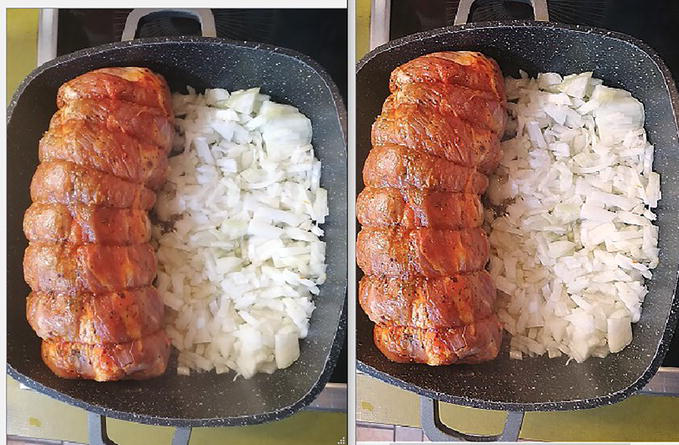

Let us move on to more everyday examples. Below are three pictures from the food industry. The aim here was to achieve the highest possible sharpness as well as natural colors and color gradients from existing photos (Figures 8–10).

Figure 8.

Shown here is a typical German dish: roast pork stewed with onions in a beer soup. What is noticeable in the original is that the onions appear unnaturally white for a steaming process. In addition, the reflections on the roast in the original image are rather unnaturally weak. Both issues are eliminated by the bionic filters.

Figure 9.

The same effects can be seen in the finished dish. In addition, the potatoes no longer appear raw but actually cooked, as they have taken on the color of boiled potatoes. Owing to the three-dimensional highlighting of the objects in the image, you can now easily see in the filtered image that there is a bean at the bottom edge, as it stands out clearly from the pattern on the plate.

Figure 10.

Lastly, the pork before it was plated. Although the bionic filters hardly change the coloring here, thanks to the good lighting of the original photo, the details of the pork and the grill, especially the charcoal, are enhanced. This makes the image appear more natural and realistic.

As you can see, the bionic filter primarily creates a contrast that not only makes the image itself appear sharper but also creates a quasi-three-dimensional impression. This also explains why some images appear more plastic to us than others: it is the particular representation of edges and color transitions that makes objects appear three-dimensional in our brain.

4. Conclusions

Using examples, we have shown that a potential-oriented model of the opponent color theory yields a completely new image processing system that is closer to human processing of light stimuli than previously known approaches. But the actual insight from this bionic image processing is more profound. It is not complex information steps, nor steps that take place only in the brain areas, that lead to a three-dimensional and true-color experience of optical impressions; rather, this information transduction takes place in the first two layers of the retina. In recent decades we have come to understand that our eye is not just a camera but, in its function as an upstream brain, a kind of supercomputer. What is new is that this computer processes information in a completely different way from what we are used to. It simply follows a different logic, which enables it to extract the optimum from even poor sensory image recordings with just one symmetry operation.

Acknowledgments

Figures 5–7 were collected as part of the EU-funded maritime research project “EROVMUS” and are therefore subject to additional copyright.

References

1. Hering E. Outlines of a Theory of the Light Sense. Cambridge, Mass: Harvard University Press; 1964.

2. Conway BR, Malik-Moraleda S, Gibson E. Color appearance and the end of Hering’s opponent-colors theory. Trends in Cognitive Sciences. 2023; 27 (9):791-804. DOI: 10.1016/j.tics.2023.06.003 [Accessed: August 31, 2023]

3. Werner JS, Walraven J. Effect of chromatic adaptation on the achromatic locus: The role of contrast, luminance and background color. Vision Research. 1982; 22 (8):929-943.

4. Stockman A, MacLeod DI, Johnson NE. Spectral sensitivities of the human cones. Journal of the Optical Society of America A. 1993; 10 (12):2491-2521.

5. Shinomori K, Spillmann L, Werner JS. S-cone signals to temporal off-channels: possible asymmetrical connections to postreceptoral chromatic mechanisms. Vision Research. 1998; 39 (1):39-49.

6. Jameson D, Hurvich LM. Some quantitative aspects of an opponent-colors theory. I. Chromatic responses and spectral saturation. Journal of the Optical Society of America. 1955; 45:546-552.

7. Larimer J, Krantz D, Cicerone C. Opponent process additivity-II. Yellow/blue equilibria and non-linear models. Vision Research. 1975; 15:723-731.

8. Elzinga C, de Weert CMM. Nonlinear codes for the yellow/blue mechanism. Vision Research. 1984; 24:911-922.

9. Long Z, Qiu X, Chan CLJ, Sun Z, Yuan Z, Poddar S, et al. A neuromorphic bionic eye with filter-free color vision using hemispherical perovskite nanowire array retina. Nature Communications. 2023; 14 (1):1972.

10. Gu L, et al. A biomimetic eye with a hemispherical perovskite nanowire array retina. Nature. 2020; 581:278-282.

11. Sarkar P, Dewangan O, Joshi A. A review on applications of artificial intelligence on bionic eye designing and functioning. Scandinavian Journal of Information Systems. 2023; 35 (1):1119-1127.

12. Paraskevoudi N, Pezaris JS. Eye movement compensation and spatial updating in visual prosthetics: mechanisms, limitations and future directions. Frontiers in Systems Neuroscience. 2019; 12:73.

13. Zhang H, Lee S. Robot bionic vision technologies: A review. Applied Sciences. 2022; 12 (16):7970.

14. Reuter M, Bohlmann S. Biological inspired image analysis for medical applications. Biomimetics and Bionic Applications with Clinical Applications. 2021:211-225.